Fibre Channel: The #1 Choice for Mission-Critical Shared-Storage Connectivity

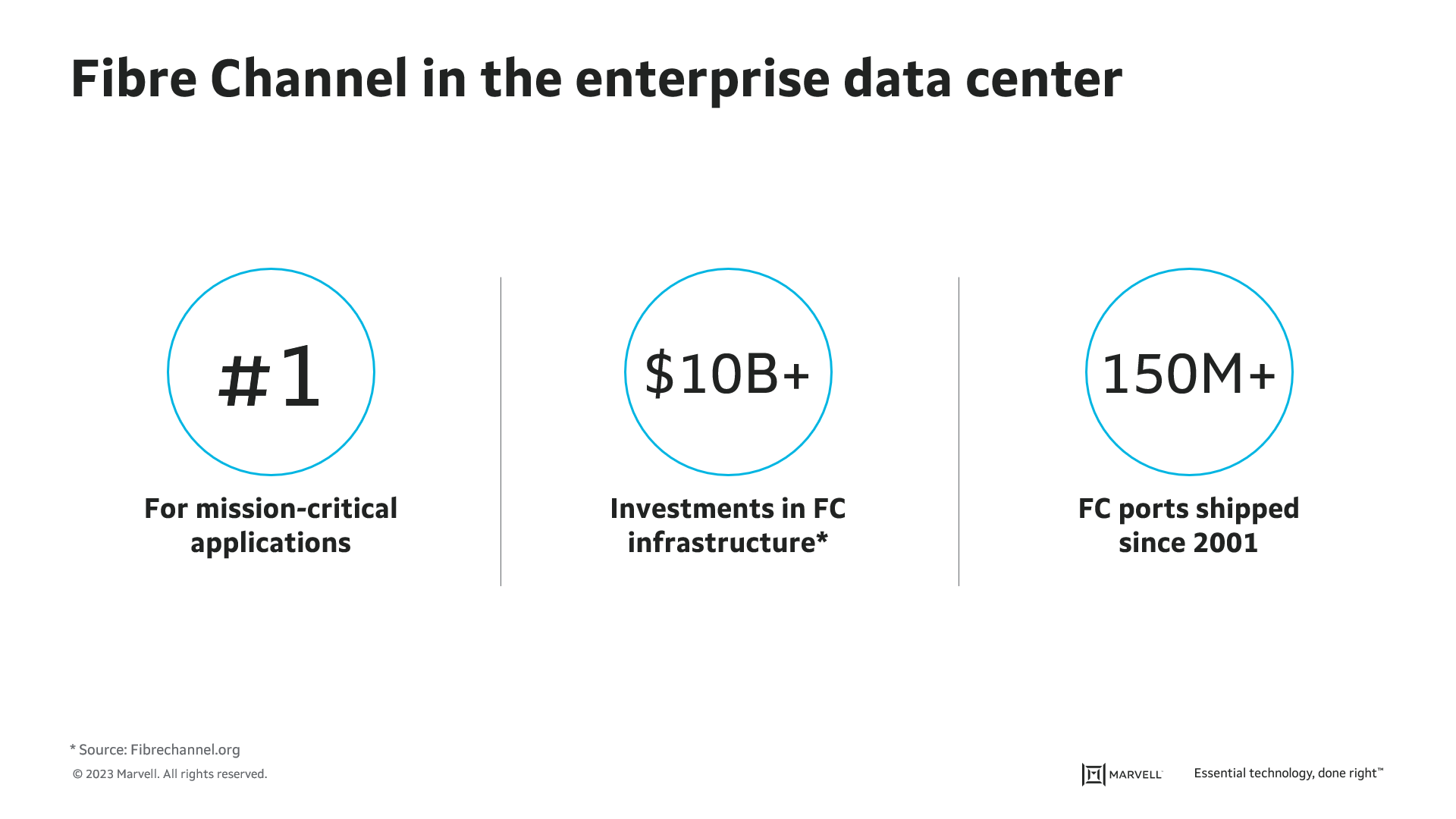

Here at Marvell, we talk frequently to our customers and end users about I/O technology and connectivity. This includes presenting on I/O connectivity at industry events and delivering training to our OEMs and their channel partners. Often, when we discuss the latest innovations in Fibre Channel, audience questions center on how relevant Fibre Channel (FC) technology is in today’s enterprise data center. This is understandable, as many in the industry have been proclaiming the demise of Fibre Channel for several years. However, these claims are often misguided, reflecting a lack of understanding of the key attributes of FC technology that continue to make it the gold standard for mission-critical application environments.

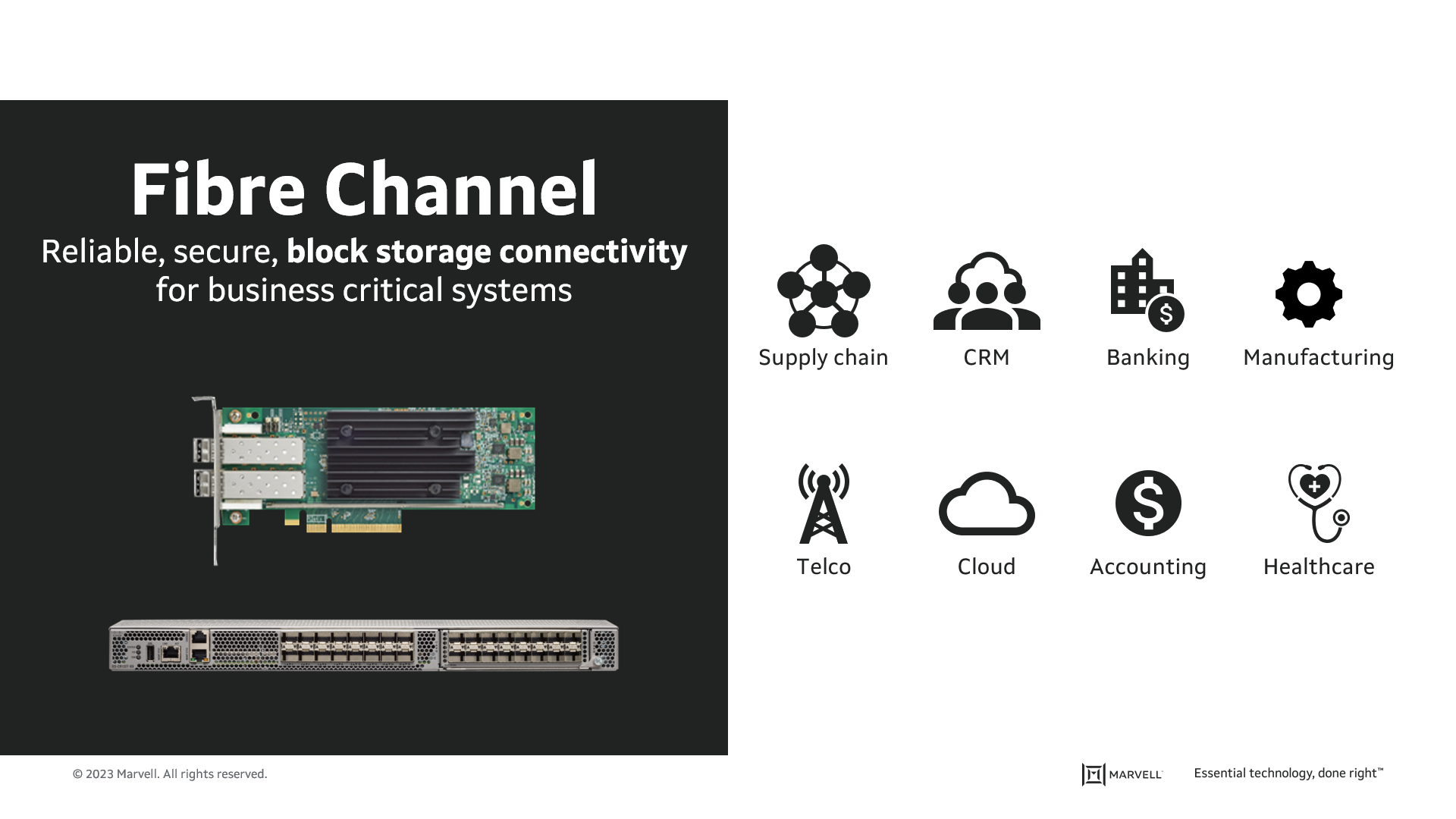

From inception several decades ago, and still today, FC technology is designed to do one thing, and one thing only: provide secure, high-performance, and high-reliability server-to-storage connectivity. While the Fibre Channel industry is made up of a select few vendors, the industry has continued to invest and innovate around how FC products are designed and deployed. This isn’t just limited to doubling bandwidth every couple of years but also includes innovations that improve reliability, manageability, and security.

FC is designed from the ground up to service storage connectivity, using a buffer credit mechanism to ensure reliable and in-order delivery of storage data between hosts and target storage devices. Furthermore, FC host bus adapters (HBAs) have always been fully offloaded, providing for more efficient and lower latency I/O communications. Also, FC storage area networks (SANs) are deployed within the data center in a fully redundant architecture, providing high-availability and secure air-gapped networks for mission-critical applications that require high-capacity data storage.
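To make the buffer credit idea concrete, here is a minimal sketch in Python. It is a toy model rather than an implementation of the FC standard: the class and function names (`CreditedLink`, `transfer`) are hypothetical, and the receiver is assumed to free one buffer at a time. What it illustrates is the general principle that a sender transmits only while it holds credits, so frames are never dropped for lack of receive buffers and arrive in order.

```python
from collections import deque


class CreditedLink:
    """Toy model of one direction of a link using buffer-to-buffer credits."""

    def __init__(self, bb_credit: int):
        self.credits = bb_credit   # credits the sender currently holds
        self.rx_buffers = deque()  # frames occupying receiver buffers
        self.delivered = []        # frames handed to the upper layer, in order

    def send(self, frame) -> bool:
        """Transmit only if a credit is available; otherwise the sender waits."""
        if self.credits == 0:
            return False
        self.credits -= 1
        self.rx_buffers.append(frame)
        return True

    def receiver_drain(self) -> None:
        """Receiver frees one buffer and returns one credit to the sender."""
        if self.rx_buffers:
            self.delivered.append(self.rx_buffers.popleft())
            self.credits += 1  # models the receiver-ready signal coming back


def transfer(frames, bb_credit):
    """Send all frames over a credited link; return (delivered, stall count)."""
    link = CreditedLink(bb_credit)
    pending = deque(frames)
    stalls = 0
    while pending:
        if link.send(pending[0]):
            pending.popleft()
        else:
            stalls += 1            # sender pauses instead of dropping the frame
            link.receiver_drain()
    while link.rx_buffers:         # receiver finishes the frames still buffered
        link.receiver_drain()
    return link.delivered, stalls


delivered, stalls = transfer(list(range(10)), bb_credit=2)
print(delivered, stalls)
```

With only two credits the sender stalls repeatedly, but every frame still arrives and the order is preserved. That is the tradeoff the credit scheme makes: back-pressure instead of loss.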

The Fibre Channel industry also continues to innovate in how FC SANs are deployed and managed using standards-based technologies. Nameserver technology was incorporated into FC switches over a decade ago to greatly simplify device mapping and zoning. SAN administrators can identify and zone all devices in the storage network with easy-to-use drag-and-drop user interfaces in the FC switch management software. Compared to Ethernet, where there are hundreds of commands to execute to map and mask even a small iSCSI deployment, the FC approach is much faster and less error-prone.

Recent innovations include automating SAN congestion mitigation by implementing Fabric Performance Impact Notification (FPIN) technology. With FPINs, FC switches notify the FC HBAs about congestion issues. The HBA firmware/driver can then take corrective action based on the notification received, such as throttling down specific workloads or failing over from one port to another. Additionally, the industry has worked with VMware to implement virtual machine identification (VM-ID) technology in FC, which allows administrators to monitor and adjust SAN performance on a per-VM basis. More recently, Fibre Channel has embraced NVMe over Fabrics with the introduction of FC-NVMe (NVMe over Fibre Channel), which further extends the value of FC to NVMe-based storage.
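The notify-then-react pattern described above can be sketched as a small dispatch routine. This is an illustrative sketch only, not Marvell driver code: the `Port` class, the `handle_fpin` function, the 50% throttle step, and the 25% floor are all assumptions made for the example. The four notification categories follow the descriptor names used in the FC standards, but the policy mapping each category to an action is hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class FpinType(Enum):
    LINK_INTEGRITY = "link_integrity"
    DELIVERY = "delivery"
    PEER_CONGESTION = "peer_congestion"
    CONGESTION = "congestion"


@dataclass
class Port:
    name: str
    rate_limit_pct: int = 100  # hypothetical throttle knob, percent of line rate
    active: bool = True


def handle_fpin(kind: FpinType, port: Port, standby: Port) -> str:
    """Pick a corrective action for a notification (illustrative policy only)."""
    if kind in (FpinType.CONGESTION, FpinType.PEER_CONGESTION):
        # Assumed policy: halve the rate, but never drop below 25%.
        port.rate_limit_pct = max(25, port.rate_limit_pct // 2)
        return f"throttle {port.name} to {port.rate_limit_pct}%"
    if kind is FpinType.LINK_INTEGRITY:
        # Assumed policy: move traffic to the redundant port.
        port.active, standby.active = False, True
        return f"fail over {port.name} -> {standby.name}"
    return "log only"


primary = Port("port0")
secondary = Port("port1", active=False)
print(handle_fpin(FpinType.CONGESTION, primary, secondary))
print(handle_fpin(FpinType.LINK_INTEGRITY, primary, secondary))
```

A real driver or multipath layer would base these decisions on much richer state, but the shape is the same: the fabric reports the condition and the host decides how to respond.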

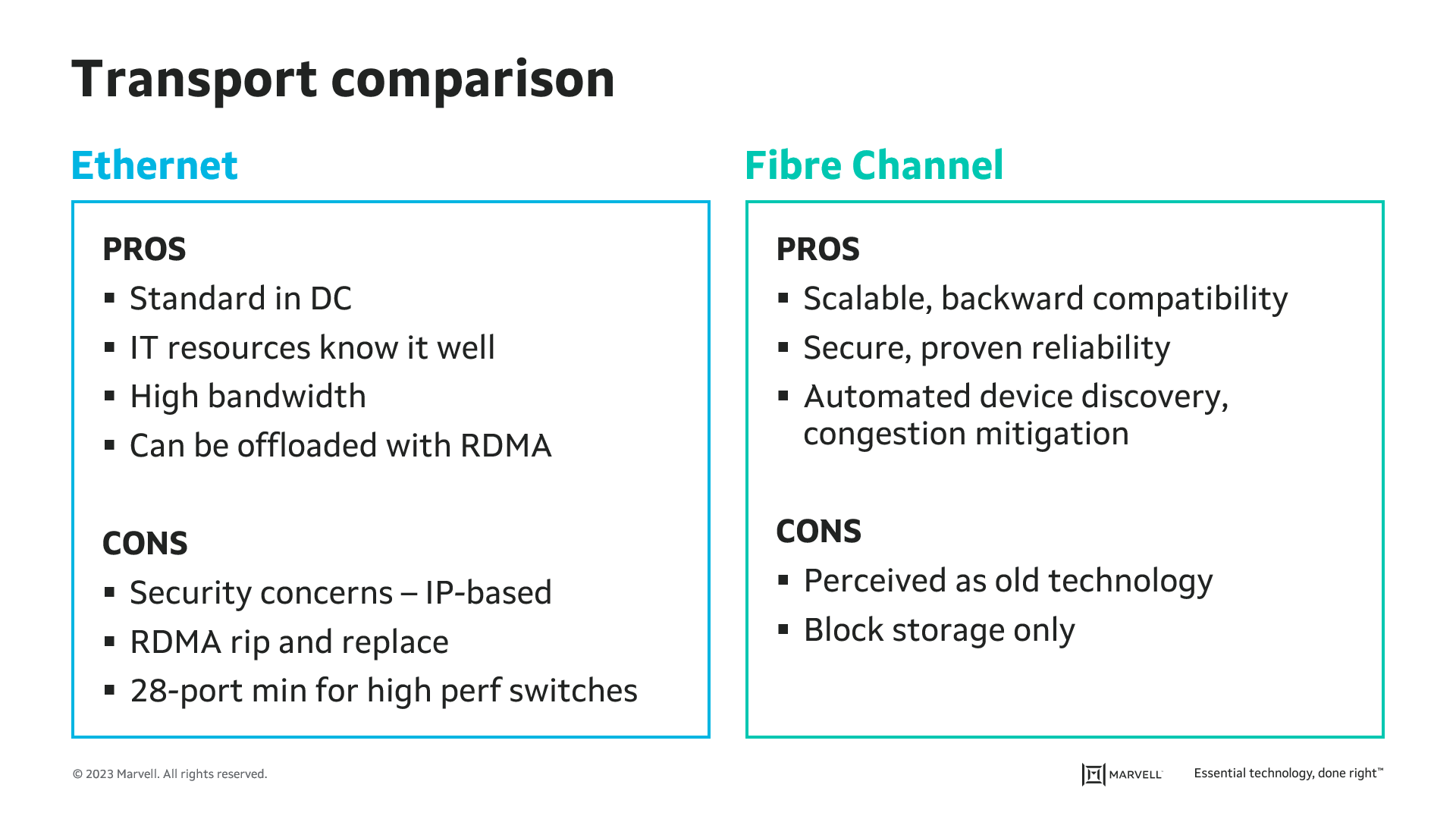

Many of those in the “Fibre Channel is dead” camp claim that Ethernet will replace FC for storage connectivity. The reality is that to make Ethernet do what FC can, there are many hurdles that need to be overcome. There is always a challenge when taking something that was designed for general purposes (Ethernet) and trying to make it mimic something designed for a specific purpose (Fibre Channel).

Data transmission reliability and latency continue to be a challenge when using Ethernet for storage networking. While leveraging remote direct memory access (RDMA) technology and deploying special functions like data center bridging (DCB) can reduce the latency and improve data transmission reliability for Ethernet, these require standard NICs to be replaced with RDMA-enabled devices and necessitate complex configuration and management. These upgrades also reduce the scalability of an Ethernet network to no more than a single rack, compared to the data center-wide scale in which Fibre Channel is typically deployed.

With Ethernet, transitioning from 10GBASE-T to 25Gb Ethernet requires replacing the entire Ethernet infrastructure. Likewise, transitioning from 25Gb Ethernet to 100GbE (or higher) requires replacing optics, switches and NICs. FC technology, however, is backward compatible with two speed generations, which provides significant investment protection for the customer. Today, 16GFC HBAs and switches are compatible with older 4GFC and 8GFC devices, while 32GFC infrastructure supports both 8GFC and 16GFC. The latest 64GFC HBAs and switches are backward compatible with 16GFC and 32GFC as well, often using the same optical cables as earlier generations. Lastly, Ethernet switches typically have fixed port counts, with a minimum of 28 ports for switches at 25GbE and above, so you pay for all ports whether you need them or not. Fibre Channel switches are designed for pay-as-you-grow deployment and are available in 12-port configurations that allow additional ports to be enabled over time. This is particularly beneficial in smaller enterprise deployments.
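The two-generation rule can be expressed in a few lines of Python. This is a simplified sketch: the function names are hypothetical, the speed list uses nominal GFC labels, and real link negotiation also depends on the optics installed, which is ignored here.

```python
# Nominal Fibre Channel speed generations, in GFC.
FC_GENERATIONS = [1, 2, 4, 8, 16, 32, 64]


def supported_speeds(native: int) -> set:
    """Speeds a port supports: its native speed plus the two prior generations."""
    i = FC_GENERATIONS.index(native)
    return set(FC_GENERATIONS[max(0, i - 2): i + 1])


def negotiate(a: int, b: int):
    """Highest speed both ports support, or None if they share no speed."""
    common = supported_speeds(a) & supported_speeds(b)
    return max(common) if common else None


print(negotiate(64, 16))  # a 64GFC port linked to a 16GFC port
print(negotiate(32, 8))   # a 32GFC port linked to an 8GFC port
print(negotiate(64, 8))   # more than two generations apart
```

Under these assumptions, a 64GFC port links to a 16GFC device at 16GFC, while a 64GFC port and an 8GFC device have no speed in common, which matches the compatibility ranges listed above.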

For customers running mission-critical applications, their data is their business. This includes database workloads, financial systems, transportation reservation and tracking systems, and medical databases, among others. Access to this kind of mission-critical data on shared storage arrays must be highly available, performance must be consistent and predictable, and access must be very secure. You can’t achieve all of this with Ethernet connectivity. Only the FC protocol is designed to support these kinds of requirements, and it does so in a very efficient and economical way.

So, the next time you engage with your customers, and there is a need to access mission-critical data, think Fibre Channel. Sure, Ethernet is everywhere. It’s the connection to the internet. However, it is a protocol designed to serve many different masters, and tradeoffs need to be made for different applications. When using Fibre Channel for storage connectivity, there are no tradeoffs in security, reliability, performance, or manageability. That’s what Fibre Channel was designed for in the first place. Fibre Channel has been and will remain the gold standard for server-to-shared-storage connectivity for at least the next decade, if not longer.

Learn more about what Fibre Channel can do for you at www.marvell.com/qlogic

This blog contains forward-looking statements within the meaning of the federal securities laws that involve risks and uncertainties. Forward-looking statements include, without limitation, any statement that may predict, forecast, indicate or imply future events or achievements. Actual events or results may differ materially from those contemplated in this blog. Forward-looking statements are only predictions and are subject to risks, uncertainties and assumptions that are difficult to predict, including those described in the “Risk Factors” section of our Annual Reports on Form 10-K, Quarterly Reports on Form 10-Q and other documents filed by us from time to time with the SEC. Forward-looking statements speak only as of the date they are made. Readers are cautioned not to put undue reliance on forward-looking statements, and no person assumes any obligation to update or revise any such forward-looking statements, whether as a result of new information, future events or otherwise.

Tags: data centers, data storage, Fibre Channel, Fibre Channel connectivity, storage connectivity
