Archive for the 'Cloud' Category

-

June 18, 2024

Custom Compute in the AI Era

This article is the final installment in a series on talks delivered at Accelerated Infrastructure for the AI Era, a one-day symposium held by Marvell in April 2024.

AI demands are pushing the limits of semiconductor technology, and hyperscale operators are at the forefront of adoption—they develop and deploy leading-edge technology that increases compute capacity. These large operators seek to optimize performance while simultaneously lowering total cost of ownership (TCO). With billions of dollars on the line, many have turned to custom silicon to meet their TCO and compute performance objectives.

But building a custom compute solution is no small matter. Doing so requires a large IP portfolio, significant R&D scale and decades of experience to create the mix of ingredients that make up custom AI silicon. Today, Marvell is partnering with hyperscale operators to deliver custom compute silicon that’s enabling their AI growth trajectories.

Why are hyperscale operators turning to custom compute?

Hyperscale operators have always been focused on maximizing both performance and efficiency, but new demands from AI applications have amplified the pressure. According to Raghib Hussain, president of products and technologies at Marvell, “Every hyperscaler is focused on optimizing every aspect of their platform because the order of magnitude of impact is much, much higher than before. They are not only achieving the highest performance, but also saving billions of dollars.”

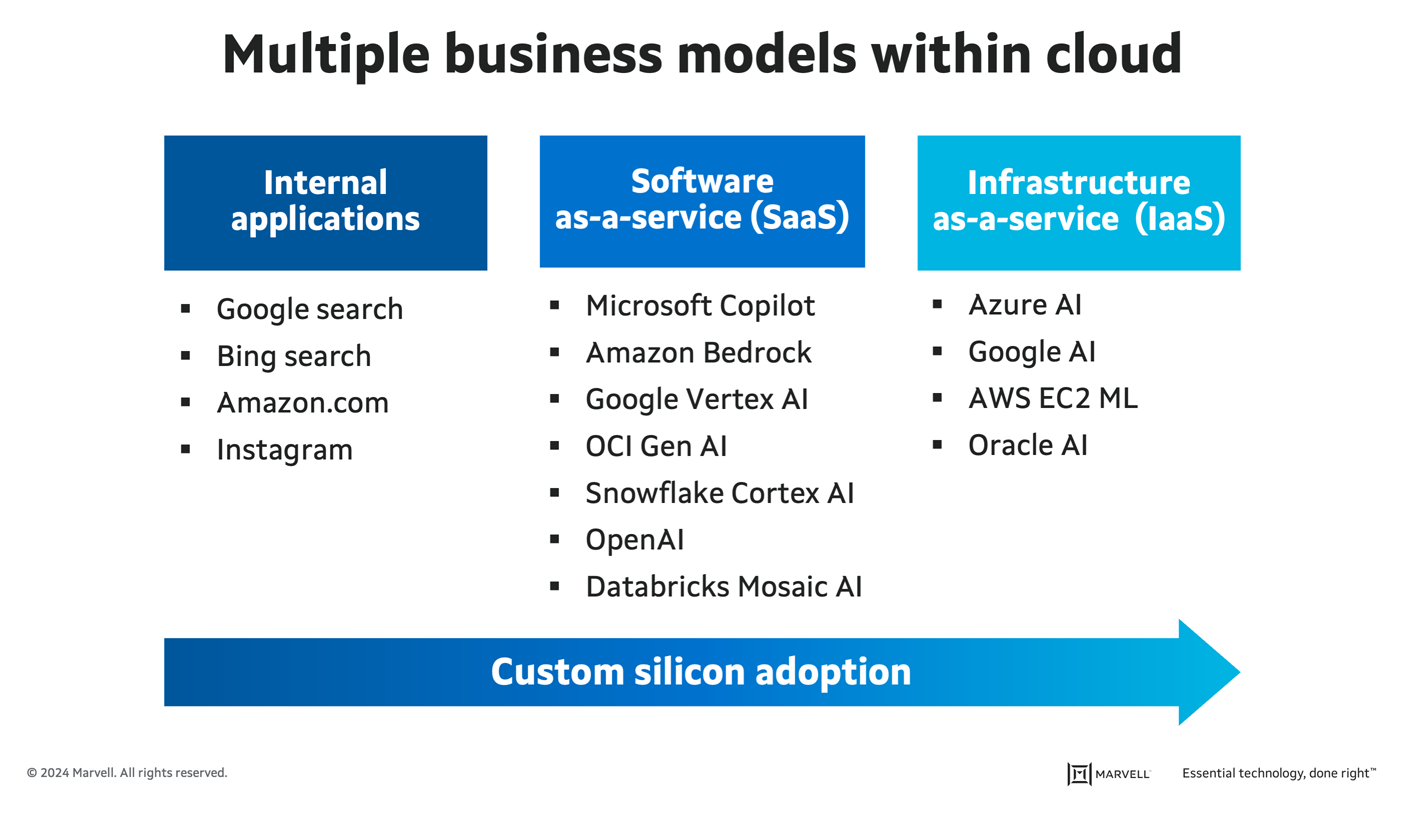

With multiple business models in the cloud, including internal apps, infrastructure-as-a-service (IaaS), and software-as-a-service (SaaS), the last of which is the fastest-growing market thanks to generative AI, hyperscale operators are constantly seeking ways to improve their total cost of ownership. Custom compute allows them to do just that. Operators are first adopting custom compute platforms for their mass-scale internal applications, such as search and their own SaaS applications. Next up for greater custom adoption will be third-party SaaS and IaaS, where the operator offers its own custom compute as an alternative to merchant options.

Progression of custom silicon adoption in hyperscale data centers.

-

June 11, 2024

How AI Will Drive Cloud Switch Innovation

This article is part five in a series on talks delivered at Accelerated Infrastructure for the AI Era, a one-day symposium held by Marvell in April 2024.

AI has fundamentally changed the network switching landscape. AI requirements are driving foundational shifts in the industry roadmap, expanding the use cases for cloud switching semiconductors and creating opportunities to redefine the terrain.

Here’s how AI will drive cloud switching innovation.

A changing network requires a change in scale

In a modern cloud data center, the compute servers are connected to one another and to the internet through a network of high-bandwidth switches. The approach is like that of the internet itself, allowing operators to build a network of any size while mixing and matching products from various vendors to create a network architecture specific to their needs.

Such a high-bandwidth switching network is critical for AI applications, and a higher-performing network can lead to a more profitable deployment.

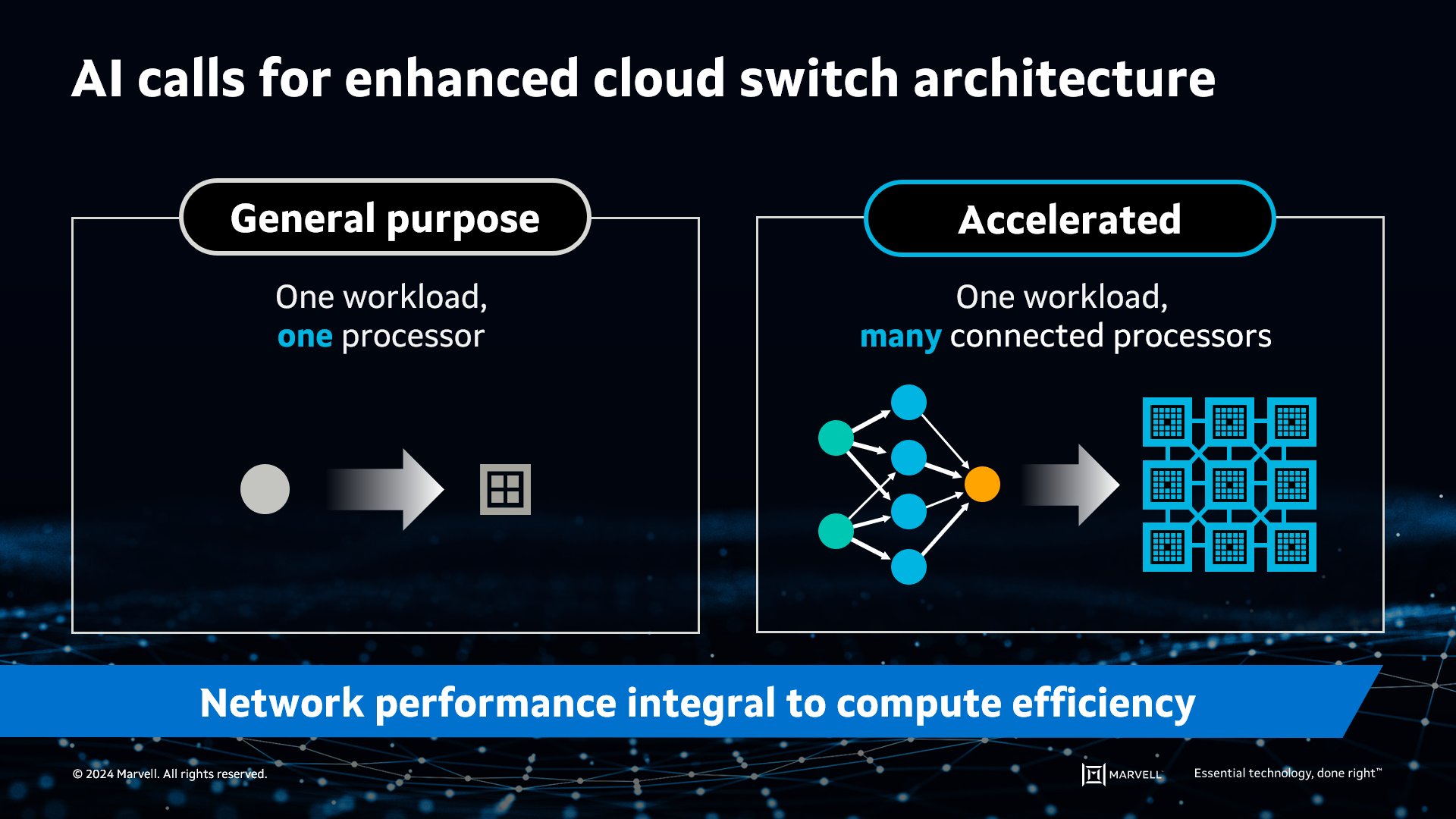

However, expanding and extending the general-purpose cloud network to AI isn’t quite as simple as just adding more building blocks. In the world of general-purpose computing, one or more workloads can fit on a single server CPU. In contrast, AI’s large datasets don’t fit on a single processor, whether it’s a CPU, GPU or other accelerated compute device (XPU), making it necessary to distribute the workload across multiple processors. These accelerated processors must function as a single computing element.

AI requires accelerated infrastructure to split workloads across many processors.
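As a rough illustration of what distributing a workload across processors means, here is a minimal Python sketch of the data-parallel pattern: the dataset is split into shards, each processor works on its own shard, and the partial results are combined. The function names (`shard`, `partial_sum`, `all_reduce_sum`), the four-worker count and the toy dataset are assumptions made for illustration only and do not come from the talk. Everything here runs in one process, whereas in a real cluster the combining step is the part that travels over the switching network.

```python
from typing import List, Sequence


def shard(data: Sequence[float], num_workers: int) -> List[List[float]]:
    """Split data into num_workers near-equal contiguous shards."""
    if num_workers <= 0:
        raise ValueError("num_workers must be positive")
    base, extra = divmod(len(data), num_workers)
    shards: List[List[float]] = []
    start = 0
    for rank in range(num_workers):
        # The first `extra` workers take one additional item each.
        size = base + (1 if rank < extra else 0)
        shards.append(list(data[start:start + size]))
        start += size
    return shards


def partial_sum(shard_data: Sequence[float]) -> float:
    """Work done locally by one (hypothetical) processor on its own shard."""
    return sum(shard_data)


def all_reduce_sum(partials: Sequence[float]) -> float:
    """Combine per-processor results.

    Simulated in-process here; on real hardware this exchange
    crosses the network between processors.
    """
    return sum(partials)


data = list(range(1, 11))             # toy dataset: 1..10
shards = shard(data, num_workers=4)   # hypothetical 4-processor cluster
partials = [partial_sum(s) for s in shards]
total = all_reduce_sum(partials)
```

The combined `total` matches what a single processor would compute over the whole dataset, which is the sense in which the processors behave as one computing element.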

-

March 23, 2023

How Secure Are 5G Networks?

By Bill Hagerstrand, Security Solutions BU, Marvell

New Challenges and Solutions in an Open, Disaggregated Cloud-Native World

Time to grab a cup of coffee, as I describe how the transition towards open, disaggregated, and virtualized networks – also known as cloud-native 5G – has created new challenges in an already-heightened 4G-5G security environment.

5G networks move, process and store an ever-increasing amount of sensitive data as a result of faster connection speeds, the mission-critical nature of new enterprise, industrial and edge computing/AI applications, and the proliferation of 5G-connected IoT devices and data centers. At the same time, evolving architectures are creating new security threat vectors. The opening of the 5G network edge is driven by O-RAN standards, which disaggregate the radio units (RU), front-haul, mid-haul, and distributed units (DU). Virtualization of the 5G network further disaggregates hardware and software and introduces commodity servers with open-source software running in virtual machines (VMs) or containers from the DU to the core network.

As a result, these factors have necessitated improvements in 5G security standards that include additional protocols and new security features. But these measures alone are not enough to secure the 5G network in the cloud-native and quantum computing era. This blog details the growing need for cloud-optimized hardware security modules (HSMs) and their many critical 5G use cases from the device to the core network.

-

January 04, 2023

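Software-Defined Networking for Software-Defined Vehicles

By Amir Bar-Niv, Vice President of Marketing, Automotive Business Unit, Marvell; John Heinlein, Chief Marketing Officer, Sonatus; and Simon Edelhaus, Vice President of Software, Automotive Business Unit, Marvell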

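The software-defined vehicle (SDV) is one of the newest and most interesting megatrends in the automotive industry. As noted in a previous blog, this new architecture and business model will succeed because it benefits all stakeholders:

- OEMs (car manufacturers) will gain new revenue streams from aftermarket services and new applications.

- Car owners will be able to easily upgrade their vehicle’s features and functionality.

- Mobile operators will profit from increased vehicle data consumption driven by new applications.

What is a software-defined vehicle? There is no official definition, but the term reflects a change in how software is used in vehicle design to enable flexibility and extensibility. To better understand the software-defined vehicle, it helps to first examine the current approach.

The embedded control units (ECUs) that manage today’s vehicle functions contain software, but the software in each ECU is often incompatible with, and isolated from, other modules. When an update is needed, the vehicle owner must visit a dealer service center, which is inconvenient for the owner and costly for the manufacturer.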

-

November 28, 2022

The Amazing Hack - Winning the Hearts of SONiC Users

By Kishore Atreya, Director of Product Management, Marvell

Recently, the Linux Foundation hosted its annual ONE Summit for open networking, edge projects and solutions. For the first time, this year’s event included a “mini-summit” for SONiC, an open-source network operating system targeted at data center applications that’s been widely adopted by cloud customers. A variety of industry members gave presentations, including Marvell’s very own Vijay Vyas Mohan, who presented on the topic of Extensible Platform Serdes Libraries. In addition, the SONiC mini-summit included a hackathon to motivate users and developers to innovate new ways to solve customer problems.

So, what could we hack?

At Marvell, we believe that SONiC has utility not only for the data center, but also for solutions that span from edge to cloud. Because it’s a data center network operating system (NOS), SONiC is not optimized for edge use cases. It requires an expensive bill of materials (BOM) to run, including a powerful CPU, a minimum of 8 to 16 GB of DDR memory, and an SSD. In the data center environment, these hardware resources contribute less to the BOM cost than do the optics and switch ASIC. However, for edge use cases with 1G to 10G interfaces, the cost of the processor complex, primarily driven by the NOS, can be a much more significant contributor to overall system cost. For edge disaggregation with SONiC to be viable, the hardware cost needs to be comparable to that of a typical OEM-based solution. Today, that’s not possible.