Archive for the 'Ethernet Switching' Category

-

September 11, 2023

Automotive Central Switches: The Latest Step in Vehicle Evolution

By Amir Bar-Niv, Vice President of Marketing, Automotive Business Unit, Marvell

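When people call a car a "data center on wheels," they are usually thinking about how individuals will experience enhanced digital capabilities in the vehicle, such as on-demand streaming media or new software-defined services that improve the driving experience.

But an important implication lies behind that statement. For a car to take on tasks that demand data center-like versatility, it has to be built like a data center. Automakers, working with hardware makers and software developers, need to develop a portfolio of highly specialized technologies that work together under similar architectural concepts, delivering the capabilities a software-defined vehicle requires while keeping power consumption and cost to a minimum. Striking that balance is no easy task.

This is where zonal architecture comes in, and in particular a new category of products: zonal switches and the associated automotive central Ethernet switches. Speakers, video screens and other infotainment devices link to the infotainment ECU, powertrain and brakes belong to the body domain, and the ADAS domain is built on sensors and high-performance processors. Bandwidth and security can be tailored to the application.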

-

March 02, 2023

Introducing the 51.2T Teralynx 10, the Industry’s Lowest Latency Programmable Switch

By Amit Sanyal, Senior Director, Product Marketing, Marvell

If you’re one of the 100+ million monthly users of ChatGPT—or have dabbled with Google’s Bard or Microsoft’s Bing AI—you’re proof that AI has entered the mainstream consumer market.

And what’s entered the consumer mass-market will inevitably make its way to the enterprise, an even larger market for AI. There are hundreds of generative AI startups racing to make it so. And those responsible for making these AI tools accessible—cloud data center operators—are investing heavily to keep up with current and anticipated demand.

Of course, it’s not just the latest AI language models driving the coming infrastructure upgrade cycle. Operators will pay equal attention to improving general purpose cloud infrastructure too, as well as take steps to further automate and simplify operations.

To help operators meet their scaling and efficiency objectives, today Marvell introduces Teralynx® 10, a 51.2 Tbps programmable 5nm monolithic switch chip designed to address the operator bandwidth explosion while meeting stringent power- and cost-per-bit requirements. It’s intended for leaf and spine applications in next-generation data center networks, as well as AI/ML and high-performance computing (HPC) fabrics.

A single Teralynx 10 replaces twelve of the 12.8 Tbps generation, the last to see widespread deployment. The resulting savings are impressive: 80% power reduction for equivalent capacity.
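The post does not spell out how the figure of twelve is reached. One plausible accounting, offered here only as an assumption, is a non-blocking two-tier leaf/spine fabric in which each older chip used as a leaf splits its capacity evenly between external ports and uplinks. The function name and the topology below are illustrative and are not taken from Marvell.

```python
def chips_for_two_tier_fabric(target_gbps: int, chip_gbps: int) -> tuple[int, int]:
    """Return (leaves, spines) for a non-blocking two-tier fabric.

    Assumption: each leaf chip dedicates half of its capacity to external
    ports and the other half to uplinks toward the spine tier.
    """
    leaf_external = chip_gbps // 2
    # Ceiling division: enough leaves to expose the target external capacity.
    leaves = -(-target_gbps // leaf_external)
    # The spine tier must absorb all of the leaf uplink capacity.
    spines = -(-(leaves * leaf_external) // chip_gbps)
    return leaves, spines


leaves, spines = chips_for_two_tier_fabric(51_200, 12_800)
print(leaves, spines, leaves + spines)  # 8 4 12
```

Under that assumption, matching 51.2 Tbps of external capacity takes eight leaf chips plus four spine chips, which agrees with the twelve quoted above.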

-

February 21, 2023

Marvell and Aviz Networks Collaborate to Advance SONiC Deployment in Cloud and Enterprise Data Centers

By Kant Deshpande, Director, Product Management, Marvell

Disaggregation is the future

Disaggregation, the decoupling of hardware and software, is arguably the future of networking. Disaggregation lets customers select best-of-breed hardware and software, enabling rapid innovation by separating the hardware and software development paths. Disaggregation started with server virtualization and is being adapted to storage and networking technology. In networking, disaggregation promises that any network operating system (NOS) can be integrated with any switch silicon. Open-source standards like ONIE allow a networking switch to load and install any NOS during the boot process.

SONiC: the Linux of networking OS

Software for Open Networking in the Cloud (SONiC) has been gaining momentum as the preferred open-source cloud-scale network operating system (NOS). In fact, Gartner predicts that by 2025, 40% of organizations that operate large data center networks (greater than 200 switches) will run SONiC in a production environment.[i] According to Gartner, due to readily expanding customer interest and a commercial ecosystem, there is a strong possibility SONiC will become analogous to Linux for networking operating systems in the next three to six years.

-

February 14, 2023

Three Things Next-Generation Data Centers Need from Networking

By Amit Sanyal, Senior Director, Product Marketing, Marvell

Data centers are arguably the most important buildings in the world. Virtually everything we do—from ordinary business transactions to keeping in touch with relatives and friends—is accomplished, or at least assisted, by racks of equipment in large, low-slung facilities.

And whether they know it or not, your family and friends are causing data center operators to spend more money. But it’s for a good cause: it allows your family and friends (and you) to continue their voracious consumption, purchasing and sharing of every kind of content—via the cloud.

Of course, it’s not only the personal habits of your family and friends that are causing operators to spend. The enterprise is equally responsible. They’re collecting data like never before, storing it in data lakes and applying analytics and machine learning tools—both to improve user experience, via recommendations, for example, and to process and analyze that data for economic gain. This is on top of the relentless, expanding adoption of cloud services.

-

January 18, 2023

Network Visibility of Industrial Networks Using Time-Sensitive Networking

By Zvi Shmilovici Leib, Distinguished Engineer, Marvell

Industry 4.0 is redefining how industrial networks behave and how they are operated. Industrial networks are mission-critical by nature and have always required timely delivery and deterministic behavior. With Industry 4.0, these networks are becoming artificial intelligence-based, automated and self-healing, as well. As part of this evolution, industrial networks are experiencing the convergence of two previously independent networks: information technology (IT) and operational technology (OT). Time Sensitive Networking (TSN) is facilitating this convergence by enabling the use of Ethernet standards-based deterministic latency to address the needs of both the IT and OT realms.

However, the transition to TSN brings new challenges and requires fresh solutions for industrial network visibility. In this blog, we will focus on the ways in which visibility tools are evolving to address the needs of both IT managers and those who operate the new time-sensitive networks.

Why do we need visibility tools in industrial networks?

Networks are at the heart of the Industry 4.0 revolution, ensuring nonstop industrial automation operation. These industrial networks operate 24/7, frequently in remote locations with minimal human presence. The primary users of the industrial network are not humans but, rather, machines that cannot “open tickets.” And, of course, these machines are even more diverse than their human analogs. Each application and each type of machine can be considered a unique user, with different needs and different network “expectations.”

-

November 08, 2022

TSN and Prestera DX1500: A Bridge Across the IT/OT Divide

By Reza Eltejaein, Director, Product Marketing, Marvell

Manufacturers, power utilities and other industrial companies stand to gain the most from digital transformation. Manufacturing and construction industries account for 37 percent of total energy used globally*, for instance, more than any other sector. By fine-tuning operations with AI, some manufacturers can reduce carbon emissions by up to 20 percent and save millions of dollars in the process.

Industry, however, remains relatively un-digitized, and gaps often exist between operational technology (the robots, furnaces and other equipment on factory floors) and the servers and storage systems that make up a company’s IT footprint. Without that linkage, organizations can’t take advantage of Industrial Internet of Things (IIoT) technologies, also referred to as Industry 4.0. Of the 232.6 million pieces of fixed industrial equipment installed in 2020, only 10 percent were IIoT-enabled.

Why the gap? IT often hasn’t been good enough. Plants operate on exacting specifications. Engineers and plant managers need a “live” picture of operations with continual updates on temperature, pressure, power consumption and other variables from hundreds, if not thousands, of devices. Dropped, corrupted or mis-transmitted data can lead to unanticipated downtime (a $50 billion-a-year problem) as well as injuries, blackouts, and even explosions.

To date, getting around these problems has required industrial applications to build around proprietary standards and/or complex component sets. These systems work—and work well—but they are largely cut off from the digital transformation unfolding outside the factory walls.

The new Prestera® DX1500 switch family is aimed squarely at bridging this divide, with Marvell extending its modern borderless enterprise offering into industrial applications. Based on the IEEE 802.1AS-2020 standard for Time-Sensitive Networking (TSN), Prestera DX1500 combines the performance requirements of industry with the economies of scale and pace of innovation of standards-based Ethernet technology. Additionally, we integrated the CPU and the switch—and in some models the PHY—into a single chip to dramatically reduce power, board space and design complexity.

Done right, TSN will lower the CapEx and OpEx for industrial technology, open the door to integrating Industry 4.0 practices and simplify the process of bringing new equipment to market.

-

December 06, 2021

Marvell and Ingrasys Collaborate to Power Ceph Clusters with EBOF in Data Centers

By Khurram Malik, Senior Manager, Technical Marketing, Marvell

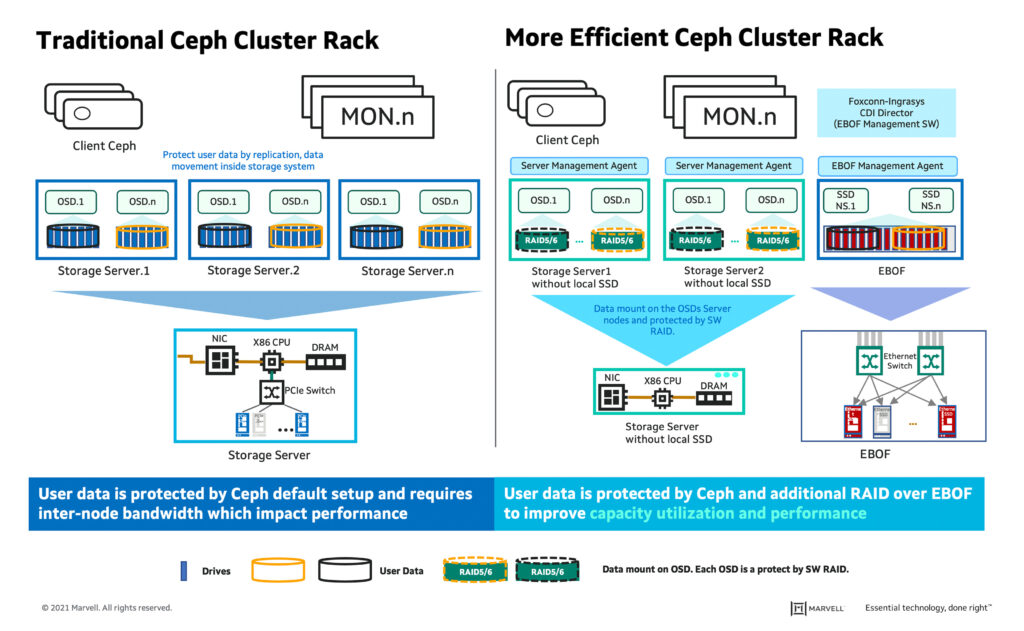

A massive amount of data is being generated at the edge, in the data center and in the cloud, driving scale-out Software-Defined Storage (SDS) which, in turn, is enabling the industry to modernize data centers for large-scale deployments. Ceph is an open-source, distributed object storage and massively scalable SDS platform, contributed to by a wide range of major high-performance computing (HPC) and storage vendors. Ceph BlueStore back-end storage removes the Ceph cluster performance bottleneck, allowing users to store objects directly on raw block devices and bypass the file system layer, which is especially critical in boosting the adoption of NVMe SSDs in the Ceph cluster. A Ceph cluster with EBOF provides a scalable, high-performance and cost-optimized solution and is a perfect use case for many HPC applications. Traditional data storage technology leverages special-purpose compute, networking, and storage hardware to optimize performance and requires proprietary software for management and administration. As a result, IT organizations can neither scale out nor feasibly deploy petabyte- or exabyte-scale data storage from a CAPEX and OPEX perspective.

Ingrasys (a subsidiary of Foxconn) is collaborating with Marvell to introduce an Ethernet Bunch of Flash (EBOF) storage solution which truly enables scale-out architecture for data center deployments. EBOF architecture disaggregates storage from compute, provides limitless scalability and better utilization of NVMe SSDs, and deploys single-ported NVMe SSDs in a high-availability configuration at the enclosure level with no single point of failure.

Ceph is deployed on commodity hardware and built on multi-petabyte storage clusters. It is highly flexible due to its distributed nature. EBOF use in a Ceph cluster enables added storage capacity to scale up and scale out at an optimized cost and facilitates high-bandwidth utilization of SSDs. A typical rack-level Ceph solution includes a networking switch for client and cluster connectivity; a minimum of 3 monitor nodes per cluster for high availability and resiliency; and Object Storage Daemon (OSD) hosts for data storage, replication, and data recovery operations. Traditionally, Ceph recommends 3 replicas at a minimum to distribute copies of the data and assure that the copies are stored on different storage nodes for replication, but this results in lower usable capacity and consumes higher bandwidth. Another challenge is that data redundancy and replication are compute-intensive and add significant latency. To overcome all these challenges, Ingrasys has introduced a more efficient Ceph cluster rack developed with management software, the Ingrasys Composable Disaggregate Infrastructure (CDI) Director.
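The capacity and bandwidth cost of 3-way replication mentioned above can be put into rough numbers. The sketch below is a simplified model, not a Ceph sizing tool: it assumes every object is stored `replicas` times and that the primary copy forwards the data to the remaining replicas over the cluster network. The function name and the sample figures are hypothetical.

```python
def replication_overhead(raw_tb: float, client_write_tb: float, replicas: int = 3):
    """Estimate usable capacity and write amplification under N-way replication.

    Assumption: each object is written `replicas` times in total, and
    (replicas - 1) of those copies travel over the cluster network.
    """
    usable_tb = raw_tb / replicas
    disk_writes_tb = client_write_tb * replicas
    cluster_traffic_tb = client_write_tb * (replicas - 1)
    return usable_tb, disk_writes_tb, cluster_traffic_tb


usable, disk_writes, cluster_traffic = replication_overhead(raw_tb=360, client_write_tb=10)
print(usable, disk_writes, cluster_traffic)  # 120.0 30 20
```

In this model, a rack with 360 TB of raw flash exposes 120 TB to clients, and every 10 TB written by clients becomes 30 TB of disk writes and 20 TB of replication traffic.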

-

November 09, 2021

Network Visibility of 5G Radio Access Networks, Part 2

By Gidi Navon, Senior Principal Architect, Marvell

In part one of this blog, we discussed the ways the Radio Access Network (RAN) is dramatically changing with the introduction of 5G networks and the growing importance of network visibility for mobile network operators. In part two of this blog, we’ll delve into resource monitoring and Open RAN monitoring, and further explain how Marvell’s Prestera® switches equipped with TrackIQ visibility tools can ensure the smooth operation of the network for operators.

Resource monitoring

Monitoring latency is a critical way to identify problems in the network that increase latency. However, if measured latency is high, it is already too late, as the radio networks have already started to degrade. The fronthaul network, in particular, is sensitive to even a small increase in latency. Therefore, mobile operators need to keep the fronthaul segment below the point of congestion, thus achieving extremely low latencies.

Visibility tools for Radio Access Networks need to measure the utilization of ports, making sure links never get congested. More precisely, they need to make sure the rate of the high priority queues carrying the latency sensitive traffic (such as eCPRI user plane data) is well below the allocated resources for such a traffic class.

A common mistake is measuring rates on long intervals. Imagine a traffic scenario over a 100GbE link, as shown in Figure 1, with quiet intervals and busy intervals. Checking the rate over long intervals of seconds will only reveal the average port utilization of 25%, giving the false impression that the network has high margins, without noticing the peak rate. The peak rate, which is close to 100%, can easily lead to egress queue congestion, resulting in buffer buildup and higher latencies.
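The effect of the measurement interval can be reproduced with a small simulation. This is a minimal sketch under stated assumptions: traffic is recorded as bits per 1 ms bin on a 100GbE link, and the burst pattern (1 ms at line rate followed by 3 ms idle) is hypothetical, chosen only so that the long-term average comes out at 25%. It is not a description of how TrackIQ measures rates.

```python
BITS_PER_BIN = 100_000_000  # bits a 100 Gb/s link can carry in one 1 ms bin


def utilization(bits_per_bin, bins_per_window):
    """Return link utilization (0.0 to 1.0) for each consecutive window."""
    result = []
    for start in range(0, len(bits_per_bin), bins_per_window):
        window = bits_per_bin[start:start + bins_per_window]
        capacity = BITS_PER_BIN * len(window)
        result.append(sum(window) / capacity)
    return result


# Hypothetical burst pattern: 1 ms at line rate, then 3 ms of silence.
traffic = [BITS_PER_BIN, 0, 0, 0] * 250  # 1000 bins = 1 second

long_view = utilization(traffic, 1000)  # one 1-second window
short_view = utilization(traffic, 1)    # one thousand 1 ms windows

print(long_view[0])     # 0.25
print(max(short_view))  # 1.0
```

The one-second window reports a comfortable 25% utilization, while the 1 ms windows expose bursts that fill the link completely, which is the condition that leads to egress queue buildup.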

-

October 18, 2021

Network Visibility of 5G Radio Access Networks, Part 1

By Gidi Navon, Senior Principal Architect, Marvell

The Radio Access Network (RAN) is dramatically changing with the introduction of 5G networks and this, in turn, is driving home the importance of network visibility. Visibility tools are essential for mobile network operators to guarantee the smooth operation of the network and for providing mission-critical applications to their customers.

In this blog, we will demonstrate how Marvell’s Prestera® switches equipped with TrackIQ visibility tools are evolving to address the unique needs of such networks.

The changing RAN

The RAN is the portion of a mobile system that spans from the cell tower to the mobile core network. Until recently, it was built from vendor-developed interfaces like CPRI (Common Public Radio Interface) and typically delivered as an end-to-end system by one RAN vendor in each contiguous geographic area.

Lately, with the introduction of 5G services, the RAN is undergoing several changes as shown in Figure 1 below:

-

October 11, 2021

Trends Driving Innovation in Next-Generation Retail Networking

By Amit Thakkar, Senior Director, Product Management, Marvell

The retail segment of the global economy has been one of the hardest hit by the Covid-19 pandemic. Lockdowns shuttered stores for extended periods, while social distancing measures significantly impacted foot traffic in these spaces. Now, as consumer demand has shifted rapidly from physical to virtual stores, the sector is looking to reinvent itself and apply lessons learned from the pandemic. One important piece of knowledge that has surfaced across the retail industry: Investing in critical data infrastructure is a must in order to rapidly accommodate changes in consumption patterns.

Consumers have become much more conscious of the digital experience and, as such, prefer a seamless transition in shopping experiences across both virtual and brick-and-mortar stores. Retailers are revisiting investment in network infrastructure to ensure that the network is “future-proofed” to withstand consumer demand swings. It will be critical to offer new customer-focused, personalized experiences such as cashier-less stores and smart shopping in a manner that is secure, resilient, and high performance. Infrastructure companies will need to be able to bring a complete set of technology options to meet the digital transformation needs of the modern distributed enterprise.

Highlighted below are five emerging technology trends in enterprise networking that are driving innovations in the retail industry to build the modern store experience.

-

October 03, 2021

Unleashing 5G Network Performance with Next-Generation Ethernet

By Alik Fishman, Director of Product Management, Marvell

Blink your eyes. That’s how fast data will travel from your future 5G-enabled device, over the network to a server and back. Like Formula 1 racing cars needing special tracks for optimal performance, 5G requires agile networking transport infrastructure to unleash its full potential. The 5G radio access network (RAN) requires not only base stations with higher throughputs and soaring speeds but also an advanced transport network, capable of securely delivering fast response times to mobile end points, whatever those might be: phones, cars or IoT devices. Radio site densification and Massive Machine-type Communication (mMTC) technology are rapidly scaling the mobile network to support billions of end devices[1], amplifying the key role of network transport to enable instant and reliable connectivity.

With Ethernet being adopted as the most efficient transport technology, carrier routers and switches are tasked to support a variety of use cases over shared infrastructure, driving the growth in Ethernet gear installations. In traditional cellular networks, baseband and radio resources were co-located and dedicated at each cell site. This created significant challenges to support growth and shifts in traffic patterns with available capacity. With the emergence of more flexible centralized architectures such as C-RAN, baseband processing resources are pooled in base station hubs called central units (CUs) and distributed units (DUs) and dynamically shared with remote radio units (RUs). This creates even larger concentrations of traffic to be moved to and from these hubs over the network transport.

-

January 14, 2021

What’s Next in System Integration and Packaging? New Approaches to Networking and Cloud Data Center Chip Design

By Wolfgang Sauter, Customer Solutions Architect - Packaging, Marvell

The continued evolution of 5G wireless infrastructure and high-performance networking is driving the semiconductor industry to unprecedented technological innovations, signaling the end of traditional scaling on Single-Chip Module (SCM) packaging. With the move to 5nm process technology and beyond, 50T Switches, 112G SerDes and other silicon design thresholds, it seems that we may have finally met the end of the road for Moore’s Law.[1] The remarkable and stringent requirements coming down the pipe for next-generation wireless, compute and networking products have all created the need for more innovative approaches. So what comes next to keep up with these challenges? Novel partitioning concepts and integration at the package level are becoming game-changing strategies to address the many challenges facing these application spaces.

During the past two years, leaders in the industry have started to embrace these new approaches to modular design, partitioning and package integration. In this paper, we will look at what is driving the main application spaces and how packaging plays into next-generation system architectures, especially as it relates to networking and cloud data center chip design.

-

November 01, 2020

Superior Performance in the Borderless Enterprise - White Paper

By Gidi Navon, Senior Principal Architect, Marvell

The current environment and an expected “new normal” are driving the transition to a borderless enterprise that must support increasing performance requirements and evolving business models. The infrastructure is seeing growth in the number of endpoints (including IoT) and escalating demand for data such as high-definition content. Ultimately, wired and wireless networks are being stretched as data-intensive applications and cloud migrations continue to rise.

-

October 20, 2020

Network Visibility in the Borderless Enterprise - White Paper

By Gidi Navon, Senior Principal Architect, Marvell

-

July 28, 2020

Living on the Network Edge: Security

By Alik Fishman, Director of Product Management, Marvell

In our series Living on the Network Edge, we have looked at the trends driving Intelligence, Performance and Telemetry to the network edge. In this installment, let’s look at the changing role of network security and the ways integrating security capabilities in network access can assist in effectively streamlining policy enforcement, protection, and remediation across the infrastructure.

Cybersecurity threats are now a daily struggle for businesses experiencing a huge increase in hacked and breached data from sources increasingly common in the workplace like mobile and IoT devices. Not only is the number of security breaches going up, but breaches are also increasing in severity and duration, with the average lifecycle from breach to containment lasting nearly a year[1] and presenting expensive operational challenges. With the digital transformation and emerging technology landscape (remote access, cloud-native models, proliferation of IoT devices, etc.) dramatically impacting networking architectures and operations, new security risks are introduced. To address this, enterprise infrastructure is on the verge of a remarkable change, elevating network intelligence, performance, visibility and security[2].

-

July 23, 2020

Telemetry: Can You See the Edge?

By Suresh Ravindran, Senior Director, Software Engineering

So far in our series Living on the Network Edge, we have looked at trends driving Intelligence and Performance to the network edge. In this blog, let’s look into the need for visibility into the network.

As automation trends evolve, the number of connected devices is seeing explosive growth. IDC estimates that there will be 41.6 billion connected IoT devices generating a whopping 79.4 zettabytes of data in 2025[1]. A significant portion of this traffic will be video flows and sensor traffic which will need to be intelligently processed for applications such as personalized user services, inventory management, intrusion prevention and load balancing across a hybrid cloud model. Networking devices will need to be equipped with the ability to intelligently manage processing resources to efficiently handle huge amounts of data flows.

-

July 16, 2020

The Need for Speed at the Edge

By George Hervey, Principal Architect, Marvell Semiconductor

In the previous TIPS to Living on the Edge, we looked at the trend of driving network intelligence to the edge. With the capacity enabled by the latest wireless networks, like 5G, the infrastructure will enable the development of innovative applications. These applications often employ a high-frequency activity model, for example video or sensors, where the activities are often initiated by the devices themselves generating massive amounts of data moving across the network infrastructure. Cisco’s VNI Forecast Highlights predicts that global business mobile data traffic will grow six-fold from 2017 to 2022, or at an annual growth rate of 42 percent[1], requiring a performance upgrade of the network.
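The relationship between a total growth multiple and an annual growth rate is a compound-growth calculation. The short sketch below, with illustrative function names, shows the arithmetic in both directions. It suggests that "six-fold" is a rounded figure, since 42 percent compounded over five years gives a multiple a little under six.

```python
def annual_growth_rate(growth_multiple: float, years: int) -> float:
    """Compound annual growth rate implied by a total growth multiple."""
    return growth_multiple ** (1 / years) - 1


def growth_multiple(annual_rate: float, years: int) -> float:
    """Total growth multiple implied by a compound annual growth rate."""
    return (1 + annual_rate) ** years


# Five years of growth, 2017 to 2022.
print(round(annual_growth_rate(6, 5), 3))  # 0.431
print(round(growth_multiple(0.42, 5), 2))  # 5.77
```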

-

July 08, 2020

Driving Network Intelligence and Processing to the Edge

By George Hervey, Principal Architect, Marvell Semiconductor

The mobile phone has become such an essential part of our lives as we move towards more advanced stages of the “always on, always connected” model. Our phones provide instant access to data and communication mediums, and that access influences the decisions we make and ultimately, our behavior.

According to Cisco, global mobile networks will support more than 12 billion mobile devices and IoT connections by 2022.[1] And these mobile devices will support a variety of functions. Already, our phones replace gadgets and enable services. Why carry around a wallet when your phone can provide Apple Pay, Google Pay or make an electronic payment? Who needs to carry car keys when your phone can unlock and start your car or open your garage door? Applications now also include live streaming services that enable VR/AR experiences and sharing in real time. While future services and applications seem unlimited to the imagination, they require next-generation data infrastructure to support and facilitate them.

-

April 01, 2019

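Revolutionizing Data Center Architecture and Ushering in a New Era of Connected Intelligence

By George Hervey, Principal Architect, Marvell Semiconductor

The established megascale cloud data center architecture has served global data demands well for many years, but a fundamental shift is now under way. With 5G, industrial automation, smart cities and autonomous vehicles, for example, there is a growing need for data to be directly accessible at the network edge. Data centers need a new architecture to support new requirements such as lower power consumption, low latency, smaller footprints and composable infrastructure.

Composability provides a more flexible and efficient platform for meeting data center requirements by disaggregating data storage resources. It does, however, require a state-of-the-art switch solution to support it. The Marvell® Prestera CX 8500 Ethernet switch family, capable of operating at 12.8 Tbps, offers two key innovations for redefining data center architecture: Forwarding Architecture using Slices of Terabit Ethernet Routers (FASTER) technology and Storage Aware Flow Engine (SAFE) technology.

With FASTER and SAFE technologies, the Marvell® Prestera CX 8500 family reduces overall network costs by more than 50%. Lower power consumption, a smaller footprint and lower latency are further important benefits. And because complete per-flow visibility is built in, it is possible to pinpoint exactly where congestion problems are occurring.

Watch the video below to see the innovative approach that Marvell Prestera CX 8500 devices bring to data center architecture.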

-

March 06, 2019

Composable Infrastructure: Exciting New Prospects for Ethernet Switching

By George Hervey, Principal Architect, Marvell Semiconductor

The data center networking landscape is set to change dramatically. More adaptive and operationally efficient composable infrastructure will soon start to see significant uptake, supplanting the traditional inflexible, siloed data center arrangements of the past and ultimately leading to universal adoption.

Composable infrastructure takes a modern software-defined approach to data center implementations. This means that rather than having to build dedicated storage area networks (SANs), a more versatile architecture can be employed, through utilization of NVMe and NVMe-over-Fabrics protocols.

Whereas previously data centers had separate resources for each key task, composable infrastructure enables compute, storage and networking capacity to be pooled together, with each function being accessible via a single unified fabric. This brings far greater operational efficiency levels, with better allocation of available resources and less risk of over-provisioning, which is critical as edge data centers are introduced to the network, offering solutions for different workload demands.

Composable infrastructure will be highly advantageous to the next wave of data center implementations, though the increased degree of abstraction that comes with it presents certain challenges. These mainly concern acute network congestion, especially in multiple-host scenarios. Serious congestion issues can occur, for example, when there are several hosts attempting to retrieve data from a particular part of the storage resource simultaneously. Such problems will be exacerbated in larger scale deployments, where there are several network layers that need to be considered and the degree of visibility is thus more restricted.

There is a pressing need for a more innovative approach to data center orchestration. A major streamlining of the network architecture will be required to support the move to composable infrastructure, with fewer network layers involved, thereby enabling greater transparency and resulting in less congestion.

This new approach will simplify data center implementations, thus requiring less investment in expensive hardware, while at the same time offering greatly reduced latency levels and power consumption.

Further, the integration of advanced analytical mechanisms is certain to be of huge value here as well, helping with more effective network management and facilitating network diagnostic activities. Storage and compute resources will be better allocated to where there is the greatest need. Stranded capacity will no longer be a heavy financial burden.

Through the application of a more optimized architecture, data centers will be able to fully embrace the migration to composable infrastructure. Network managers will have a much better understanding of what is happening right down at the flow level, so that appropriate responses can be deployed in a timely manner. Future investments will be directed to the right locations, optimizing system utilization.

-

June 07, 2018

Versatile New Ethernet Switch Simultaneously Addresses Multiple Industry Sectors

By Ran Gu, Marketing Director of Switching Product Line, Marvell

Due to ongoing technological progression and underlying market dynamics, Gigabit Ethernet (GbE) technology with 10 Gigabit uplink speeds is starting to proliferate into the networking infrastructure across a multitude of different applications where elevated levels of connectivity are needed: SMB switch hardware, industrial switching hardware, SOHO routers, enterprise gateways and uCPEs, to name a few. The new Marvell® Link Street™ 88E6393X, which has a broad array of functionality, scalability and cost-effectiveness, provides a compelling switch IC solution with the scope to serve multiple industry sectors.

The 88E6393X switch IC incorporates both 1000BASE-T PHY and 10 Gbps fiber port capabilities, while requiring only 60% of the power budget necessitated by competing solutions. Despite its compact package, this new switch IC offers 8 triple speed (10/100/1000) Ethernet ports, plus 3 XFI/SFI ports, and has a built-in 200 MHz microprocessor. Its SFI support means that the switch can connect to a fiber module without the need to include an external PHY - thereby saving space and bill-of-materials (BoM) costs, as well as simplifying the design. It complies with the IEEE 802.1BR port extension standard and can also play a pivotal role in lowering the management overhead and keeping operational expenditures (OPEX) in check. In addition, it includes L3 routing support for IP forwarding purposes.

Adherence to the latest time sensitive networking (TSN) protocols (such as 802.1AS, 802.1Qat, 802.1Qav and 802.1Qbv) enables delivery of the low latency operation mandated by industrial networks. The 256 entry ternary content-addressable memory (TCAM) allows for real-time, deep packet inspection (DPI) and policing of the data content being transported over the network (with access control and policy control lists being referenced). The denial of service (DoS) prevention mechanism is able to detect illegal packets and mitigate the security threat of DoS attacks. The 88E6393X device, working in conjunction with a high performance ARMADA® network processing system-on-chip (SoC), can offload some of the packet processing activities so that the CPU’s bandwidth can be better focused on higher level activities. Data integrity is upheld, thanks to the quality of service (QoS) support across 8 traffic classes. In addition, the switch IC presents a scalable solution. The 10 Gbps interfaces provide non-blocking uplink to make it possible to cascade several units together, thus creating higher port count switches (16, 24, etc.).

This new product release features a combination of small footprint, lower power consumption, extensive security and inherent flexibility to bring a highly effective switch IC solution for the SMB, enterprise, industrial and uCPE space.

-

February 22, 2018

Marvell Demonstrates CyberTAN White Box Solution Combining the Marvell ARMADA 8040 SoC and the NFVTime Universal CPE OS at Mobile World Congress (MWC) 2018

By Maen Suleiman, Senior Software Product Line Manager, Marvell

As more workloads are moving to the edge of the network, Marvell continues to advance technology that will enable the communication industry to benefit from the huge potential that network function virtualization (NFV) holds. At this year’s Mobile World Congress (Barcelona, 26th Feb to 1st Mar 2018), Marvell, along with some of its key technology collaborators, will be demonstrating a universal CPE (uCPE) solution that will enable telecom operators, service providers and enterprises to deploy needed virtual network functions (VNFs) to support their customers’ demands.

The ARMADA® 8040 uCPE solution, one of several ARMADA edge computing solutions to be introduced to the market, will be located at the Arm booth (Hall 6, Stand 6E30) and will run Telco Systems NFVTime uCPE operating system (OS) with two deployed off-the-shelf VNFs provided by 6WIND and Trend Micro, respectively, that enable virtual routing and security functionalities. The CyberTAN white box solution is designed to bring significant improvements in both cost effectiveness and system power efficiency compared to traditional offerings while also maintaining the highest degrees of security.

CyberTAN white box solution incorporating Marvell ARMADA 8040 SoC

The CyberTAN white box platform comprises several key Marvell technologies that bring an integrated solution designed to enable significant hardware cost savings. The platform incorporates the power-efficient Marvell® ARMADA 8040 system-on-chip (SoC) based on the Arm Cortex®-A72 quad-core processor, with up to 2GHz CPU clock speed, and an on-board Marvell E6390x Link Street® Ethernet switch. The Marvell Ethernet switch supports a 10G uplink and 8 x 1GbE ports with integrated PHYs, four of which are auto-media GbE ports (combo ports).

The CyberTAN white box benefits from the Marvell ARMADA 8040 processor’s rich feature set and robust software ecosystem, including:

- both commercial and industrial grade offerings

- dual 10G connectivity, 10G Crypto and IPSEC support

- SBSA compliance

- Arm TrustZone support

- broad software support from the following: UEFI, Linux, DPDK, ODP, OPTEE, Yocto, OpenWrt, CentOS and more

In addition, the uCPE platform supports Mini PCI Express (mPCIe) expansion slots that can enable Marvell advanced 11ac/11ax Wi-Fi or additional wired/wireless connectivity, up to 16GB DDR4 DIMM, 2 x M.2 SATA, one SATA and eMMC options for storage, SD and USB expansion slots for additional storage or other wired/wireless connectivity such as LTE.

At the Arm booth, Telco Systems will demonstrate its NFVTime uCPE operating system on the CyberTAN white box, including its zero-touch provisioning (ZTP) feature. NFVTime is an intuitive NFVi-OS that facilitates the entire process of deploying VNFs onto the uCPE, and avoids the complex and frustrating management and orchestration activities normally associated with putting NFV-based services into action. The demonstration will include two main VNFs:

- A 6WIND virtual router VNF based on 6WIND Turbo Router which provides high performance, ready-to-use virtual routing and firewall functionality; and

- A Trend Micro security VNF based on Trend Micro’s Virtual Network Function Suite (VNFS) that offers elastic and high-performance network security functions which provide threat defense and enable more effective and faster protection.

Please contact your Marvell sales representative to arrange a meeting at Mobile World Congress or drop by the Arm booth (Hall 6, Stand 6E30) during the conference to see the uCPE solution in action.

-

September 18, 2017

Improving the Cost Efficiency of Data Center Upgrades with Modular Networking

By Yaron Zimmerman, Senior Staff Product Line Manager, Marvell

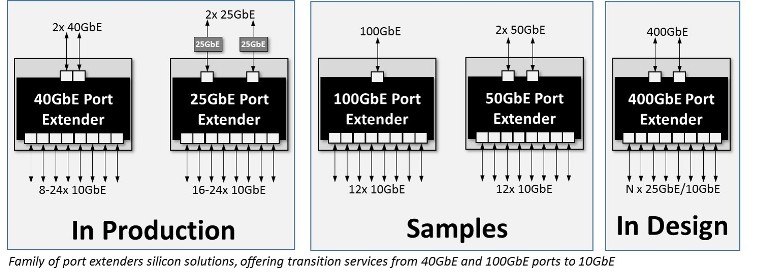

Exponential growth in data center usage has been responsible for driving a huge amount of investment in the networking infrastructure used to connect virtualized servers to the multiple services they now need to accommodate. To support the server-to-server traffic that virtualized data centers require, the networking spine will generally rely on high capacity 40 Gbit/s and 100 Gbit/s switch fabrics with aggregate throughputs now hitting 12.8 Tbit/s. But the ‘one size fits all’ approach being employed to develop these switch fabrics quickly leads to a costly misalignment for data center owners. They need to find ways to match the interfaces on individual storage units and server blades that have already been installed with the switches they are buying to support their scale-out plans.

The top-of-rack (ToR) switch provides one way to match the demands of the server equipment and the network infrastructure. The switch can aggregate the data from lower speed network interfaces and so act as a front-end to the core network fabric. But such switches tend to be far more complex than is actually needed - often derived from older generations of core switch fabric. They perform a level of switching that is unnecessary and, as a result, are not cost effective when they are primarily aggregating traffic on its way to the core network’s 12.8 Tbit/s switching engines. The heightened expense manifests itself not only in terms of hardware complexity and the issues of managing an extra network tier, but also in relation to power and air-conditioning. It is not unusual to find five or more fans inside each unit being used to cool the silicon switch. There is another way to support the requirements of data center operators, one which consumes far less power and money while offering greater modularity and flexibility.

Providing a means by which to overcome the high power and cost associated with traditional ToR switch designs, the IEEE 802.1BR standard for port extenders makes it possible to implement a bridge between a core network interface and a number of port extenders that break out connections to individual edge devices. An attractive feature of this standard is the ability to allow port extenders to be cascaded, for even greater levels of modularity. As a result, many lower speed ports, of 1 Gbit/s and 10 Gbit/s, can be served by one core network port (supporting 40 Gbit/s or 100 Gbit/s operation) through a single controlling bridge device.
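As a rough illustration of the fan-out described above, the sketch below estimates how many lower speed ports one core network port could serve. The function name and the `oversubscription` parameter are assumptions made for this example; they are not part of the 802.1BR standard, and a real design would choose the ratio based on its traffic profile.

```python
def downstream_ports(uplink_gbps: float, port_gbps: float,
                     oversubscription: float = 1.0) -> int:
    """Estimate how many edge ports a single uplink can serve.

    oversubscription=1.0 models a non-blocking design; a value of 3.0
    means the edge ports may collectively offer three times the uplink
    capacity (a hypothetical design choice, not a standard value).
    """
    if uplink_gbps <= 0 or port_gbps <= 0 or oversubscription <= 0:
        raise ValueError("all arguments must be positive")
    return int(uplink_gbps * oversubscription // port_gbps)


# One 100 Gbit/s core port, 10 Gbit/s edge ports, non-blocking
ten_gig_ports = downstream_ports(100, 10)

# One 40 Gbit/s core port, 1 Gbit/s edge ports, non-blocking
one_gig_ports = downstream_ports(40, 1)
```

With a non-blocking ratio, the 100 Gbit/s case yields 10 edge ports and the 40 Gbit/s case yields 40; raising the assumed oversubscription ratio increases the count proportionally.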

With a simpler, more modular approach, the passive intelligent port extender (PIPE) architecture that has been developed by Marvell leads to next generation rack units which no longer call for the inclusion of any fans for thermal management purposes. Reference designs have already been built that use a simple 65W open-frame power supply to feed all the devices required, even in a high-capacity configuration with 48 ports of 10 Gbit/s. Furthermore, the equipment dispenses with the need for external management. The management requirements can move to the core 12.8 Tbit/s switch fabric, providing further savings in terms of operational expenditure. It is a demonstration of exactly how a more modular approach can greatly improve the efficiency of today's and tomorrow's data center implementations.

-

July 17, 2017

Rightsizing Ethernet

By George Hervey, Principal Architect, Marvell Semiconductor

Implementation of cloud infrastructure is occurring at a phenomenal rate, outpacing Moore's Law. Annual growth is believed to be 30x and as much as 100x in some cases. In order to keep up, cloud data centers are having to scale out massively, with hundreds, or even thousands of servers becoming a common sight.

At this scale, networking becomes a serious challenge. More and more switches are required, thereby increasing capital costs, as well as management complexity. To tackle the rising expense issues, network disaggregation has become an increasingly popular approach. By separating the switch hardware from the software that runs on it, vendor lock-in is reduced or even eliminated. OEM hardware could be used with software developed in-house, or from third party vendors, so that cost savings can be realized.

Though network disaggregation has tackled the immediate problem of hefty capital expenditures, it must be recognized that operating expenditures are still high. The number of managed switches basically stays the same. To reduce operating costs, the issue of network complexity has to also be tackled.

Network Disaggregation

Almost every application we use today, whether at home or in the work environment, connects to the cloud in some way. Our email providers, mobile apps, company websites, virtualized desktops and servers, all run on servers in the cloud.

For these cloud service providers, this incredible growth has been both a blessing and a challenge. As demand increases, Moore's law has struggled to keep up. Scaling data centers today involves scaling out - buying more compute and storage capacity, and subsequently investing in the networking to connect it all. The cost and complexity of managing everything can quickly add up.

Until recently, networking hardware and software had often been tied together. Buying a switch, router or firewall from one vendor would require you to run their software on it as well. Larger cloud service providers saw an opportunity. These players often had no shortage of skilled software engineers. At the massive scales they ran at, they found that buying commodity networking hardware and then running their own software on it would save them a great deal in terms of Capex.

This disaggregation of the software from the hardware may have been financially attractive; however, it did nothing to address the complexity of the network infrastructure. There was still a great deal of room to optimize further.

802.1BR

Today's cloud data centers rely on a layered architecture, often in a fat-tree or leaf-spine structural arrangement. Rows of racks, each with top-of-rack (ToR) switches, are then connected to upstream switches on the network spine. The ToR switches are, in fact, performing simple aggregation of network traffic. Using relatively complex, energy consuming switches for this task results in a significant capital expense, as well as management costs and no shortage of headaches.

Through the port extension approach, outlined within the IEEE 802.1BR standard, the aim has been to streamline this architecture. By replacing ToR switches with port extenders, port connectivity is extended directly from the rack to the upstream. Management is consolidated into the smaller number of switches located at the upper-layer network spine, eliminating the dozens or possibly hundreds of managed switches at the rack level.
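To make the management saving concrete, here is a minimal sketch that counts the switches an operator has to manage under each design. The rack and spine counts are hypothetical, and the model makes a simplifying assumption: port extenders are treated as unmanaged devices that are configured entirely through their controlling bridge.

```python
def managed_switches(racks: int, spine_switches: int,
                     tor_per_rack: int = 1,
                     port_extenders: bool = False) -> int:
    """Count individually managed switches in a two-tier network.

    With conventional ToR switches, every rack-level switch is a managed
    entity. With port extenders (simplifying assumption), only the
    upstream spine switches acting as controlling bridges are managed.
    """
    if racks < 0 or spine_switches < 0 or tor_per_rack < 0:
        raise ValueError("counts must be non-negative")
    if port_extenders:
        return spine_switches
    return spine_switches + racks * tor_per_rack


# Hypothetical data center: 200 racks, 8 spine switches
with_tor = managed_switches(200, 8)
with_extenders = managed_switches(200, 8, port_extenders=True)
```

For this made-up layout the managed count drops from 208 to 8, which is the kind of reduction the paragraph above describes.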

The reduction in switch management complexity of the port extender approach has been widely recognized, and various network switches on the market now comply with the 802.1BR standard. However, not all the benefits of this standard have actually been realized.

The Next Step in Network Disaggregation

Though many of the port extenders on the market today fulfill 802.1BR functionality, they do so using legacy components. Instead of being optimized for 802.1BR itself, they rely on traditional switches. This, as a consequence, undermines the potential cost and power benefits that the new architecture offers.

Designed from the ground up for 802.1BR, Marvell's Passive Intelligent Port Extender (PIPE) offering is specifically optimized for this architecture. PIPE is interoperable with 802.1BR compliant upstream bridge switches from all the industry’s leading OEMs. It enables fan-less, cost efficient port extenders to be deployed, which thereby provide upfront savings as well as ongoing operational savings for cloud data centers. Power consumption is lowered and switch management complexity is reduced by an order of magnitude.

The first wave in network disaggregation was separating switch software from the hardware that it ran on. 802.1BR's port extender architecture is bringing about the second wave, where ports are decoupled from the switches which manage them. The modular approach to networking discussed here will result in lower costs, reduced energy consumption and greatly simplified network management.

-

May 31, 2017

Further Empowering the Wireless Office

By Yaron Zimmerman, Senior Staff Product Line Manager, Marvell

In order to benefit from the greater convenience offered for employees and more straightforward implementation, office environments are steadily migrating towards wholesale wireless connectivity. Thanks to this, office staff will no longer be limited by where there are cables/ports available, resulting in a much higher degree of mobility. It will mean that they can remain constantly connected and their work activities won’t be hindered - whether they are at their desk, in a meeting or even in the cafeteria. This will make enterprises much better aligned with our modern working culture - where hot desking and bring your own device (BYOD) are becoming increasingly commonplace.

The main dynamic which is going to be responsible for accelerating this trend will be the emergence of 802.11ac Wave 2 Wi-Fi technology. With the prospect of exploiting Gigabit data rates (thereby enabling the streaming of video content, faster download speeds, higher quality video conferencing, etc.), it is clearly going to have considerable appeal. In addition, this protocol offers extended range and greater bandwidth through multi-user MIMO operation - so that a larger number of users can be supported simultaneously. This will be advantageous to the enterprise, as fewer access points per user will be required.

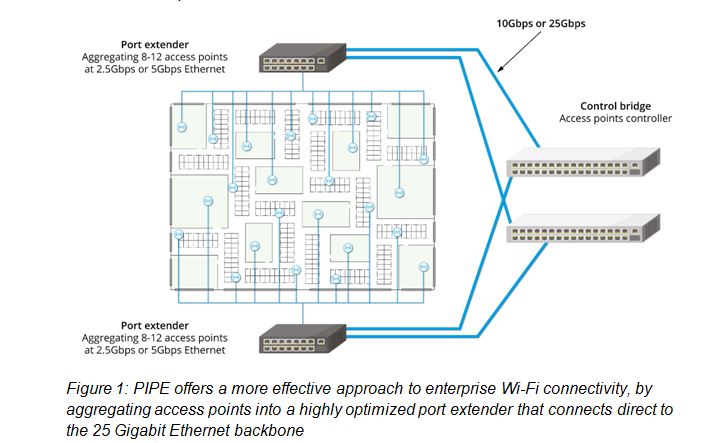

An example of the office floorplan for an enterprise/campus is shown in Figure 1 (with a large number of cubicles and some meeting rooms). Though scenarios vary, generally speaking an enterprise/campus is likely to occupy a total floor space of between 20,000 and 45,000 square feet. With one 802.11ac access point able to cover an area of 3000 to 4000 square feet, a wireless office would need a total of about 8 to 12 access points to be fully effective. This density should be more than acceptable for average voice and data needs. Supporting these access points will be a high capacity wireline backbone.
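The access point estimate above is essentially floor area divided by per-access-point coverage. The sketch below reproduces that arithmetic; the function name is an assumption for illustration, and the calculation ignores walls, interference and user density, all of which matter in a real site survey.

```python
import math


def access_points_needed(floor_area_sqft: float, coverage_sqft: float) -> int:
    """Round up floor area / coverage to a whole number of access points."""
    if floor_area_sqft <= 0 or coverage_sqft <= 0:
        raise ValueError("area and coverage must be positive")
    return math.ceil(floor_area_sqft / coverage_sqft)


# Smallest office with the best coverage per access point
lower_bound = access_points_needed(20_000, 4_000)

# Largest office with the worst coverage per access point
upper_bound = access_points_needed(45_000, 3_000)

# Mid-range office with mid-range coverage
typical = access_points_needed(32_500, 3_500)
```

The extremes work out to 5 and 15 access points, and the mid-range case to 10, so the figure of about 8 to 12 quoted above sits in the middle of the range implied by the stated floor areas and coverage.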

Increasingly, rather than employing traditional 10 Gigabit Ethernet infrastructure, the enterprise/campus backbone is going to be based on 25 Gigabit Ethernet technology. It is expected that this will see widespread uptake in newly constructed office buildings over the next 2-3 years as the related optics continue to become more affordable. Clearly enterprises want to tap into the enhanced performance offered by 802.11ac, but they have to do this while adhering to stringent budgetary constraints. As the data capacity at the backbone is raised, so will the complexity of the hierarchical structure that needs to be placed underneath it, consisting of extensive intermediary switching technology. Well, that’s what conventional thinking would tell us.

Before embarking on a 25 Gigabit Ethernet/802.11ac implementation, enterprises have to be fully aware of what all this entails. As well as the initial investment associated with the hardware-heavy arrangement just outlined, there are also the ongoing operational costs to consider. By aggregating the access points into a port extender that connects directly to the 25 Gigabit Ethernet backbone and on towards a central control bridge switch, it is possible to significantly simplify the hierarchical structure - effectively eliminating a layer of unneeded complexity from the system.

Through its Passive Intelligent Port Extender (PIPE) technology Marvell is doing just that. This product offering is unique to the market, as other port extenders currently available were not originally designed for that purpose and therefore exhibit compromises in their performance, price and power. PIPE is, in contrast, an optimized solution that is able to fully leverage the IEEE 802.1BR bridge port extension standard - dispensing with the need for expensive intermediary switches between the control bridge and the access point level and reducing the roll-out costs as a result. It delivers markedly higher throughput, as the aggregating of multiple 802.11ac access points to 10 Gigabit Ethernet switches has been avoided. With fewer network elements to manage, there is some reduction in terms of the ongoing running costs too.

PIPE means that enterprises can future proof their office data communication infrastructure - starting with 10 Gigabit Ethernet, then upgrading to 25 Gigabit Ethernet when it is needed. The number of ports that it incorporates is a good match for the number of access points that an enterprise/campus will need to address the wireless connectivity demands of its work force. It enables dual homing functionality, so that elevated service reliability and resiliency are both assured through system redundancy. In addition, its support for Power-over-Ethernet (PoE) allows access points to connect to both a power supply and the data network through a single cable - further facilitating the deployment process.

-

April 03, 2017

How the Introduction of the Cell Phone Sparked Today’s Data Demands

By Sander Arts, Interim VP of Marketing, Marvell

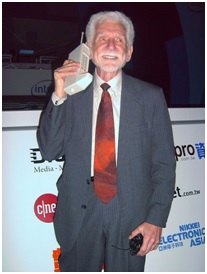

Almost 44 years ago on April 3, 1973, an engineer named Martin Cooper walked down a street in Manhattan with a brick-shaped device in his hand and made history’s very first cell phone call. Weighing an impressive 2.5 pounds and standing 11 inches tall, the world’s first mobile device featured a single-line, text-only LED display screen.

Credit: Wikipedia

A lot has changed since then. Phones have gotten smaller, faster and smarter, innovating at a pace that would have been unimaginable four decades ago. Today, phone calls are just one of the many capabilities that we expect from our mobile devices, in addition to browsing the internet, watching videos, finding directions, engaging in social media and more. All of these activities require the rapid movement and storage of data, drawing closer parallels to the original PC than Cooper’s 2.5 pound prototype. And that’s only the beginning – the demand for data has expanded far past mobile.

Data Demands: to Infinity and Beyond!

Today’s consumers can access content from around the world almost instantaneously using a variety of devices, including smartphones, tablets, cars and even household appliances. Whether it’s a large-scale event such as Super Bowl LI or just another day, data usage is skyrocketing as we communicate with friends, family and strangers across the globe sharing ideas, uploading pictures, watching videos, playing games and much more.

According to a study by Domo, every minute in the U.S. consumers use over 18 million megabytes of wireless data. At the recent 2017 OCP U.S. Summit, Facebook shared that over 95 million photos and videos are posted on Instagram every day – and that’s only one app. As our world becomes smarter and more connected, data demands will only continue to grow.

The Next Generation of Data Movement and Storage

At Marvell, we’re focused on helping our customers move and store data securely, reliably and efficiently as we transform data movement and storage across a range of markets from the consumer to the cloud. With the staggering amount of data the world creates and moves every day, it’s hard to believe the humble beginnings of the technology we now take for granted.

What data demands will our future devices be tasked to support? Tweet us at @marvellsemi and let us know what you think!

-

March 17, 2017

Three Days, Two Speaking Sessions and One New Product Line: Marvell Sets the (IEEE 802.1BR) Standard for Data Center Solutions at the 2017 OCP U.S. Summit

By Michael Zimmerman, Vice President and General Manager, CSIBU, Marvell

At last week’s 2017 OCP U.S. Summit, it was impossible to miss the buzz and activity happening at Marvell’s booth. Taking our mantra #MarvellOnTheMove to heart, the team worked tirelessly throughout the week to present and demo Marvell’s vision for the future of the data center, which came to fruition with the launch of our newest Prestera® PX Passive Intelligent Port Extender (PIPE) family.

But we’re getting ahead of ourselves…

Marvell kicked off OCP with two speaking sessions from its leading technologists. Yaniv Kopelman, Networking CTO of the Networking Group, presented "Extending the Lifecycle of 3.2T Switches,” a discussion on the concept of port extender technology and how to apply it to future data center architecture. Michael Zimmerman, vice president and general manager of the Networking Group, then spoke on "Modular Networking" and teased Marvell's first modular solution based on port extender technology.

Throughout the show, customers, media and attendees visited Marvell’s booth to see our breakthrough innovations that are leading the disaggregation of the cloud network infrastructure industry. These products included:

- Marvell's Prestera PX PIPE family purpose-built to reduce power consumption, complexity and cost in the data center

- Marvell’s 88SS1092 NVMe SSD controller designed to help boost next-generation storage and data center systems

- Marvell’s Prestera 98CX84xx switch family designed to help data centers break the 1W per 25G port barrier for 25G Top-of-Rack (ToR) applications

- Marvell’s ARMADA® 64-bit ARM®-based modular SoCs developed to improve the flexibility, performance and efficiency of servers and network appliances in the data center

- Marvell’s Alaska® C 100G/50G/25G Ethernet transceivers which enable low-power, high-performance and small form factor solutions

We’re especially excited to introduce our PIPE solution on the heels of OCP because of the dramatic impact we anticipate it will have on the data center…

Until now, data centers with 10GbE and 25GbE port speeds have been challenged with achieving lower operating expense (OPEX) and capital expenditure (CAPEX) costs as their bandwidth needs increase. As the industry’s first purpose-built port extender supporting the IEEE 802.1BR standard, Marvell’s PIPE solution is a revolutionary approach that makes it possible to deploy ToR switches at half the power and cost of a traditional Ethernet switch.

Marvell’s PIPE solution enables data centers to be architected with a very simple, low-cost, low-power port extender in place of a traditional ToR switch, pushing the heavy switching functionality upstream. As the industry today transitions from 10GbE to 25GbE and from 40GbE to 100GbE port speeds, data centers are also in need of a modular building block to bridge the variety of current and next-generation port speeds. Marvell’s PIPE family provides a flexible and scalable solution to simplify and accelerate such transitions, offering multiple configuration options of Ethernet connectivity for a range of port speeds and port densities.

Amidst all of the announcements, speaking sessions and demos, our very own George Hervey, principal architect, also sat down with Semiconductor Engineering’s Ed Sperling for a Tech Talk. In the white board session, George discussed the power efficiency of networking in the enterprise and how costs can be saved by rightsizing Ethernet equipment.

The 2017 OCP U.S. Summit was filled with activity for Marvell, and we can’t wait to see how our customers benefit from our suite of data center solutions. In the meantime, we’re here to help with all of your data center needs, questions and concerns as we watch the industry evolve.

What were some of your OCP highlights? Did you get a chance to stop by the Marvell booth at the show? Tweet us at @marvellsemi to let us know, and check out all of the activity from last week. We want to hear from you!

-

March 13, 2017

Port Extender Technology Is Changing the Network Switch Industry

By George Hervey, Principal Architect, Marvell Semiconductor

Our lives are increasingly dependent on cloud-based computing and storage infrastructure. Whether at home, at work, or on the move with our smartphones and other mobile computing devices, cloud compute and storage resources are omnipresent. It is no surprise therefore that the demands on such infrastructure are growing at an alarming rate, especially as the trends of big data and the internet of things start to make their impact. With an increasing number of applications and users, the growth rate is believed to be 30x per annum, and even up to 100x in some cases. Such growth leaves Moore’s law and new chip developments unable to keep up with the needs of the computing and network infrastructure. These factors are making the data and communication network providers invest in multiple parallel computing and storage resources as a way of scaling to meet demands. It is now common for cloud data centers to have hundreds if not thousands of servers that need to be connected together.

Interconnecting all of these compute and storage appliances is becoming a real challenge, as more and more switches are required. Within a data center a classic approach to networking is a hierarchical one, with an individual rack using a leaf switch – also termed a top-of-rack or ToR switch – to connect within the rack, a spine switch for a series of racks, and a core switch for the whole center. And, like the servers and storage appliances themselves, these switches all need to be managed. In the recent past there have usually been one or two vendors of data center network switches and the associated management control software, but things are changing fast. Most of the leading cloud service providers, with their significant buying power and technical skills, recognised that they could save substantial cost by designing and building their own network equipment.

Many in the data center industry saw this as the first step in disaggregating the network hardware and the management software controlling it. With no shortage of software engineers, the cloud providers took the management software development in-house while outsourcing the hardware design. While that, in part, satisfied the commercial needs of the data center operators, from a technical and operational management perspective nothing has been simplified, leaving a huge number of switches to be managed.

The first breakthrough to simplify network complexity came in 2009 with the introduction of what we know now as a port extender. The concept rests on the belief that there are many nodes in the network that don’t need the extensive management capabilities most switches have. Essentially this introduces a parent/child relationship, with the controlling switch, the parent, being the managed switch and the child, the port extender, being fed from it. This port extender approach was ratified into the networking standard 802.1BR in 2012, and every network switch built today complies with this standard. With less technical complexity within the port extenders, there were perceived benefits that would come from lower per unit cost compared to a full bridge switch, in addition to power savings.

The controlling bridge and port extender approach has certainly helped to drive simplicity into network switch management, but that’s not the end of the story. Look under the lid of a port extender and you’ll find the same switch chip being used as in the parent bridge. We have moved forward, sort of. Without a chip specifically designed as a port extender, switch vendors have continued to use their standard chipsets, without realising the potential cost and power savings. However, the truly modular approach to network switching has taken a leap forward with the launch of Marvell’s 802.1BR compliant port extender IC, termed PIPE (passive intelligent port extender), which enables interoperability with a controlling bridge switch from any of the industry’s leading OEMs. It also offers attractive cost and power consumption benefits, the absence of which took the shine off the initial interest in port extender technology. Seen as the second stage of network disaggregation, this approach effectively leads to decoupling the port connectivity from the processing power in the parent switch, creating a far more modular approach to networking. The parent switch no longer needs to know what type of equipment it is connecting to, so all the logic and processing can be focused on the parent, and the port I/O taken care of in the port extender.

Marvell’s Prestera® PIPE family targets data centers operating at 10GbE and 25GbE speeds that are challenged to achieve lower CAPEX and OPEX costs as the need for bandwidth increases. The Prestera PIPE family will facilitate the deployment of top-of-rack switches at half the cost and power consumption of a traditional Ethernet switch. The PIPE approach also includes a fast fail over and resiliency function, essential for providing continuity and high availability to critical infrastructure.

-

January 20, 2017

A New Scaling Paradigm: The Ethernet Port Extender

By Michael Zimmerman, Vice President and General Manager, CSIBU, Marvell

Over the last three decades, Ethernet has grown to be the unifying communications infrastructure across all industries. More than 3M Ethernet ports are deployed daily across all speeds, from FE to 100GbE. In enterprise and carrier deployments, a combination of stackable pizza boxes and high-density chassis-based switches is used to address the growth in Ethernet. However, over the past several years, the Ethernet landscape has continued to change. With Ethernet deployment and innovation happening fastest in the data center, Ethernet switch architecture built for the data center dominates and forces adoption by the enterprise and carrier markets. This paradigm shift has made architecture decisions in the data center critical and influential across all Ethernet markets. However, the data center deployment model is different.

How Data Centers are Different

Ethernet port deployment in data centers tends to be uniform: the same Ethernet port speed, whether 10GbE, 25GbE or 50GbE, is deployed to every server through a top-of-rack (ToR) switch, and then aggregated in multiple Clos layers. The ultimate goal is to pack as many Ethernet ports at the highest commercially available speed onto the Ethernet switch, and make it the most economical and power efficient. The end point connected to the ToR switch is the server NIC, which is typically the highest available speed in the market (currently 10/25GbE moving to 25/50GbE). Today, switches with 128 ports of 25GbE are in deployment, going to 64x 100GbE and beyond in the next few years. But while data centers are moving to higher port density, higher port speeds and homogeneous deployment, there is still a substantial market for lower speeds such as 10GbE that continues to be deployed and must be served economically. The innovation in data centers drives higher density and higher port speeds, but many segments of the market still need a solution with lower port speeds and different densities. How can this problem be solved?

Bridging the Gap

Fortunately, the technology to bridge lower speed ports to higher density switches has existed for several years. The IEEE codified the 802.1BR port extender standard as the protocol needed to allow a fan-out of ports from an originating higher speed port. In essence, one high-end, high port density switch can fan out to hundreds or even thousands of lower speed ports. The high density switch is the control bridge, while the devices that fan out the lower speed ports are the port extenders.

Why Use Port Extenders

In addition to re-packaging the data center switch as a control bridge, there are several unique advantages to using port extenders:

- Port extenders require only a fraction of the cost, power and board space of any other solution aimed at serving Ethernet ports.

- Port extenders have very little or no software. This simplifies operational deployment, since the only managed entities are the high-end control bridges.

- Port extenders communicate with any high-end switch via the standard 802.1BR protocol. Additional options, such as Marvell DSA or programmable headers, are also possible.

- Port extenders work well with any speed transition: 100GbE to 10GbE ports, 400GbE to 25GbE ports, etc.

- Port extenders can operate at any downstream speed: 1GbE, 2.5GbE, 10GbE, 25GbE, etc.

- Port extenders can be oversubscribed or non-oversubscribed: the ratio of upstream bandwidth to downstream bandwidth is programmable from 1:1 to 1:4, depending on the application. This by itself can lower cost and power by a factor of up to 4.
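To make the fan-out and oversubscription arithmetic concrete, here is a minimal sketch. It assumes bandwidth is the only constraint and that the ratio is expressed as upstream:downstream (1:1 to 1:4, as above). The function names and the 32-port 100GbE control bridge are hypothetical, chosen only for illustration; they do not describe any specific product.

```python
def downstream_ports(upstream_gbps, downstream_gbps, oversubscription=1):
    """Downstream ports one upstream link can serve.

    oversubscription=1 models a 1:1 (non-oversubscribed) ratio;
    oversubscription=4 models a 1:4 upstream-to-downstream ratio.
    """
    if not 1 <= oversubscription <= 4:
        raise ValueError("ratio outside the 1:1 to 1:4 range described in the text")
    return (upstream_gbps * oversubscription) // downstream_gbps


def total_fanout(bridge_ports, upstream_gbps, downstream_gbps, oversubscription=1):
    """Total extended ports behind a control bridge (hypothetical model)."""
    return bridge_ports * downstream_ports(
        upstream_gbps, downstream_gbps, oversubscription
    )


if __name__ == "__main__":
    # One 100GbE uplink fanned out to 10GbE ports.
    print(downstream_ports(100, 10))      # 1:1 ratio
    print(downstream_ports(100, 10, 4))   # 1:4 ratio
    # Hypothetical control bridge with 32 ports of 100GbE.
    print(total_fanout(32, 100, 10))
    print(total_fanout(32, 100, 10, 4))
```

Under these assumptions, the same upstream port serves four times as many downstream ports at 1:4 as at 1:1, which is where the potential cost and power saving per port comes from.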

Marvell Port Extenders

Marvell has launched multiple purpose-built port extender products, which allow fan-out of 1GbE and 10GbE ports from higher speed 40GbE and 100GbE ports. Along with the silicon solution, software reference code is available and can be easily integrated into a control bridge. Marvell conducted interoperability tests with a variety of control bridge switches, including the leading switches in the market. The benchmarked design offers a 2x cost reduction and 2x power savings. An SDK, data sheet and design package are available. Marvell IEEE 802.1BR port extenders are shipping to the market now. Contact your sales representative for more information.

-

January 09, 2017

Ethernet Darwinism: The Survival of the Fittest (or the Fastest)

By Michael Zimmerman, Vice President and General Manager, CSIBU, Marvell

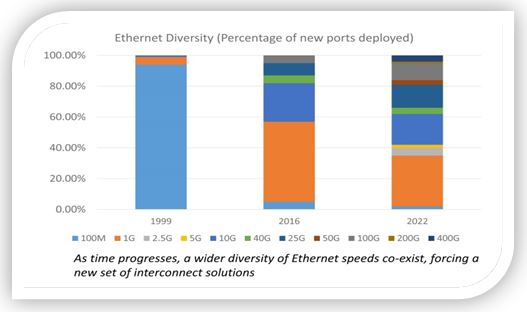

The most notable metric of Ethernet technology is the raw speed of communications. Ethernet took off in a meaningful way with 10BASE-T, which was used ubiquitously across many segments. With the introduction of 100BASE-T, the massive 10BASE-T installed base was replaced, a clear Darwinian effect of the fittest (fastest) displacing the older generation. However, when 1000BASE-T (GbE, Gigabit Ethernet) was introduced, contrary to industry experts' predictions, it did not fully displace 100BASE-T, and the two speeds have co-existed for more than 10 years. In fact, 100BASE-T is still being deployed in many applications. The introduction and slow ramp of 10GBASE-T has not impacted the growth of GbE, and it is only recently that GbE ports began consistently growing year over year. This trend signaled a new evolution paradigm for Ethernet: the new doesn't replace the old, and the co-existence of multiple variants is the general rule. The introduction of 40GbE and 25GbE added to the wide diversity of Ethernet speeds, and although 25GbE was rumored to displace 40GbE, it is expected that 40GbE ports will still be deployed over the next 10 years.¹

Hence, a new market reality evolved: there is less of a cannibalizing effect (i.e. newer speed cannibalizing the old), and more co-existence of multiple variants. This new diversity will require a set of solutions which allow effective support for multiple speed interconnect. Two critical capabilities will be needed:

- Economical scale-down to a small number of ports²

- Support of multiple Ethernet speeds

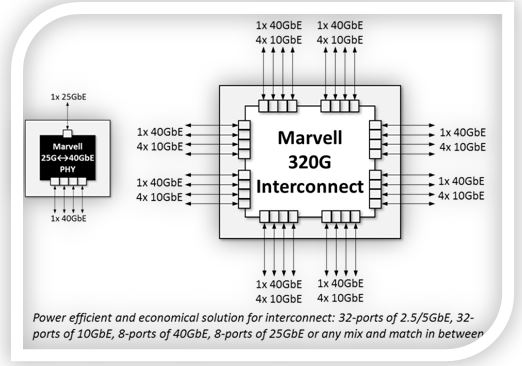

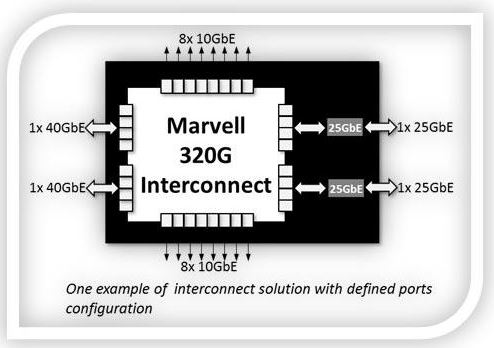

Marvell launched a new set of Ethernet interconnect solutions that meet this evolution pattern. The first products in the family are the Prestera® 98DX83xx 320G interconnect switch and the Alaska® 88X5113 25G/40G Gearbox PHY. The 98DX83xx switch fans out up to 32 ports of 10GbE or 8 ports of 40GbE in an economical 24x20mm package, with power of less than 0.5W per 10G port.

The 88X5113 Gearbox converts a single port of 40GbE to 25GbE. The combination of the two devices creates unique connectivity configurations for a myriad of Ethernet speeds and, most importantly, enables scaling down to a few ports. While data center-scale 25GbE switches have been widely available with 64 ports, 128 ports and beyond, a new underserved market segment has evolved for lower port counts of 25GbE and 40GbE. Marvell has addressed this space with the new interconnect solution, allowing customers to configure any number of ports to different speeds while keeping the power envelope below 20W, at a fraction of the hardware and thermal footprint of comparable data center solutions. Low port count connectivity at 10GbE, 25GbE and 40GbE is now well addressed by Marvell. Samples and development boards with an SDK are ready, with the option of a complete package of application software.
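As a rough illustration of how a port mix relates to the 320G capacity and the quoted power figure, the sketch below checks a configuration against the switch bandwidth and estimates an upper bound on switch power. It rests on several assumptions not stated in the product description: aggregate bandwidth is treated as the only constraint (real devices also have SERDES lane and port-grouping limits), each 25GbE port is assumed to occupy one 40GbE switch port through a gearbox, and gearbox PHY power is not counted. All names are hypothetical.

```python
SWITCH_CAPACITY_GBPS = 320   # the "320G" figure quoted for the switch
WATTS_PER_10G = 0.5          # quoted upper bound per 10G of port bandwidth

# Switch-side bandwidth consumed by one port of each speed, in Gbps.
# Assumption: a 25GbE port sits behind a gearbox on a 40GbE switch port.
SWITCH_SIDE_GBPS = {"10GbE": 10, "25GbE": 40, "40GbE": 40}


def switch_load_gbps(port_mix):
    """Total switch-side bandwidth used by a {speed: count} port mix."""
    return sum(SWITCH_SIDE_GBPS[speed] * count for speed, count in port_mix.items())


def fits(port_mix):
    """True if the mix stays within the switch's aggregate capacity."""
    return switch_load_gbps(port_mix) <= SWITCH_CAPACITY_GBPS


def power_upper_bound_watts(port_mix):
    """Upper bound on switch power for the mix (gearbox PHYs excluded)."""
    return switch_load_gbps(port_mix) / 10 * WATTS_PER_10G


if __name__ == "__main__":
    for mix in ({"10GbE": 32}, {"40GbE": 8}, {"10GbE": 16, "25GbE": 4}):
        print(mix, fits(mix), power_upper_bound_watts(mix))
```

Under these assumptions, a fully loaded switch stays within the sub-20W envelope mentioned above, since 32 ports of 10G at less than 0.5W each comes to less than 16W.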


¹ In the mega data center market, there is a cannibalization effect on former Ethernet speeds and a mass migration to higher speeds. However, in the broader market, which includes private data centers, enterprises and carriers, multiple Ethernet speeds co-exist in many use cases.

² Ethernet switches with a high port density of 10GbE and 25GbE are generally available. However, these solutions do not scale down well to fewer than 24 ports, where there is pent-up demand for devices such as those proposed here by Marvell.
