By Todd Owens, Field Marketing Director, Marvell

Like a kid in a candy store, choose I/O wisely.

Remember when, as a child, you made a quick stop at the convenience store and stood in front of the candy aisle while your parents said, “Hurry and pick one”? With so many choices, the decision was often confusing. With time running out, you’d usually just grab the name-brand candy you were familiar with. But what were you missing out on? Perhaps now you realize there were more delicious or healthier offerings you could have chosen.

I use this as an analogy for choosing I/O technology in server configurations. There are lots of choices, and it takes time to understand all the differences. As a result, system architects in many cases just fall back to the legacy name-brand adapter they have become familiar with. Is this the best option for their client though? Not always. Here are some reasons why.

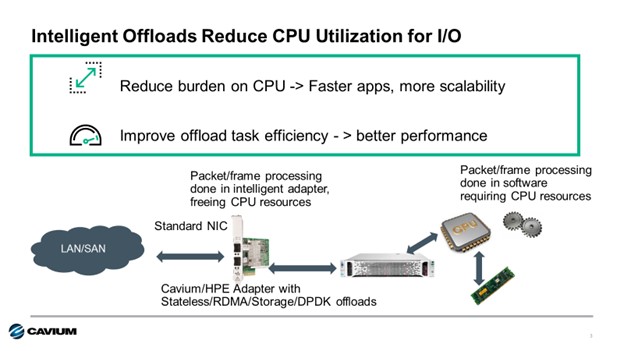

Some of today’s Ethernet adapters provide added capabilities that I refer to as “Intelligent I/O”. These adapters utilize a variety of offload technology and other capabilities to take on tasks associated with I/O processing that are typically done in software by the CPU when using a basic “standard” Ethernet adapter. Intelligent offloads include things like SR-IOV, RDMA, iSCSI, FCoE or DPDK. Each of these offloads the work to the adapter and, in many cases, bypasses the O/S kernel, speeding up I/O transactions and increasing performance.

As servers become more powerful and get packed with more virtual machines running more applications, CPU utilization of 70-80% is now commonplace. By using adapters with intelligent offloads, the CPU utilization spent on I/O transactions can be reduced significantly, giving server administrators more CPU headroom. This means more CPU resources for applications, or higher VM density per server.

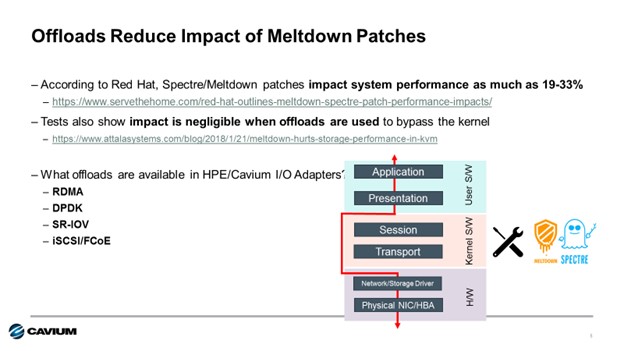

Another reason is to mitigate the performance impact of the Spectre and Meltdown fixes now required for x86 server processors. The side-channel vulnerabilities known as Spectre and Meltdown in x86 processors required operating system kernel patches along with CPU microcode updates. These patches can significantly reduce CPU performance. For example, Red Hat reported the impact could be as much as a 19% performance degradation. That’s a big performance hit.

Storage offloads and offloads like SR-IOV, RDMA and DPDK all bypass the O/S kernel. Because they bypass the kernel, the performance impacts of the Spectre and Meltdown fixes are bypassed as well. This means I/O transactions with intelligent I/O adapters are not impacted by these fixes, and I/O performance is maximized.

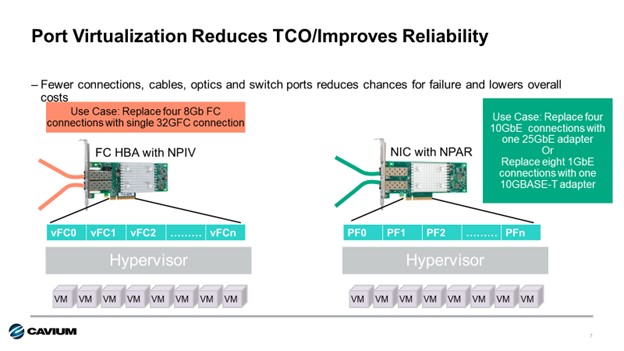

Finally, intelligent I/O can play a role in reducing cost and complexity and optimizing performance in virtual server environments. Some intelligent I/O adapters have port virtualization capabilities. Cavium Fibre Channel HBAs implement N-port ID Virtualization, or NPIV, to allow a single Fibre Channel port to appear as multiple virtual Fibre Channel adapters to the hypervisor. For Cavium FastLinQ Ethernet Adapters, Network Partitioning, or NPAR, is utilized to provide similar capability for Ethernet connections. Up to eight independent connections per port can be presented to the host O/S, making a single dual-port adapter look like 16 NICs to the operating system. Each virtual connection can be set to specific bandwidth and priority settings, providing full quality of service per connection.
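To make the NPAR arithmetic concrete, here is a minimal Python sketch that models a dual-port adapter split into eight partitions per port. The `Partition` class, its field names, and the even bandwidth split are illustrative assumptions only; they are not the FastLinQ configuration interface, and real bandwidth and priority settings are made through the adapter's own management tools.

```python
from dataclasses import dataclass


@dataclass
class Partition:
    """One virtual NIC as seen by the host (hypothetical model)."""
    port: int
    index: int
    min_bw_pct: int  # assumed: guaranteed share of the physical port
    max_bw_pct: int  # assumed: upper cap on the share of the physical port


def partition_adapter(ports=2, partitions_per_port=8):
    """Split each physical port evenly into partitions."""
    share = 100 // partitions_per_port  # even guaranteed share per partition
    return [
        Partition(port=p, index=i, min_bw_pct=share, max_bw_pct=100)
        for p in range(ports)
        for i in range(partitions_per_port)
    ]


def guaranteed_total(partitions, port):
    """Sum of guaranteed shares on one port; should not exceed 100."""
    return sum(x.min_bw_pct for x in partitions if x.port == port)


nics = partition_adapter()
print(len(nics))                  # number of NICs presented to the O/S
print(guaranteed_total(nics, 0))  # guaranteed bandwidth committed on port 0
```

With the defaults, the model yields 16 virtual NICs, matching the dual-port example above, and the guaranteed shares on each port stay within 100%.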

A key advantage of this port virtualization capability is that the number of cables and connections to a server can be reduced. In the case of storage, four 8Gb Fibre Channel connections can be replaced by a single 32Gb Fibre Channel connection. For Ethernet, eight 1GbE connections can easily be replaced by a single 10GbE connection, and two 10GbE connections can be replaced with a single 25GbE connection, leaving 20% of the 25GbE link's bandwidth to spare.
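The consolidation examples above are simple arithmetic, sketched here in Python. The `consolidation` helper is a hypothetical name, and the figures are the nominal link speeds quoted in the text, not measured throughput.

```python
def consolidation(old_count, old_gbps, new_gbps):
    """Return (aggregate old bandwidth, spare fraction of the new link).

    Uses nominal link speeds only; protocol overhead is ignored.
    """
    old_total = old_count * old_gbps
    if old_total > new_gbps:
        raise ValueError("new link cannot carry the aggregate bandwidth")
    spare = (new_gbps - old_total) / new_gbps
    return old_total, spare


cases = {
    "4 x 8Gb FC -> 1 x 32Gb FC": (4, 8, 32),
    "8 x 1GbE   -> 1 x 10GbE": (8, 1, 10),
    "2 x 10GbE  -> 1 x 25GbE": (2, 10, 25),
}

for label, args in cases.items():
    total, spare = consolidation(*args)
    print(f"{label}: {total} Gb aggregate, {spare:.0%} of the new link spare")
```

The spare figure is expressed as a fraction of the new link, which is how two 10GbE connections on a 25GbE link leave 20% to spare (5 of 25 Gb).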

At HPE, there are more than fifty Ethernet adapters, from 10GbE to 100GbE, to choose from across the HPE ProLiant, Apollo, BladeSystem and HPE Synergy server portfolios. That’s a lot of documentation to read and compare. Cavium is proud to be a supplier of eighteen of these Ethernet adapters, and we’ve created a handy quick reference guide to highlight which of these offloads and virtualization features are supported on which adapters. View the complete Cavium HPE Ethernet Adapter Quick Reference guide here.

For Fibre Channel HBAs, there are fewer choices (only nineteen), but we make a quick reference document available for our HBA offerings at HPE as well. You can view the Fibre Channel HBA Quick Reference here.

In summary, when configuring HPE servers, think twice before selecting your I/O device. Choose an Intelligent I/O Adapter like those from HPE and Cavium. Cavium provides the broadest portfolio of intelligent Ethernet and Fibre Channel adapters for HPE Gen9 and Gen10 Servers and they support most, if not all, of the features mentioned in this blog. The best news is that the HPE/Cavium adapters are offered at the same or lower price than other products with fewer features. That means with HPE and Cavium I/O, you get more for less, and it just works too!

Copyright © 2026 Marvell, All rights reserved.