Faster, Farther and Going Optical: How PCIe Is Accelerating the AI Revolution

For over 20 years, PCIe (Peripheral Component Interconnect Express) has been the dominant standard for connecting processors, NICs, drives and other components within servers. That is due to the protocol’s low latency and high bandwidth, as well as the growing expertise around PCIe across the technology ecosystem. PCIe will also play a leading role in defining the next generation of AI computing systems, both through higher performance and through the pairing of PCIe with optics.

Here’s why:

PCIe Transitions Are Accelerating

Seven years passed between the debut of PCIe 3.0 (8 gigatransfers per second, or GT/s) in 2010 and the release of PCIe 4.0 (16 GT/s) in 2017.¹ Commercial adoption, meanwhile, took closer to a full decade.²

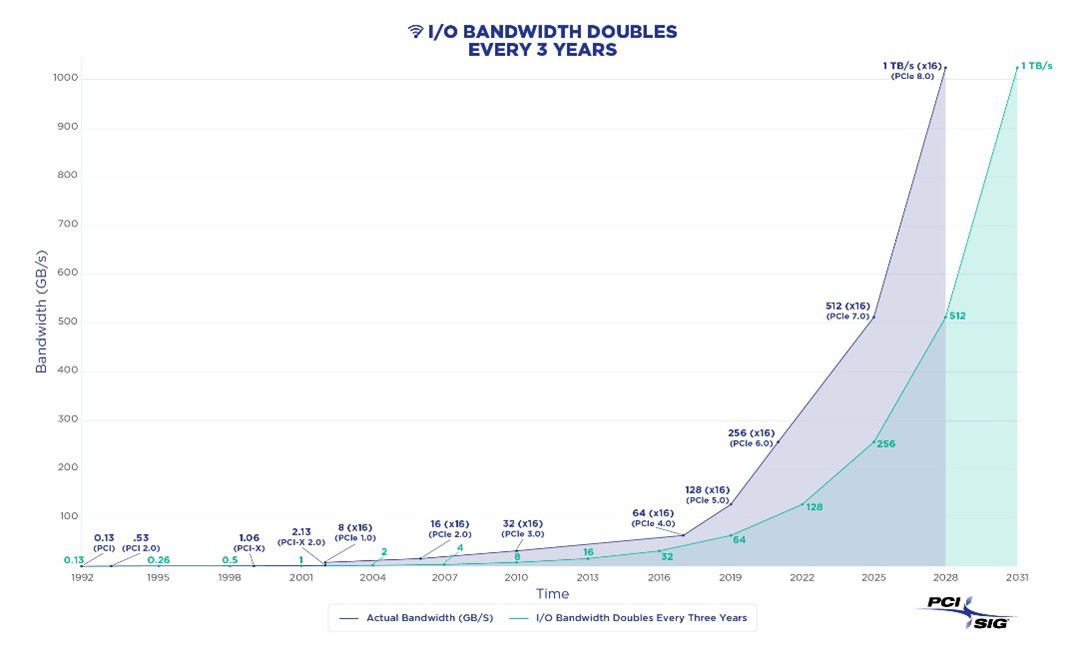

Toward a terabit (per second): PCIe standards are being developed and adopted at a faster rate to keep up with the chip-to-chip interconnect speeds needed by system designers.

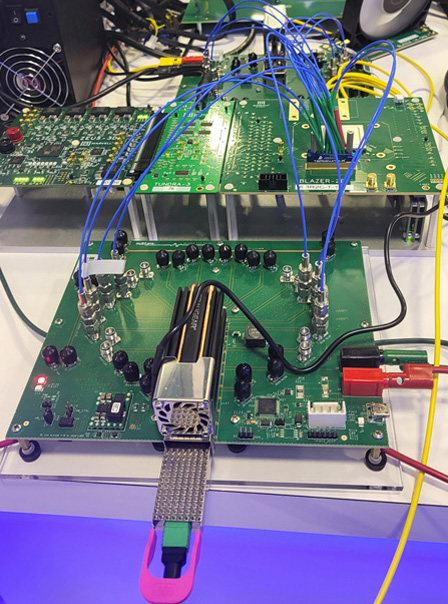

By contrast, only three years passed between the debut of PCIe 5.0 (32 GT/s) and PCIe 6.0 (64 GT/s), with commercial adoption following on a similar timeline. Fast forward to this year: the first PCIe 6.0 products are debuting just as the PCIe 7.0 specification (128 GT/s) is being finalized.¹ The PCI Special Interest Group (PCI-SIG) has also started preliminary work on the PCIe 8.0 specification.² Marvell demonstrated a pre-standard PCIe 7.0 electrical interconnect at 128 GT/s at OCP24 and a PCIe 7.0 optical interconnect at OFC25.

A Marvell PCIe 7.0 SerDes running at 128 GT/s transmits signals through the TeraHop optical module at OFC 2025.
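To make the doubling concrete, here is a minimal Python sketch that computes per-direction bandwidth for a x16 link at each generation discussed above. The names (`PcieGen`, `GENERATIONS`, `raw_gbytes_per_s`, `effective_gbytes_per_s`) are hypothetical, and the efficiency figures are approximations assumed for illustration: 128b/130b encoding for PCIe 3.0 through 5.0 and roughly 242/256 for the FLIT mode used from PCIe 6.0 onward.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PcieGen:
    name: str
    gt_per_s: float    # per-lane transfer rate in GT/s (1 raw bit per transfer per lane)
    efficiency: float  # approximate fraction of raw bits left after encoding/FLIT overhead


# Approximate efficiencies (assumptions for illustration):
# 128b/130b line encoding for PCIe 3.0-5.0, roughly 242/256 for PCIe 6.0+ FLIT mode.
GENERATIONS = [
    PcieGen("PCIe 3.0", 8, 128 / 130),
    PcieGen("PCIe 4.0", 16, 128 / 130),
    PcieGen("PCIe 5.0", 32, 128 / 130),
    PcieGen("PCIe 6.0", 64, 242 / 256),
    PcieGen("PCIe 7.0", 128, 242 / 256),
]


def raw_gbytes_per_s(gen: PcieGen, lanes: int) -> float:
    """Raw bandwidth in one direction, in GB/s (8 bits per byte)."""
    return gen.gt_per_s * lanes / 8


def effective_gbytes_per_s(gen: PcieGen, lanes: int) -> float:
    """Approximate usable bandwidth in one direction after encoding overhead."""
    return raw_gbytes_per_s(gen, lanes) * gen.efficiency


if __name__ == "__main__":
    for gen in GENERATIONS:
        print(
            f"{gen.name}: x16 raw {raw_gbytes_per_s(gen, 16):6.1f} GB/s, "
            f"~{effective_gbytes_per_s(gen, 16):6.1f} GB/s effective"
        )
```

Under these assumptions, a PCIe 7.0 x16 link carries 256 GB/s (about 2 Tb/s) of raw bandwidth in each direction, sixteen times a PCIe 3.0 x16 link.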

While the demand for greater bandwidth began in the cloud era, AI has made it far more urgent. The accelerated pace of PCIe development, together with the growing expertise around the protocol, provides a practical way to deliver high bandwidth while keeping latency low compared with other communication protocols.

Links Are Getting Longer

Historically, PCIe links stayed within the boundary of a 1U to 2U (rack unit) server. That’s not the case anymore. Hyperscalers are deploying systems that fill an entire rack and are working on a system that will take up four or more racks.

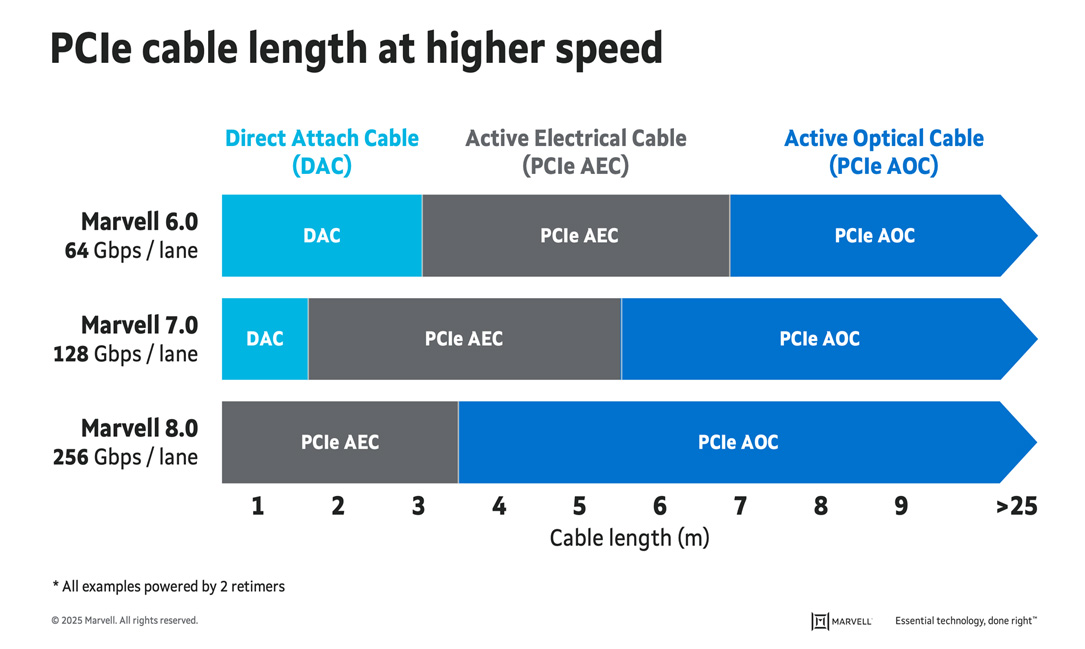

PCIe 6.0 active electrical cables (AECs) can be used for links up to seven meters long, while PCIe 6.0 active optical cables (AOCs) can go much farther; the Marvell® Alaska® P PCIe retimer can be integrated in both. For reference, AECs are copper cables with digital signal processors (DSPs) integrated at the terminal ends to improve signal integrity. AOCs are optical cables with DSPs, amplifiers and drivers added for greater reach. AOCs go farther, but the extra components mean more power and cost.

AOCs enable links long enough for scale-up servers. PCIe won’t be the only scale-up fabric, but it will certainly be one of the leading choices, because it is the de facto I/O (input/output) interface of virtually every component and has inherently low latency.

With PCIe 7.0 and 8.0, passive copper cables become increasingly impractical, even for carrying data within a rack. Enhanced (active) PCIe cables are expected to be adopted more widely, according to 650 Group.³
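One way to see why passive copper runs out of headroom is to look at the Nyquist frequency each generation puts on the wire, since copper channel loss grows with frequency. This minimal Python sketch (the function and table names are hypothetical) computes it from the transfer rate and the bits carried per symbol. PCIe 8.0 is left out because its signaling is not described here.

```python
def nyquist_ghz(gt_per_s: float, bits_per_symbol: int) -> float:
    """Nyquist frequency (GHz) of one lane: half the symbol rate in GBaud."""
    symbol_rate_gbaud = gt_per_s / bits_per_symbol
    return symbol_rate_gbaud / 2


# NRZ carries 1 bit per symbol; PAM4, used starting with PCIe 6.0, carries 2.
LINKS = {
    "PCIe 5.0 (NRZ)": (32, 1),
    "PCIe 6.0 (PAM4)": (64, 2),
    "PCIe 7.0 (PAM4)": (128, 2),
}

if __name__ == "__main__":
    for name, (rate, bits) in LINKS.items():
        print(f"{name}: {rate / bits:.0f} GBaud, Nyquist {nyquist_ghz(rate, bits):.0f} GHz")
```

By this arithmetic, PAM4 lets PCIe 6.0 double the data rate of PCIe 5.0 while keeping the Nyquist frequency at 16 GHz, but PCIe 7.0 doubles it to 32 GHz, where a passive cable of a given length loses considerably more signal.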

The diagram above underscores the continued co-existence of copper and optical. With nearly six meters of reach, PCIe 7.0 AECs will be able to carry 128 GT/s signals across a scale-up system filling one and potentially two racks, and PCIe 8.0 AECs are expected to span systems within a rack. Co-existence also means greater choice and flexibility in infrastructure design.
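The copper-versus-optical choice can be sketched as a simple reach check: use the cheapest, lowest-power cable that covers the link and fall back to optics only when needed. This is a hypothetical helper, not a Marvell tool. The AEC reach figures come from this article (7 m for PCIe 6.0, "nearly six meters" for PCIe 7.0, rounded to 6 m), while the passive-copper limits and the cost/power ordering (passive below AEC below AOC) are assumptions for illustration.

```python
from enum import Enum


class Cable(Enum):
    PASSIVE_COPPER = "passive copper (DAC)"
    AEC = "active electrical cable"
    AOC = "active optical cable"


# AEC reach (meters) per the article: up to 7 m for PCIe 6.0, "nearly six" for 7.0
# (rounded to 6 m here).
AEC_REACH_M = {6: 7.0, 7: 6.0}
# HYPOTHETICAL passive-copper limits, chosen only for illustration.
PASSIVE_REACH_M = {6: 2.0, 7: 1.0}


def choose_cable(gen: int, length_m: float) -> Cable:
    """Pick the cheapest, lowest-power cable type (assumed ordering) that covers the link."""
    if gen not in AEC_REACH_M:
        raise ValueError(f"no reach data for PCIe {gen}.0")
    if length_m <= PASSIVE_REACH_M[gen]:
        return Cable.PASSIVE_COPPER
    if length_m <= AEC_REACH_M[gen]:
        return Cable.AEC
    return Cable.AOC  # optical covers the longer runs, at extra power and cost
```

For example, `choose_cable(7, 4.5)` returns `Cable.AEC`, while a 12 m PCIe 7.0 link falls through to `Cable.AOC`.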

In later generations, PCIe will also likely be paired with emerging optical designs such as co-packaged optics, near-packaged optics and onboard optics.

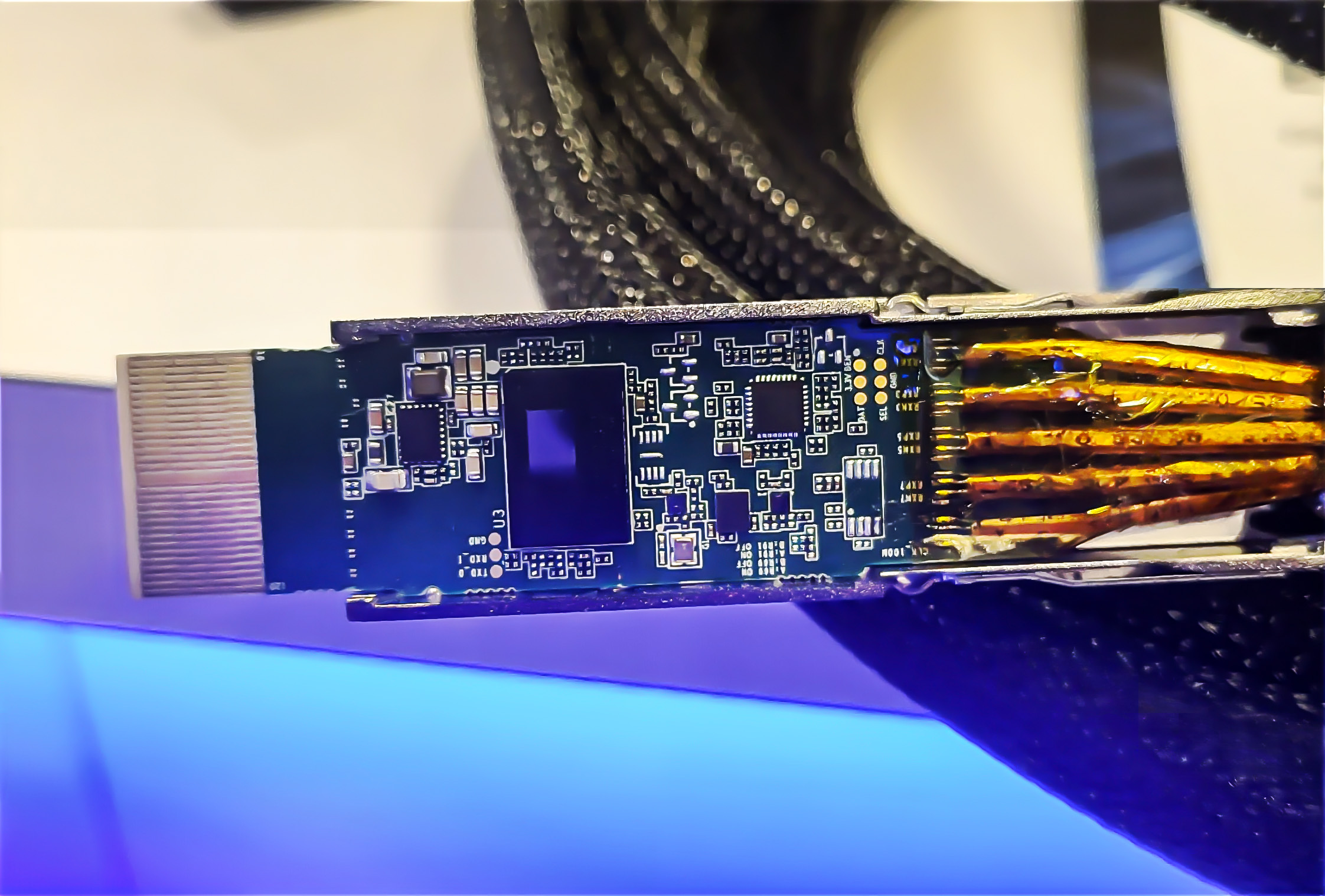

A Marvell PCIe 6.0 retimer (rectangular chip) in a long-distance cable from Luxshare.

It’s the Protocol of Choice for Disaggregation

Modular server designs, where CPUs, NICs and other components sit on independent boards connected by PCIe, are already fairly common in cloud and enterprise servers. In the next phase of disaggregation, expect to see processor, memory, networking and storage appliances, along with other systems, linked via PCIe/CXL. Separating component classes into their own appliances can increase equipment utilization, lower costs and potentially improve total cost of ownership (TCO).
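The utilization argument can be illustrated with a toy model, not a description of any Marvell product: if demand peaks at different times on different servers, a shared appliance sized for the peak of the combined demand needs fewer devices than giving each server enough for its own peak. The function names and demand numbers below are hypothetical.

```python
def dedicated_devices(demand: list[list[int]]) -> int:
    """Devices needed if each server is sized for its own peak demand."""
    return sum(max(server) for server in demand)


def pooled_devices(demand: list[list[int]]) -> int:
    """Devices needed if one shared appliance is sized for the peak of total demand."""
    return max(sum(step) for step in zip(*demand))


def utilization(demand: list[list[int]], provisioned: int) -> float:
    """Average fraction of provisioned devices in use across all time steps."""
    used = sum(sum(server) for server in demand)
    steps = len(demand[0])
    return used / (provisioned * steps)


# HYPOTHETICAL demand: 3 servers x 4 time steps, devices in use per step.
DEMAND = [
    [4, 1, 1, 2],
    [1, 4, 2, 1],
    [1, 1, 4, 2],
]

if __name__ == "__main__":
    for label, n in (("dedicated", dedicated_devices(DEMAND)),
                     ("pooled", pooled_devices(DEMAND))):
        print(f"{label}: {n} devices, {utilization(DEMAND, n):.0%} utilized")
```

With this made-up demand, per-server sizing needs 12 devices at 50% average utilization, while a pooled appliance covers the same load with 7 devices at roughly 86%. Real gains depend on how well peaks are staggered, and the model ignores the latency the pooling interconnect adds.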

The key to disaggregation is designing the system so that the interconnects don’t add enough latency to interfere with overall performance. If performance degrades, disaggregation likely won’t be worthwhile, so the latency impact needs to be minimized. PCIe retimers primarily perform signal conditioning, which gives them a good balance between cable reach and added latency.
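A back-of-the-envelope latency budget shows the trade-off: every meter of cable adds flight time and every retimer adds a hop. This minimal Python sketch assumes a propagation delay of roughly 5 ns per meter (a common rule of thumb) and uses a hypothetical per-retimer latency and budget. It ignores protocol and endpoint latency, so treat it as an illustration rather than a design tool.

```python
# HYPOTHETICAL figures for illustration only; not datasheet values.
PROPAGATION_NS_PER_M = 5.0  # rough rule of thumb for signal flight time in cable or fiber


def link_latency_ns(cable_m: float, retimers: int, retimer_ns: float) -> float:
    """One-way latency added by the physical link: flight time plus retimer hops."""
    return cable_m * PROPAGATION_NS_PER_M + retimers * retimer_ns


def fits_budget(cable_m: float, retimers: int, retimer_ns: float, budget_ns: float) -> bool:
    """True if the link's added latency stays within the allowed budget."""
    return link_latency_ns(cable_m, retimers, retimer_ns) <= budget_ns


if __name__ == "__main__":
    # Hypothetical: 10 ns per retimer against a 50 ns added-latency budget.
    for cable_m, retimers in ((2.0, 1), (7.0, 2)):
        added = link_latency_ns(cable_m, retimers, retimer_ns=10.0)
        ok = fits_budget(cable_m, retimers, 10.0, budget_ns=50.0)
        print(f"{cable_m} m, {retimers} retimer(s): {added:.0f} ns, within budget: {ok}")
```

With these placeholder numbers, a 2 m link with one retimer adds 20 ns and fits, while a 7 m link with two retimers adds 55 ns and exceeds the budget.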

At FMS25, Marvell and Micron staged a live demonstration connecting a host system to a PCIe 6.0 SSD through a 2-meter passive direct-attach copper cable (DAC) with just a single retimer. The link to the Micron 9650 data center SSD remained robust over the three days of the exhibition and sustained line-rate SSD performance (128KB sequential reads at close to 27 GB/s). Ashraf Dawood of Marvell walks through the demo and shares the results in this video.

The Marvell-Micron PCIe 6.0 demo up close: two meters, one retimer, low impact on overall latency.
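The demo figures can be put in context with a little arithmetic. This Python sketch assumes a x4 link (common for NVMe SSDs, but not stated above) and reads "128KB" as 128 KiB; both are assumptions, and `raw_gbytes_per_s` is a hypothetical helper.

```python
def raw_gbytes_per_s(gt_per_s: float, lanes: int) -> float:
    """Raw one-direction PCIe bandwidth in GB/s (1 bit per transfer per lane)."""
    return gt_per_s * lanes / 8


LANES = 4                 # ASSUMPTION: x4 link, common for NVMe SSDs; not stated above
READ_SIZE = 128 * 1024    # ASSUMPTION: "128KB" read size taken as 128 KiB
observed_gb_s = 27.0      # "close to 27GB/s" from the demo

raw_gb_s = raw_gbytes_per_s(64, LANES)  # PCIe 6.0 runs at 64 GT/s per lane
link_fraction = observed_gb_s / raw_gb_s
reads_per_s = observed_gb_s * 1e9 / READ_SIZE

if __name__ == "__main__":
    print(f"raw x{LANES} bandwidth: {raw_gb_s:.0f} GB/s")
    print(f"observed / raw: {link_fraction:.1%}")
    print(f"approx. 128 KiB reads per second: {reads_per_s:,.0f}")
```

Under the x4 assumption, the demo's throughput works out to about 84% of the raw 32 GB/s link rate, or roughly 206,000 reads per second; the gap to the raw rate includes encoding and protocol overhead.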

Next-generation Connections

For two decades, PCIe was largely used at the board level. Through continued development and collaboration across the ecosystem, however, it has become one of the key technologies for building scale-up architectures and expanding the use of optical connectivity in computing.

1. PCI-SIG.

2. Tom’s Hardware, May 2019.

3. 650 Group, June 2024.

# # #

This blog contains forward-looking statements within the meaning of the federal securities laws that involve risks and uncertainties. Forward-looking statements include, without limitation, any statement that may predict, forecast, indicate or imply future events or achievements. Actual events or results may differ materially from those contemplated in this blog. Forward-looking statements are only predictions and are subject to risks, uncertainties and assumptions that are difficult to predict, including those described in the “Risk Factors” section of our Annual Reports on Form 10-K, Quarterly Reports on Form 10-Q and other documents filed by us from time to time with the SEC. Forward-looking statements speak only as of the date they are made. Readers are cautioned not to put undue reliance on forward-looking statements, and no person assumes any obligation to update or revise any such forward-looking statements, whether as a result of new information, future events or otherwise.


Copyright © 2026 Marvell, All rights reserved.
