By Nicola Bramante, Senior Principal Engineer, Connectivity Marketing, Marvell

The exponential growth in AI workloads is driving new connectivity requirements for data rate, bandwidth and distance, especially in scale-up applications. With direct attach copper (DAC) cables reaching their limits in bandwidth and distance, a new class of cables, active copper cables (ACCs), is coming to market for short-reach links within a data center rack and between racks. Designed for connections up to 2 to 2.5 meters long, ACCs can transmit signals farther than traditional passive DAC cables in the 200G/lane fabrics hyperscalers will soon deploy in their rack infrastructures.

At the same time, a 1.6T ACC consumes a relatively minuscule 2.5 watts of power and can be built around fewer and less sophisticated components than longer active electrical cables (AECs) or active optical cables (AOCs). This combination of features gives ACCs an optimal mix of bandwidth, power, and cost for server-to-server or server-to-switch connections within the same rack.
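To put the 2.5-watt figure in perspective, the back-of-the-envelope sketch below converts it into energy per bit. It rests on assumptions not stated in the text: that "1.6T" means 1.6 Tb/s of aggregate throughput carried over 200G lanes, and that 2.5 W is the total for the cable. The variable names are purely illustrative.

```python
# Back-of-the-envelope energy efficiency for a 1.6T ACC.
# Assumptions (not from the article): "1.6T" = 1600 Gb/s aggregate,
# carried over 200 Gb/s lanes, and 2.5 W is the total cable power.

power_w = 2.5          # quoted power for a 1.6T ACC
rate_gbps = 1600       # assumed aggregate data rate in Gb/s
lane_rate_gbps = 200   # per-lane rate quoted in the article (200G/lane)

# Number of lanes implied by the aggregate and per-lane rates.
lanes = rate_gbps // lane_rate_gbps

# Energy per bit: W / (bit/s) = J/bit; expressed here in picojoules.
# power_w / (rate_gbps * 1e9) * 1e12 simplifies to power_w * 1000 / rate_gbps.
energy_pj_per_bit = power_w * 1000 / rate_gbps

# Power attributable to each lane under the same assumptions.
power_per_lane_w = power_w / lanes

print(f"lanes: {lanes}")
print(f"energy: {energy_pj_per_bit:.4f} pJ/bit")
print(f"power per lane: {power_per_lane_w:.4f} W")
```

Under these assumptions the cable works out to roughly 1.6 pJ per bit; a different reading of the 2.5 W figure (for example, per cable end) would scale the result accordingly.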

Marvell announced its first ACC linear equalizers for producing ACC cables last month.

Inside the Cable

ACCs effectively integrate technology originally developed for the optical realm into copper cables. The idea is to use optical technologies to extend bandwidth, distance and performance while taking advantage of copper’s economics and reliability. Where these ACCs differ is in the components added to them and the way they leverage the technological capabilities of a switch or other device to which they are connected.

ACCs include an equalizer that boosts signals received from the opposite end of the connection. As analog devices, ACC equalizers are relatively inexpensive compared to digital alternatives, consume minimal power and add very little latency.

By Sandeep Bharathi, president, Data Center Group, Marvell

This blog was originally posted at Fortune.

Semiconductors have transformed virtually every aspect of our lives. Now, the semiconductor industry is on the verge of a profound transformation itself.

Customized silicon (chips uniquely tailored to meet the performance and power requirements of an individual customer for a particular use case) will become increasingly pervasive as data center operators and AI developers seek to harness the power of AI. Expanded educational opportunities, better decision making, and ways to improve the sustainability of the planet all become possible if we get the computational infrastructure right.

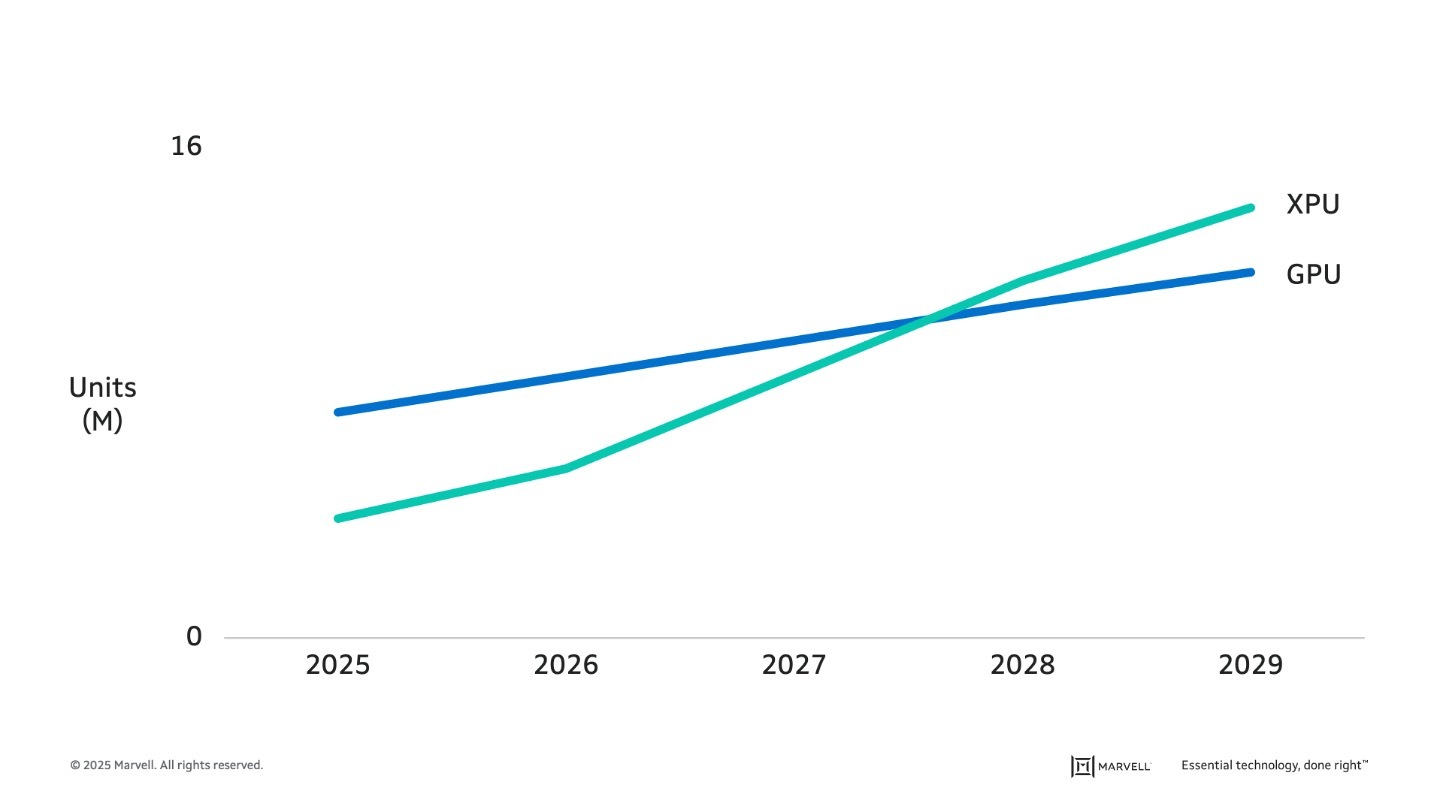

The turn to custom, in fact, is already underway. The number of GPUs—the merchant chips employed for AI training and inference—produced today is nearly double the number of custom XPUs built for the same tasks. By 2028, custom accelerators will likely pass GPUs in units shipped, with the gap expected to grow.1

By Khurram Malik, Senior Director of Marketing, Custom Cloud Solutions, Marvell

Near-memory compute technologies have always been compelling. They can offload tasks from CPUs to boost utilization and revenue opportunities for cloud providers. They can reduce data movement, one of the primary contributors to power consumption,1 while also increasing memory bandwidth for better performance.

They have also been deployed only sporadically; thermal problems, a lack of standards, cost and other issues have prevented many of these ideas from giving developers the Goldilocks combination of features needed to jump-start commercial adoption.2

This picture is now changing with CXL compute accelerators, which leverage open standards, familiar technologies and a broad ecosystem. And, in a demonstration at OCP 2025, Samsung Electronics, software-defined composable solution provider Liqid, and Marvell showed how CXL accelerators can deliver outsized gains in performance.

The Liqid EX5410C is a demonstration of a CXL memory pooling and sharing appliance capable of scaling up to 20TB of additional memory. Five of the 4RU appliances can then be integrated into a pod for a whopping 100TB of memory and 5.1Tbps of additional memory bandwidth. The CXL fabric is managed by Liqid’s Matrix software that enables real-time and precise memory deployment based on workload requirements.

By Vienna Alexander, Marketing Content Professional, Marvell

Optical connectivity is the backbone of AI servers and an expanding opportunity where Marvell shines, given its comprehensive optical connectivity portfolio.

Marvell showcased its notable developments at ECOC, the European Conference on Optical Communication, alongside various companies contributing to the hardware needed for this AI era.

Learn more about these impactful optical innovations that are enabling AI infrastructure, plus the trends and goings-on of the market.

By Chander Chadha, Director of Marketing, Flash Storage Products, Marvell

AI is all about dichotomies. Distinct computing architectures and processors have been developed for training and inference workloads. In the past two years, scale-up and scale-out networks have emerged.

Soon, the same will happen in storage.

The needs of AI infrastructure are prompting storage companies to develop SSDs, controllers, NAND and other technologies fine-tuned to support GPUs, with an emphasis on higher IOPS (input/output operations per second) for AI inference. These will be fundamentally different from those for CPU-connected drives, where latency and capacity are the bigger focus points. This drive bifurcation also likely won’t be the last; expect to also see drives optimized for training or inference.

As in other technology markets, the changes are being driven by the rapid growth of AI and the equally rapidly growing need to boost the performance, efficiency and TCO of AI infrastructure. The total amount of SSD capacity inside data centers is expected to double to approximately 2 zettabytes by 2028, with the growth primarily fueled by AI.1 By that year, SSDs will account for 41% of the installed base of data center drives, up from 25% in 2023.1

Greater storage capacity, however, also potentially means more storage network complexity, latency, and storage management overhead. It also means potentially more power. In 2023, SSDs accounted for 4 terawatt hours of data center power, or around 25% of the 16 TWh consumed by storage. By 2028, SSDs are slated to account for 11 TWh, or 50%, of storage’s expected total for the year.1 While storage represents less than five percent of total data center power consumption, the total remains large and provides incentives for saving. Reducing storage power by even 1 TWh, or less than 10%, would save enough electricity to power 90,000 US homes for a year.2 Finding the precise balance between capacity, speed, power and cost will be critical for both AI data center operators and customers. Creating different categories of technologies becomes the first step toward optimizing products in a way that will be scalable.
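The power figures above can be cross-checked with a few lines of arithmetic. The sketch below uses only the numbers quoted in the paragraph; the implied 2028 storage total and the per-home consumption are derived values rather than figures from the cited sources, and the variable names are illustrative.

```python
# Cross-check of the storage power figures quoted above.
# Inputs are taken from the text; everything else is derived.

ssd_twh_2023 = 4.0        # SSD power in 2023 (TWh)
storage_twh_2023 = 16.0   # total storage power in 2023 (TWh)
ssd_twh_2028 = 11.0       # projected SSD power in 2028 (TWh)
ssd_share_2028 = 0.50     # projected SSD share of storage power in 2028
saving_twh = 1.0          # hypothetical saving discussed in the text
homes_powered = 90_000    # US homes powered for a year by that saving

# 2023 share of storage power consumed by SSDs.
ssd_share_2023 = ssd_twh_2023 / storage_twh_2023

# Storage total implied by "11 TWh, or 50%" (derived, not quoted).
storage_twh_2028 = ssd_twh_2028 / ssd_share_2028

# The text does not say which base "less than 10%" refers to,
# so compute the saving against both candidates.
saving_vs_ssd = saving_twh / ssd_twh_2028
saving_vs_storage = saving_twh / storage_twh_2028

# 1 TWh = 1e9 kWh; implied annual consumption per home (derived).
kwh_per_home = saving_twh * 1e9 / homes_powered

print(f"2023 SSD share of storage power: {ssd_share_2023:.0%}")
print(f"Implied 2028 storage total: {storage_twh_2028:.0f} TWh")
print(f"1 TWh vs. 2028 SSD power: {saving_vs_ssd:.1%}")
print(f"1 TWh vs. 2028 storage power: {saving_vs_storage:.1%}")
print(f"Implied use per home: {kwh_per_home:,.0f} kWh/year")
```

Whichever base is used, a 1 TWh saving stays under the 10% mark, and the implied figure of roughly 11,000 kWh per home per year is in the range usually cited for average US household electricity use.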