Posts Tagged 'data storage'

Fibre Channel: The #1 Choice for Mission-Critical Shared-Storage Connectivity

By Todd Owens, Director, Field Marketing, Marvell

Here at Marvell, we talk frequently with our customers and end users about I/O technology and connectivity. This includes presenting on I/O connectivity at industry events and delivering training to our OEMs and their channel partners. Often, when we discuss the latest innovations in Fibre Channel, audience questions center on how relevant Fibre Channel (FC) technology is in today’s enterprise data center. This is understandable, as many in the industry have been proclaiming the demise of Fibre Channel for several years. However, these claims are often misguided, stemming from a lack of understanding of the key attributes of FC technology that continue to make it the gold standard for mission-critical application environments.

From its inception several decades ago, FC technology has been designed to do one thing, and one thing only: provide secure, high-performance, and high-reliability server-to-storage connectivity. While the Fibre Channel industry is made up of a select few vendors, it has continued to invest and innovate in how FC products are designed and deployed. This isn’t limited to doubling bandwidth every couple of years; it also includes innovations that improve reliability, manageability, and security.

Marvell and Ingrasys Collaborate to Power Ceph Cluster with EBOF in Data Centers

By Khurram Malik, Senior Manager, Technical Marketing, Marvell

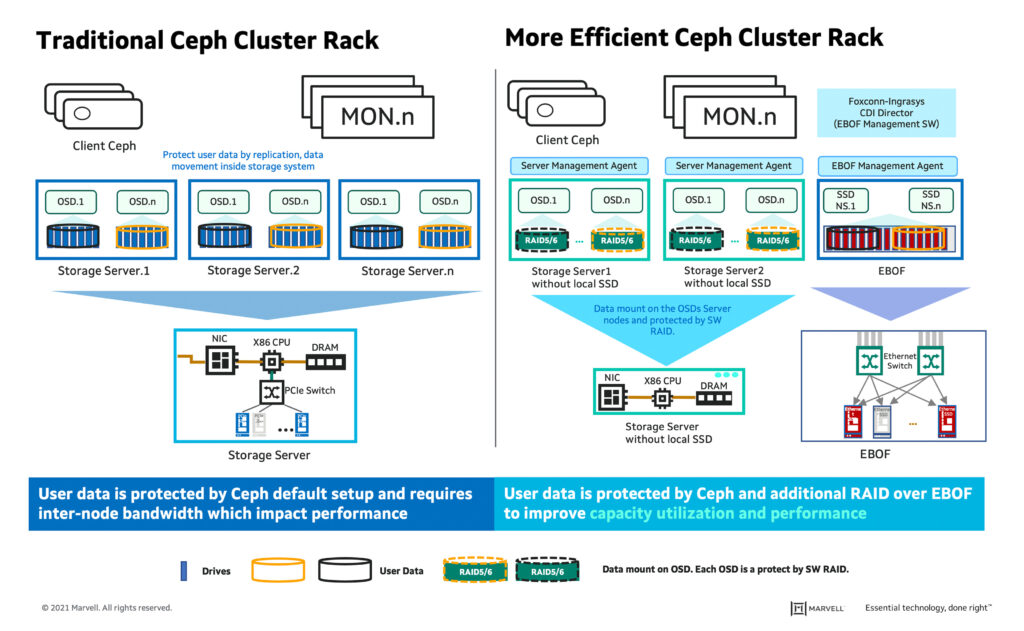

A massive amount of data is being generated at the edge, in the data center, and in the cloud, driving scale-out Software-Defined Storage (SDS), which in turn is enabling the industry to modernize data centers for large-scale deployments. Ceph is an open-source, massively scalable, distributed object storage SDS platform, contributed to by a wide range of major high-performance computing (HPC) and storage vendors. The Ceph BlueStore back end removes a Ceph cluster performance bottleneck by allowing users to store objects directly on raw block devices and bypass the file system layer, which is especially critical in boosting the adoption of NVMe SSDs in Ceph clusters. A Ceph cluster with Ethernet Bunch of Flash (EBOF) provides a scalable, high-performance, and cost-optimized solution and is a strong fit for many HPC applications. Traditional data storage technology leverages special-purpose compute, networking, and storage hardware to optimize performance and requires proprietary software for management and administration. As a result, IT organizations can neither scale out nor feasibly deploy petabyte- or exabyte-scale data storage from a CAPEX and OPEX perspective.

Ingrasys (a subsidiary of Foxconn) is collaborating with Marvell to introduce an Ethernet Bunch of Flash (EBOF) storage solution that enables a scale-out architecture for data center deployments. The EBOF architecture disaggregates storage from compute, provides limitless scalability and better utilization of NVMe SSDs, and deploys single-ported NVMe SSDs in a high-availability configuration at the enclosure level with no single point of failure.

Ceph is deployed on commodity hardware, scales to multi-petabyte storage clusters, and is highly flexible due to its distributed nature. Using EBOF in a Ceph cluster allows storage capacity to scale up and scale out at an optimized cost and facilitates high-bandwidth utilization of SSDs. A typical rack-level Ceph solution includes a networking switch for client and cluster connectivity; a minimum of three monitor nodes per cluster for high availability and resiliency; and Object Storage Daemon (OSD) hosts for data storage, replication, and data recovery operations. Traditionally, Ceph recommends a minimum of three replicas, with the copies stored on different storage nodes, but this results in lower usable capacity and consumes more bandwidth. Another challenge is that data redundancy and replication are compute-intensive and add significant latency. To overcome these challenges, Ingrasys has introduced a more efficient Ceph cluster rack developed with its management software, Ingrasys Composable Disaggregate Infrastructure (CDI) Director.
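To make the replication trade-off concrete, here is a minimal back-of-the-envelope sketch in Python. It is not taken from Ceph or from the Ingrasys solution. The function names and the example figures (24 SSDs of 8 TB each, a 10 GB client write) are hypothetical, and the model ignores metadata overhead, free-space reserves, and erasure coding. It assumes only that each object is stored as a fixed number of full copies and that monitors need a majority to form a quorum.

```python
def usable_capacity_tb(raw_tb: float, replicas: int) -> float:
    """Usable capacity when every object is stored as `replicas` full copies."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return raw_tb / replicas


def bytes_stored_for_write(client_bytes: int, replicas: int) -> int:
    """Total bytes the cluster must store (and move) for one client write."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return client_bytes * replicas


def monitor_failures_tolerated(monitors: int) -> int:
    """Monitor failures a majority-based quorum can survive."""
    if monitors < 1:
        raise ValueError("monitors must be at least 1")
    return (monitors - 1) // 2


if __name__ == "__main__":
    # Hypothetical enclosure: 24 SSDs x 8 TB each (illustrative numbers only).
    raw_tb = 24 * 8
    print(usable_capacity_tb(raw_tb, replicas=3))
    print(bytes_stored_for_write(10 * 10**9, replicas=3))
    print(monitor_failures_tolerated(3))
```

Under these assumptions, 192 TB of raw flash yields 64 TB of usable capacity with three replicas, a 10 GB client write results in 30 GB stored across the cluster, and a three-monitor cluster keeps its quorum through one monitor failure.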


Copyright © 2024 Marvell, All rights reserved.
