Archive for the 'Enterprise' Category

-

March 23, 2023

How Secure Are 5G Networks?

By Bill Hagerstrand, Security Solutions BU, Marvell

New Challenges and Solutions in an Open, Disaggregated Cloud-Native World

Time to grab a cup of coffee, as I describe how the transition towards open, disaggregated, and virtualized networks – also known as cloud-native 5G – has created new challenges in an already-heightened 4G-5G security environment.

5G networks move, process and store an ever-increasing amount of sensitive data as a result of faster connection speeds, the mission-critical nature of new enterprise, industrial and edge computing/AI applications, and the proliferation of 5G-connected IoT devices and data centers. At the same time, evolving architectures are creating new security threat vectors. The opening of the 5G network edge is driven by O-RAN standards, which disaggregate the radio units (RU), front-haul, mid-haul, and distributed units (DU). Virtualization of the 5G network further disaggregates hardware and software and introduces commodity servers with open-source software running in virtual machines (VMs) or containers from the DU to the core network.

These factors have necessitated improvements in 5G security standards that include additional protocols and new security features. But these measures alone are not enough to secure the 5G network in the cloud-native and quantum computing era. This blog details the growing need for cloud-optimized HSMs (Hardware Security Modules) and their many critical 5G use cases from the device to the core network.

-

February 14, 2023

Three Things Next-Generation Data Centers Need from Networking

By Amit Sanyal, Senior Director, Product Marketing, Marvell

Data centers are arguably the most important buildings in the world. Virtually everything we do—from ordinary business transactions to keeping in touch with relatives and friends—is accomplished, or at least assisted, by racks of equipment in large, low-slung facilities.

And whether they know it or not, your family and friends are causing data center operators to spend more money. But it’s for a good cause: it allows your family and friends (and you) to continue their voracious consumption, purchasing and sharing of every kind of content—via the cloud.

Of course, it’s not only the personal habits of your family and friends that are causing operators to spend. The enterprise is equally responsible. They’re collecting data like never before, storing it in data lakes and applying analytics and machine learning tools—both to improve user experience, via recommendations, for example, and to process and analyze that data for economic gain. This is on top of the relentless, expanding adoption of cloud services.

-

January 18, 2023

Network Visibility in Industrial Networks Using Time-Sensitive Networking

By Zvi Shmilovici Leib, Distinguished Engineer, Marvell

Industry 4.0 is redefining how industrial networks behave and how they are operated. Industrial networks are mission-critical by nature and have always required timely delivery and deterministic behavior. With Industry 4.0, these networks are becoming artificial intelligence-based, automated and self-healing, as well. As part of this evolution, industrial networks are experiencing the convergence of two previously independent networks: information technology (IT) and operational technology (OT). Time Sensitive Networking (TSN) is facilitating this convergence by enabling the use of Ethernet standards-based deterministic latency to address the needs of both the IT and OT realms.

However, the transition to TSN brings new challenges and requires fresh solutions for industrial network visibility. In this blog, we will focus on the ways in which visibility tools are evolving to address the needs of both IT managers and those who operate the new time-sensitive networks.

Why do we need visibility tools in industrial networks?

Networks are at the heart of the Industry 4.0 revolution, ensuring nonstop industrial automation operation. These industrial networks operate 24/7, frequently in remote locations with minimal human presence. The primary users of the industrial network are not humans but, rather, machines that cannot “open tickets.” And, of course, these machines are even more diverse than their human analogs. Each application and each type of machine can be considered a unique user, with different needs and different network “expectations.”

-

November 08, 2022

TSN and Prestera DX1500: A Bridge Across the IT/OT Divide

By Reza Eltejaein, Director, Product Marketing, Marvell

Manufacturers, power utilities and other industrial companies stand to gain the most in digital transformation. Manufacturing and construction industries account for 37 percent of total energy used globally*, for instance, more than any other sector. By fine-tuning operations with AI, some manufacturers can reduce carbon emissions by up to 20 percent and save millions of dollars in the process.

Industry, however, remains relatively un-digitized, and gaps often exist between operational technology (the robots, furnaces and other equipment on factory floors) and the servers and storage systems that make up a company’s IT footprint. Without that linkage, organizations can’t take advantage of Industrial Internet of Things (IIoT) technologies, also referred to as Industry 4.0. Of the 232.6 million pieces of fixed industrial equipment installed in 2020, only 10 percent were IIoT-enabled.

Why the gap? IT often hasn’t been good enough. Plants operate on exacting specifications. Engineers and plant managers need a “live” picture of operations with continual updates on temperature, pressure, power consumption and other variables from hundreds, if not thousands, of devices. Dropped, corrupted or mis-transmitted data can lead to unanticipated downtime (a $50 billion-a-year problem) as well as injuries, blackouts, and even explosions.

To date, getting around these problems has required industrial applications to build around proprietary standards and/or complex component sets. These systems work—and work well—but they are largely cut off from the digital transformation unfolding outside the factory walls.

The new Prestera® DX1500 switch family is aimed squarely at bridging this divide, with Marvell extending its modern borderless enterprise offering into industrial applications. Based on the IEEE 802.1AS-2020 standard for Time-Sensitive Networking (TSN), Prestera DX1500 combines the performance requirements of industry with the economies of scale and pace of innovation of standards-based Ethernet technology. Additionally, we integrated the CPU and the switch—and in some models the PHY—into a single chip to dramatically reduce power, board space and design complexity.

Done right, TSN will lower the CapEx and OpEx for industrial technology, open the door to integrating Industry 4.0 practices and simplify the process of bringing new equipment to market.

-

October 05, 2022

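Designing Energy-Efficient Chips

By Rebecca O'Neill, Global Head of ESG, Marvell

Today is Energy Efficiency Day. Energy, and specifically the electricity consumption required to power our chips, is what Marvell cares about most. Our goal is to reduce the power consumption of our products with each generation while delivering a given set of features.

Our products play an essential role in powering data infrastructure spanning cloud and enterprise data centers, 5G carrier infrastructure, automotive, and industrial and enterprise networking. When we design our products, we focus on innovative features that deliver new capabilities while also improving performance, capacity and security, ultimately improving energy efficiency during product use.

These innovations help make the world's data infrastructure more efficient and, in turn, reduce its impact on climate change. The use of our products by our customers contributes to Marvell's Scope 3 greenhouse gas emissions.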

-

August 31, 2020

Arm Processors in the Data Center

By Raghib Hussain, President, Products and Technologies

Last week, Marvell announced a change in our strategy for ThunderX, our Arm-based server-class processor product line. I’d like to take the opportunity to put some more context around that announcement, and our future plans in the data center market.

ThunderX is a product line that we started at Cavium, prior to our merger with Marvell in 2018. At Cavium, we had built many generations of successful processors for infrastructure applications, including our Nitrox security processor and OCTEON infrastructure processor. These processors have been deployed in the world’s most demanding data-plane applications such as firewalls, routers, SSL-acceleration, cellular base stations, and Smart NICs. Today, OCTEON is the most scalable and widely deployed multicore processor in the market.

-

July 28, 2020

Living on the Network Edge: Security

By Alik Fishman, Director of Product Management, Marvell

In our series Living on the Network Edge, we have looked at the trends driving Intelligence, Performance and Telemetry to the network edge. In this installment, let’s look at the changing role of network security and the ways integrating security capabilities in network access can assist in effectively streamlining policy enforcement, protection, and remediation across the infrastructure.

Cybersecurity threats are now a daily struggle for businesses, which are experiencing a huge increase in hacked and breached data from sources increasingly common in the workplace, like mobile and IoT devices. Not only is the number of security breaches going up, but the breaches are also increasing in severity and duration, with the average lifecycle from breach to containment lasting nearly a year [1] and presenting expensive operational challenges. With the digital transformation and emerging technology landscape (remote access, cloud-native models, proliferation of IoT devices, etc.) dramatically impacting networking architectures and operations, new security risks are introduced. To address this, enterprise infrastructure is on the verge of a remarkable change, elevating network intelligence, performance, visibility and security [2].

-

July 23, 2020

Telemetry: Can You See the Edge?

By Suresh Ravindran, Senior Director, Software Engineering

So far in our series Living on the Network Edge, we have looked at trends driving Intelligence and Performance to the network edge. In this blog, let’s look into the need for visibility into the network.

As automation trends evolve, the number of connected devices is seeing explosive growth. IDC estimates that there will be 41.6 billion connected IoT devices generating a whopping 79.4 zettabytes of data in 2025 [1]. A significant portion of this traffic will be video flows and sensor traffic which will need to be intelligently processed for applications such as personalized user services, inventory management, intrusion prevention and load balancing across a hybrid cloud model. Networking devices will need to be equipped with the ability to intelligently manage processing resources to efficiently handle huge amounts of data flows.

-

July 16, 2020

The Need for Speed at the Edge

By George Hervey, Principal Architect, Marvell Semiconductor

In the previous TIPS to Living on the Edge, we looked at the trend of driving network intelligence to the edge. With the capacity enabled by the latest wireless networks, like 5G, the infrastructure will enable the development of innovative applications. These applications often employ a high-frequency activity model, for example video or sensors, where the activities are often initiated by the devices themselves, generating massive amounts of data moving across the network infrastructure. Cisco’s VNI Forecast Highlights predicts that global business mobile data traffic will grow six-fold from 2017 to 2022, or at an annual growth rate of 42 percent [1], requiring a performance upgrade of the network.
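
As a quick sanity check on the forecast arithmetic, the sketch below relates the two figures quoted above: six-fold growth over the five years from 2017 to 2022, and a 42 percent annual growth rate. The function names are illustrative and are not taken from the Cisco report.

```python
def implied_cagr(growth_factor: float, years: int) -> float:
    """Annual growth rate that compounds to growth_factor over the given years."""
    return growth_factor ** (1.0 / years) - 1.0


def total_growth(annual_rate: float, years: int) -> float:
    """Overall multiplier after compounding annual_rate for the given years."""
    return (1.0 + annual_rate) ** years


if __name__ == "__main__":
    years = 2022 - 2017  # five compounding periods
    print(f"Six-fold over {years} years -> {implied_cagr(6.0, years):.1%} per year")
    print(f"42% per year over {years} years -> {total_growth(0.42, years):.2f}x")
```

Compounding 42 percent for five years gives a little under six-fold growth, and six-fold growth implies a rate of roughly 43 percent, so the two rounded figures are consistent with each other.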

-

July 08, 2020

Bringing Network Intelligence and Processing to the Edge

By George Hervey, Principal Architect, Marvell Semiconductor

The mobile phone has become an essential part of our lives as we move towards more advanced stages of the “always on, always connected” model. Our phones provide instant access to data and communication mediums, and that access influences the decisions we make and, ultimately, our behavior.

According to Cisco, global mobile networks will support more than 12 billion mobile devices and IoT connections by 2022 [1]. And these mobile devices will support a variety of functions. Already, our phones replace gadgets and enable services. Why carry around a wallet when your phone can provide Apple Pay, Google Pay or make an electronic payment? Who needs to carry car keys when your phone can unlock and start your car or open your garage door? Applications now also include live streaming services that enable VR/AR experiences and sharing in real time. While future services and applications seem unlimited to the imagination, they require next-generation data infrastructure to support and facilitate them.

-

June 07, 2018

Versatile New Ethernet Switch Simultaneously Addresses Multiple Industry Sectors

By Ran Gu, Marketing Director of Switching Product Line, Marvell

Due to ongoing technological progression and underlying market dynamics, Gigabit Ethernet (GbE) technology with 10 Gigabit uplink speeds is starting to proliferate into the networking infrastructure across a multitude of different applications where elevated levels of connectivity are needed: SMB switch hardware, industrial switching hardware, SOHO routers, enterprise gateways and uCPEs, to name a few. The new Marvell® Link Street™ 88E6393X, which has a broad array of functionality, scalability and cost-effectiveness, provides a compelling switch IC solution with the scope to serve multiple industry sectors.

The 88E6393X switch IC incorporates both 1000BASE-T PHY and 10 Gbps fiber port capabilities, while requiring only 60% of the power budget necessitated by competing solutions. Despite its compact package, this new switch IC offers 8 triple speed (10/100/1000) Ethernet ports, plus 3 XFI/SFI ports, and has a built-in 200 MHz microprocessor. Its SFI support means that the switch can connect to a fiber module without the need to include an external PHY - thereby saving space and bill-of-materials (BoM) costs, as well as simplifying the design. It complies with the IEEE 802.1BR port extension standard and can also play a pivotal role in lowering the management overhead and keeping operational expenditures (OPEX) in check. In addition, it includes L3 routing support for IP forwarding purposes.

Adherence to the latest time sensitive networking (TSN) protocols (such as 802.1AS, 802.1Qat, 802.1Qav and 802.1Qbv) enables delivery of the low latency operation mandated by industrial networks. The 256 entry ternary content-addressable memory (TCAM) allows for real-time, deep packet inspection (DPI) and policing of the data content being transported over the network (with access control and policy control lists being referenced). The denial of service (DoS) prevention mechanism is able to detect illegal packets and mitigate the security threat of DoS attacks.
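
To illustrate how a ternary match works in principle, here is a minimal software sketch of a TCAM-style lookup: each entry carries a value, a mask of "care" bits, and an action, and the first matching entry wins. The class names, the IPv4 key, and the example rules are assumptions made for illustration; they do not describe the 88E6393X's actual key format or programming interface.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class TcamEntry:
    value: int   # bit pattern to compare against
    mask: int    # 1 = bit must match, 0 = "don't care"
    action: str  # illustrative action label, e.g. "permit" or "deny"


class Tcam:
    """Software model of a small TCAM; real hardware compares all entries in parallel."""

    def __init__(self, capacity: int = 256):
        self.capacity = capacity
        self.entries: List[TcamEntry] = []

    def add(self, entry: TcamEntry) -> None:
        if len(self.entries) >= self.capacity:
            raise OverflowError("TCAM is full")
        self.entries.append(entry)

    def lookup(self, key: int) -> Optional[str]:
        # The lowest-index (highest-priority) matching entry decides the action.
        for entry in self.entries:
            if (key & entry.mask) == (entry.value & entry.mask):
                return entry.action
        return None


def ip_to_int(address: str) -> int:
    """Convert a dotted-quad IPv4 address to a 32-bit integer key."""
    return int(ipaddress.IPv4Address(address))


if __name__ == "__main__":
    tcam = Tcam()
    # Hypothetical policy: drop anything destined to 10.0.0.0/8, permit the rest.
    tcam.add(TcamEntry(ip_to_int("10.0.0.0"), 0xFF000000, "deny"))
    tcam.add(TcamEntry(0, 0x00000000, "permit"))  # all bits "don't care"
    for dst in ("10.1.2.3", "192.168.1.1"):
        print(dst, "->", tcam.lookup(ip_to_int(dst)))
```

The "don't care" bits are what distinguish a ternary CAM from an exact-match table: a single entry can cover a whole prefix or any field combination, which is why a few hundred entries can express a useful access control policy.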

The 88E6393X device, working in conjunction with a high performance ARMADA® network processing system-on-chip (SoC), can offload some of the packet processing activities so that the CPU’s bandwidth can be better focused on higher level activities. Data integrity is upheld, thanks to the quality of service (QoS) support across 8 traffic classes. In addition, the switch IC presents a scalable solution. The 10 Gbps interfaces provide non-blocking uplink to make it possible to cascade several units together, thus creating higher port count switches (16, 24, etc.).

This new product release features a combination of small footprint, lower power consumption, extensive security and inherent flexibility to bring a highly effective switch IC solution for the SMB, enterprise, industrial and uCPE space.

-

April 02, 2018

Understanding Today's Network Telemetry Requirements

By Tal Mizrahi, Feature Definition Architect, Marvell

There have, in recent years, been fundamental changes to the way in which networks are implemented, as data demands have necessitated a wider breadth of functionality and elevated degrees of operational performance. Accompanying all this is a greater need for accurate measurement of such performance benchmarks in real time, plus in-depth analysis in order to identify and subsequently resolve any underlying issues before they escalate.

The rapidly accelerating speeds and rising levels of complexity that are being exhibited by today’s data networks mean that monitoring activities of this kind are becoming increasingly difficult to execute. Consequently more sophisticated and inherently flexible telemetry mechanisms are now being mandated, particularly for data center and enterprise networks.

A broad spectrum of different options is available when looking to extract telemetry material, whether that be passive monitoring, active measurement, or a hybrid approach. An increasingly common practice is the piggy-backing of telemetry information onto the data packets that are passing through the network. This tactic is being utilized within both in-situ OAM (IOAM) and in-band network telemetry (INT), as well as in an alternate marking performance measurement (AM-PM) context.
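
The piggy-backing idea can be sketched in a few lines: each transit node appends a small record to the packet as it forwards it, and the last node strips the records and reports them. This is a conceptual model only. The record fields, function names, and node names below are assumptions for illustration and do not follow the actual IOAM or INT header formats.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class HopRecord:
    node_id: str
    ingress_ns: int  # time the packet entered the node
    egress_ns: int   # time the packet left the node


@dataclass
class Packet:
    payload: bytes
    telemetry: List[HopRecord] = field(default_factory=list)


def forward(packet: Packet, node_id: str, ingress_ns: int, egress_ns: int) -> Packet:
    """Transit node: piggy-back this hop's record onto the data packet."""
    packet.telemetry.append(HopRecord(node_id, ingress_ns, egress_ns))
    return packet


def sink(packet: Packet) -> Tuple[bytes, List[Tuple[str, int]]]:
    """Last node: strip telemetry and return the payload plus per-hop residence times."""
    report = [(hop.node_id, hop.egress_ns - hop.ingress_ns) for hop in packet.telemetry]
    packet.telemetry = []
    return packet.payload, report


if __name__ == "__main__":
    pkt = Packet(b"user data")
    forward(pkt, "leaf-1", 1_000, 1_400)
    forward(pkt, "spine-1", 2_000, 2_900)
    forward(pkt, "leaf-2", 3_500, 3_800)
    payload, report = sink(pkt)
    print(payload, report)
```

Because the measurements travel with real traffic, they reflect the path and queuing that the traffic actually experienced, at the cost of extra bytes per packet.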

At Marvell, our approach is to provide a diverse and versatile toolset through which a wide variety of telemetry approaches can be implemented, rather than being confined to a specific measurement protocol. To learn more about this subject, including longstanding passive and active measurement protocols, and the latest hybrid-based telemetry methodologies, please view the video below and download our white paper.

WHITE PAPER, Network Telemetry Solutions for Data Center and Enterprise Networks

-

January 11, 2018

Storing the World's Data

By Marvell PR Team

Storage is the foundation for a data-centric world, but how tomorrow’s data will be stored is the subject of much debate. What is clear is that data growth will continue to rise significantly. According to a report compiled by IDC titled ‘Data Age 2025’, the amount of data created will grow at an almost exponential rate. This amount is predicted to surpass 163 Zettabytes by the middle of the next decade (which is almost 8 times what it is today, and nearly 100 times what it was back in 2010). Increasing use of cloud-based services, the widespread roll-out of Internet of Things (IoT) nodes, virtual/augmented reality applications, autonomous vehicles, machine learning and the whole ‘Big Data’ phenomenon will all play a part in the new data-driven era that lies ahead.

Further down the line, the building of smart cities will lead to an additional ramp up in data levels, with highly sophisticated infrastructure being deployed in order to alleviate traffic congestion, make utilities more efficient, and improve the environment, to name a few. A very large proportion of the data of the future will need to be accessed in real-time. This will have implications for the technology utilized and also for where the stored data is situated within the network. Additionally, there are serious security considerations that need to be factored in, too.

So that data centers and commercial enterprises can keep overhead under control and make operations as efficient as possible, they will look to follow a tiered storage approach, using the most appropriate storage media so as to lower the related costs. Decisions on the media utilized will be based on how frequently the stored data needs to be accessed and the acceptable degree of latency. This will require the use of numerous different technologies to make it fully economically viable - with cost and performance being important factors.

There are now a wide variety of different storage media options out there. In some cases these are long established while in others they are still in the process of emerging. Hard disk drives (HDDs) in certain applications are being replaced by solid state drives (SSDs), and with the migration from SATA to NVMe in the SSD space, NVMe is enabling the full performance capabilities of SSD technology. HDD capacities are continuing to increase substantially and their overall cost effectiveness also adds to their appeal. The immense data storage requirements that are being warranted by the cloud mean that HDD is witnessing considerable traction in this space.

There are other forms of memory on the horizon that will help to address the challenges that increasing storage demands will set. These range from higher capacity 3D stacked flash to completely new technologies, such as phase-change with its rapid write times and extensive operational lifespan. The advent of NVMe over fabrics (NVMf) based interfaces offers the prospect of high bandwidth, ultra-low latency SSD data storage that is at the same time extremely scalable.

Marvell was quick to recognize the ever growing importance of data storage and has continued to make this sector a major focus moving forwards, and has established itself as the industry’s leading supplier of both HDD controllers and merchant SSD controllers.

Within a period of only 18 months after its release, Marvell managed to ship over 50 million of its 88SS1074 SATA SSD controllers with NANDEdge™ error-correction technology. Thanks to its award-winning 88NV11xx series of small form factor DRAM-less SSD controllers (based on a 28nm CMOS semiconductor process), the company is able to offer the market high performance NVMe memory controller solutions that are optimized for incorporation into compact, streamlined handheld computing equipment, such as tablet PCs and ultra-books. These controllers are capable of supporting read speeds of 1600MB/s, while only drawing minimal power from the available battery reserves. Marvell offers solutions like its 88SS1092 NVMe SSD controller designed for new compute models that enable the data center to share storage data to further maximize cost and performance efficiencies.

The unprecedented growth in data means that more storage will be required. Emerging applications and innovative technologies will drive new ways of increasing storage capacity, improving latency and ensuring security. Marvell is in a position to offer the industry a wide range of technologies to support data storage requirements, addressing both SSD and HDD implementations and covering all accompanying interface types from SAS and SATA through to PCIe and NVMe.

Check out www.marvell.com to learn more about how Marvell is storing the world’s data.

-

November 06, 2017

The USR-Alliance – Enabling an Open Multi-Chip Module (MCM) Ecosystem

By Gidi Navon, Senior Principal Architect, Marvell

The semiconductor industry is witnessing exponential growth and rapid changes to its bandwidth requirements, as well as increasing design complexity, emergence of new processes and integration of multi-disciplinary technologies. All this is happening against a backdrop of shorter development cycles and fierce competition. Other technology-driven industry sectors, such as software and hardware, are addressing similar challenges by creating open alliances and open standards. This blog does not attempt to list all the open alliances that now exist -- the Open Compute Project, Open Data Path and the Linux Foundation are just a few of the most prominent examples. One technological area that still hasn’t embraced such open collaboration is Multi-Chip-Module (MCM), where multiple semiconductor dies are packaged together, thereby creating a combined system in a single package.

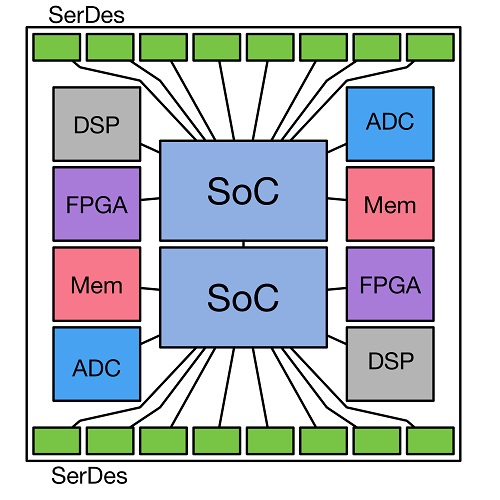

The MCM concept has been around for a while, generating multiple technological and market benefits, including:

- Improved yield - Instead of creating large monolithic dies with low yield and higher cost (which sometimes cannot even be fabricated), splitting the silicon into multiple die can significantly improve the yield of each building block and the combined solution. Better yield consequently translates into reductions in costs.

- Optimized process - The final MCM product is a mix-and-match of units in different fabrication processes which enables optimizing of the process selection for specific IP blocks with similar characteristics.

- Multiple fabrication plants - Different fabs, each with its own unique capabilities, can be utilized to create a given product.

- Product variety - New products are easily created by combining different numbers and types of devices to form innovative and cost‑optimized MCMs.

- Short product cycle time - Dies can be upgraded independently, which promotes ease in the addition of new product capabilities and/or the ability to correct any issues within a given die. For example, integrating a new type of I/O interface can be achieved without having to re-spin other parts of the solution that are stable and don’t require any change (thus avoiding waste of time and money).

- Economy of scale - Each die can be reused in multiple applications and products, increasing its volume and yield as well as the overall return on the initial investment made in its development.
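
The yield argument in the list above can be made concrete with a simple Poisson defect model, in which the probability that a die is defect-free is exp(-area × defect density). This is a sketch under stated assumptions: the model choice, the defect density, and the die areas are illustrative values rather than figures from the post, and the calculation ignores packaging and assembly yield as well as the extra area needed for die-to-die interfaces.

```python
import math


def poisson_yield(area_cm2: float, defect_density_per_cm2: float) -> float:
    """Fraction of dies expected to be defect-free under a Poisson defect model."""
    return math.exp(-area_cm2 * defect_density_per_cm2)


def silicon_per_good_unit(die_area_cm2: float, dies_per_unit: int,
                          defect_density_per_cm2: float) -> float:
    """Average silicon area (cm^2) fabricated per good product, assuming each die
    is tested before assembly so that only good dies are packaged."""
    die_yield = poisson_yield(die_area_cm2, defect_density_per_cm2)
    return dies_per_unit * die_area_cm2 / die_yield


if __name__ == "__main__":
    d0 = 0.2  # assumed defects per cm^2
    monolithic = silicon_per_good_unit(6.0, 1, d0)  # one 6 cm^2 die
    mcm = silicon_per_good_unit(1.5, 4, d0)         # four 1.5 cm^2 dies
    print(f"Monolithic die yield: {poisson_yield(6.0, d0):.1%}")
    print(f"Small die yield:      {poisson_yield(1.5, d0):.1%}")
    print(f"Silicon per good unit: {monolithic:.1f} cm^2 vs {mcm:.1f} cm^2")
```

With these assumed numbers, the monolithic die yields roughly 30 percent while each small die yields roughly 74 percent, so the split design consumes well under half the silicon per good product.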

Sub-dividing large semiconductor devices and mounting them on an MCM has now become the new printed circuit board (PCB) - providing smaller footprint, lower power, higher performance and expanded functionality.

Now, imagine that the benefits listed above are not confined to a single chip vendor, but instead are shared across the industry as a whole. By opening and standardizing the interface between dies, it is possible to introduce a true open platform, wherein design teams in different companies, each specializing in different technological areas, are able to create a variety of new products beyond the scope of any single company in isolation.

This is where the USR Alliance comes into action. The alliance has defined an Ultra Short Reach (USR) link, optimized for communication across the very short distances between the components contained in a single package. This link provides high bandwidth with less power and smaller die size than existing very short reach (VSR) PHYs which cross package boundaries and connectors and need to deal with challenges that simply don’t exist inside a package. The USR PHY is based on a multi-wire differential signaling technique optimized for MCM environments.

There are many applications in which the USR link can be implemented. Examples include CPUs, switches and routers, FPGAs, DSPs, analog components and a variety of long reach electrical and optical interfaces.

Figure 1: Example of a possible MCM layout

Marvell is an active promoter member of the USR Alliance and is working to create an ecosystem of interoperable components, interconnects, protocols and software that will help the semiconductor industry bring more value to the market. The alliance is working on creating PHY, MAC and software standards and interoperability agreements in collaboration with the industry and other standards development organizations, and is promoting the development of a full ecosystem around USR applications (including certification programs) to ensure widespread interoperability.

To learn more about the USR Alliance visit: www.usr-alliance.org

-

October 19, 2017

Celebrating 20 Years of Wi-Fi – Part III

By Prabhu Loganathan, Senior Director of Marketing for Connectivity Business Unit, Marvell

Standardized in 1997, Wi-Fi has changed the way that we compute. Today, almost every one of us uses a Wi-Fi connection on a daily basis, whether it's for watching a show on a tablet at home, using our laptops at work, or even transferring photos from a camera. Millions of Wi-Fi-enabled products are being shipped each week, and it seems this technology is constantly finding its way into new device categories.

Since its humble beginnings, Wi-Fi has progressed at a rapid pace. While the initial standard allowed for just 2 Mbit/s data rates, today's Wi-Fi implementations allow for speeds in the order of Gigabits to be supported. This last installment in our three-part blog series covering the history of Wi-Fi will look at what is next for the wireless standard.

Gigabit Wireless

The latest 802.11 wireless technology to be adopted at scale is 802.11ac. It extends 802.11n, enabling improvements specifically in the 5 GHz band, with 802.11n technology used in the 2.4 GHz band for backwards compatibility. By sticking to the 5 GHz band, 802.11ac is able to benefit from a huge 160 MHz channel bandwidth, which would be impossible in the already crowded 2.4 GHz band. In addition, beamforming and support for up to 8 MIMO streams raises the speeds that can be supported. Depending on configuration, data rates can range from a minimum of 433 Mbit/s to multiple Gigabits in cases where both the router and the end-user device have multiple antennas.
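
The 433 Mbit/s figure can be reproduced from the OFDM parameters of the 802.11ac PHY. The sketch below is a worked calculation, not vendor code. The constants are supplied here as assumptions drawn from the 802.11ac specification rather than from this post: 234 data subcarriers in an 80 MHz channel (468 in 160 MHz), 256-QAM carrying 8 bits per subcarrier at coding rate 5/6, and a 3.6 microsecond symbol when the short guard interval is used.

```python
def vht_rate_mbps(data_subcarriers: int, bits_per_subcarrier: int,
                  coding_rate: float, spatial_streams: int,
                  symbol_duration_us: float) -> float:
    """PHY data rate in Mbit/s: information bits per OFDM symbol / symbol time."""
    bits_per_symbol = (data_subcarriers * bits_per_subcarrier
                       * coding_rate * spatial_streams)
    return bits_per_symbol / symbol_duration_us  # bits per microsecond == Mbit/s


# Assumed 802.11ac PHY constants (see lead-in).
DATA_SUBCARRIERS = {80: 234, 160: 468}  # channel width in MHz -> data subcarriers
BITS_256QAM = 8
RATE_5_6 = 5 / 6
SHORT_GI_SYMBOL_US = 3.6  # 3.2 us symbol + 0.4 us guard interval

if __name__ == "__main__":
    single = vht_rate_mbps(DATA_SUBCARRIERS[80], BITS_256QAM, RATE_5_6, 1,
                           SHORT_GI_SYMBOL_US)
    maximum = vht_rate_mbps(DATA_SUBCARRIERS[160], BITS_256QAM, RATE_5_6, 8,
                            SHORT_GI_SYMBOL_US)
    print(f"80 MHz, 1 stream:   {single:.1f} Mbit/s")
    print(f"160 MHz, 8 streams: {maximum:.1f} Mbit/s")
```

A single stream in an 80 MHz channel works out to about 433 Mbit/s, and eight streams in a 160 MHz channel to nearly 7 Gbit/s, which is where the "multiple Gigabits" upper range comes from.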

If that's not fast enough, the even more cutting edge 802.11ad standard (which is now starting to appear on the market) uses 60 GHz ‘millimeter wave’ frequencies to achieve data rates up to 7 Gbit/s, even without MIMO propagation. The major catch with this is that at 60 GHz frequencies, wireless range and penetration are greatly reduced.

Looking Ahead

Now that we've achieved Gigabit speeds, what's next? Besides high speeds, the IEEE 802.11 working group has recognized that low speed, power efficient communication is in fact also an area with a great deal of potential for growth. While Wi-Fi has traditionally been a relatively power-hungry standard, the upcoming protocols will have attributes that will allow it to target areas like the Internet of Things (IoT) market with much more energy efficient communication.

20 Years and Counting

Although it has been around for two whole decades as a standard, Wi-Fi has managed to constantly evolve and keep up with the times. From the dial-up era to broadband adoption, to smartphones and now as we enter the early stages of IoT, Wi-Fi has kept on developing new technologies to adapt to the needs of the market. If history can be used to give us any indication, then it seems certain that Wi-Fi will remain with us for many years to come.

-

October 11, 2017

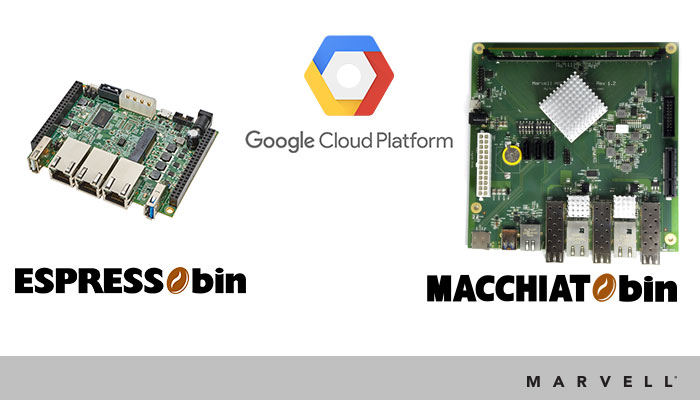

Further Strengthening the Enterprise with IoT Intelligence by Supporting Google Cloud IoT Core Public Beta on the ESPRESSObin and MACCHIATObin Community Platforms

By Aviad Enav Zagha, Sr. Director Embedded Processors Product Line Manager, Networking Group, Marvell

Though the projections made by market analysts still differ to a considerable degree, there is little doubt about the huge future potential that implementation of Internet of Things (IoT) technology has within an enterprise context. It is destined to lead to billions of connected devices being in operation, all sending captured data back to the cloud, from which analysis can be undertaken or actions initiated. This will make existing business/industrial/metrology processes more streamlined and allow a variety of new services to be delivered.

With large numbers of IoT devices to deal with in any given enterprise network, the challenges of efficiently and economically managing them all without any latency issues, and ensuring that elevated levels of security are upheld, are going to prove daunting. In order to put the least possible strain on cloud-based resources, we believe the best approach is to divest some intelligence outside the core and place it at the enterprise edge, rather than following a purely centralized model. This arrangement places computing functionality much nearer to where the data is being acquired and makes a response to it considerably easier. IoT devices will then have a local edge hub that can reduce the overhead of real-time communication over the network. Rather than relying on cloud servers far away from the connected devices to take care of the ‘heavy lifting’, these activities can be done closer to home. Deterministic operation is maintained due to lower latency, bandwidth is conserved (thus saving money), and the likelihood of data corruption or security breaches is dramatically reduced.

Sensors and data collectors in the enterprise, industrial and smart city segments are expected to generate more than 1GB per day of information, some needing a response within a matter of seconds. Therefore, in order for the network to accommodate the large amount of data, computing functionalities will migrate from the cloud to the network edge, forming a new market of edge computing.

In order to accelerate the widespread propagation of IoT technology within the enterprise environment, Marvell now supports the multifaceted Google Cloud IoT Core platform. Cloud IoT Core is a fully managed service mechanism through which the management and secure connection of devices can be accomplished on the large scales that will be characteristic of most IoT deployments.

Through its IoT enterprise edge gateway technology, Marvell is able to provide the necessary networking and compute capabilities required (as well as the prospect of localized storage) to act as mediator between the connected devices within the network and the related cloud functions. By providing the control element needed, as well as collecting real-time data from IoT devices, the IoT enterprise gateway technology serves as a key consolidation point for interfacing with the cloud and also has the ability to temporarily control managed devices if an event occurs that makes cloud services unavailable. In addition, the IoT enterprise gateway can perform the role of a proxy manager for lightweight, rudimentary IoT devices that (in order to keep power consumption and unit cost down) may not possess any intelligence. Through the introduction of advanced ARM®-based community platforms, Marvell is able to facilitate enterprise implementations using Cloud IoT Core. The recently announced Marvell MACCHIATObin™ and Marvell ESPRESSObin™ community boards support open source applications, local storage and networking facilities. At the heart of each of these boards is Marvell’s high performance ARMADA® system-on-chip (SoC) that supports Google Cloud IoT Core Public Beta.

Via Cloud IoT Core, along with other related Google Cloud services (including Pub/Sub, Dataflow, Bigtable, BigQuery, Data Studio), enterprises can benefit from an all-encompassing IoT solution that addresses the collection, processing, evaluation and visualization of real-time data in a highly efficient manner. Cloud IoT Core features certificate-based authentication and transport layer security (TLS), plus an array of sophisticated analytical functions.

Over time, the enterprise edge is going to become more intelligent. Consequently, mediation between IoT devices and the cloud will be needed, as will cost-effective processing and management. With the combination of Marvell’s proprietary IoT gateway technology and Google Cloud IoT Core, it is now possible to migrate a portion of network intelligence to the enterprise edge, leading to various major operational advantages.

Please visit MACCHIATObin Wiki and ESPRESSObin Wiki for instructions on how to connect to Google’s Cloud IoT Core Public Beta platform.

-

August 31, 2017

Securing Embedded Storage with Hardware Encryption

By Jeroen Dorgelo, Director of Strategy, Storage Group, Marvell

For industrial, military and a multitude of modern business applications, data security is of course incredibly important. While software based encryption often works well for consumer and some enterprise environments, in the context of the embedded systems used in industrial and military applications, something that is of a simpler nature and is intrinsically more robust is usually going to be needed.

Self encrypting drives utilize on-board cryptographic processors to secure data at the drive level. This not only increases drive security automatically, but does so transparently to the user and host operating system. By automatically encrypting data in the background, they thus provide the simple to use, resilient data security that is required by embedded systems.

Embedded vs Enterprise Data Security

Both embedded and enterprise storage often require strong data security. Depending on the industry sectors involved, this is often related to the securing of customer (or possibly patient) privacy, military data or business data. However, that is where the similarities end. Embedded storage is often used in completely different ways from enterprise storage, thereby leading to distinctly different approaches to how data security is addressed.

Enterprise storage usually consists of racks of networked disk arrays in a data center, while embedded storage is often simply a solid state drive (SSD) installed into an embedded computer or device. The physical security of the data center can be controlled by the enterprise, and software access control to enterprise networks (or applications) is also usually implemented. Embedded devices, on the other hand - such as tablets, industrial computers, smartphones, or medical devices - are often used in the field, in what are comparatively unsecure environments. Data security in this context has no choice but to be implemented down at the device level.

Hardware Based Full Disk Encryption

For embedded applications where access control is far from guaranteed, it is all about securing the data as automatically and transparently as possible.

Full disk, hardware based encryption has shown itself to be the best way of achieving this goal. Full disk encryption (FDE) achieves high degrees of both security and transparency by encrypting everything on a drive automatically. Whereas file based encryption requires users to choose files or folders to encrypt, and also calls for them to provide passwords or keys to decrypt them, FDE works completely transparently. All data written to the drive is encrypted, yet, once authenticated, a user can access the drive as easily as an unencrypted one. This not only makes FDE much easier to use, but also means that it is a more reliable method of encryption, as all data is automatically secured. Files that the user forgets to encrypt or doesn’t have access to (such as hidden files, temporary files and swap space) are all nonetheless automatically secured.

While FDE can be achieved through software techniques, hardware based FDE performs better, and is inherently more secure. Hardware based FDE is implemented at the drive level, in the form of a self encrypting SSD. The SSD controller contains a hardware cryptographic engine, and also stores private keys on the drive itself.

Because software based FDE relies on the host processor to perform encryption, it is usually slower - whereas hardware based FDE has much lower overhead as it can take advantage of the drive’s integrated crypto-processor. Hardware based FDE is also able to encrypt the master boot record of the drive, which software based encryption is unable to do.

Hardware centric FDEs are transparent to not only the user, but also the host operating system. They work transparently in the background and no special software is needed to run them. Besides helping to maximize ease of use, this also means sensitive encryption keys are kept separate from the host operating system and memory, as all private keys are stored on the drive itself.

Improving Data Security

Besides providing the transparent, easy to use encryption that is now being sought, hardware based FDE also has specific benefits for data security in modern SSDs. NAND cells have a finite service life and modern SSDs use advanced wear leveling algorithms to extend this as much as possible. Instead of overwriting the NAND cells as data is updated, write operations are constantly moved around a drive, often resulting in multiple copies of a piece of data being spread across an SSD as a file is updated. This wear leveling technique is extremely effective, but it makes file based encryption and data erasure much more difficult to accomplish, as there are now multiple copies of data to encrypt or erase.

FDE solves both these encryption and erasure issues for SSDs. Since all data is encrypted, there are not any concerns about the presence of unencrypted data remnants. In addition, since the encryption method used (which is generally 256-bit AES) is extremely secure, erasing the drive is as simple to do as erasing the private keys.
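The idea that erasing the key erases the drive can be illustrated with a toy model. The sketch below is an assumption-laden illustration only: the class `ToySelfEncryptingDrive` is hypothetical, and a SHA-256 counter keystream stands in for the drive's hardware AES engine, so it is neither real AES nor secure. It shows only that once the key is replaced, the ciphertext left on the media no longer decrypts to the original data.

```python
import hashlib
import os

class ToySelfEncryptingDrive:
    """Toy model of crypto-erase. NOT real AES and NOT secure: a SHA-256
    counter keystream stands in for the drive's hardware crypto engine."""

    def __init__(self):
        self._media_key = os.urandom(32)  # 256-bit key kept inside the "drive"
        self._blocks = {}                 # block address -> ciphertext

    def _keystream(self, address, length):
        # Derive a per-block keystream from the key, address and a counter.
        stream = b""
        counter = 0
        while len(stream) < length:
            stream += hashlib.sha256(
                self._media_key
                + address.to_bytes(8, "big")
                + counter.to_bytes(8, "big")
            ).digest()
            counter += 1
        return stream[:length]

    def write(self, address, plaintext):
        # Everything written is encrypted before it reaches the media.
        ks = self._keystream(address, len(plaintext))
        self._blocks[address] = bytes(p ^ k for p, k in zip(plaintext, ks))

    def read(self, address):
        ciphertext = self._blocks[address]
        ks = self._keystream(address, len(ciphertext))
        return bytes(c ^ k for c, k in zip(ciphertext, ks))

    def crypto_erase(self):
        # Discard the old key. The ciphertext stays on the media,
        # but it can no longer be decrypted.
        self._media_key = os.urandom(32)

drive = ToySelfEncryptingDrive()
drive.write(0, b"patient record 42")
before = drive.read(0)
drive.crypto_erase()
after = drive.read(0)
print(before)           # the original plaintext
print(after == before)  # whether the data survived the erase
```

Note that no block is overwritten during the erase, which is why stray copies left behind by wear leveling stop being a concern in this model.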

Solving Embedded Data Security

Embedded devices often present considerable security challenges to IT departments, as these devices are often used in uncontrolled environments, possibly by unauthorized personnel. Whereas enterprise IT has the authority to implement enterprise wide data security policies and access control, it is usually much harder to implement these techniques for embedded devices situated in industrial environments or used out in the field.

The simple solution for data security in embedded applications of this kind is hardware based FDE. Self encrypting drives with hardware crypto-processors have minimal processing overhead and operate completely in the background, transparent to both users and host operating systems. Their ease of use also translates into improved security, as administrators do not need to rely on users to implement security policies, and private keys are never exposed to software or operating systems.

-

July 17, 2017

Rightsizing Ethernet

By George Hervey, Principal Architect, Marvell

Implementation of cloud infrastructure is occurring at a phenomenal rate, outpacing Moore's Law. Annual growth is believed to be 30x and as much as 100x in some cases. In order to keep up, cloud data centers are having to scale out massively, with hundreds, or even thousands of servers becoming a common sight.

At this scale, networking becomes a serious challenge. More and more switches are required, thereby increasing capital costs, as well as management complexity. To tackle the rising expense issues, network disaggregation has become an increasingly popular approach. By separating the switch hardware from the software that runs on it, vendor lock-in is reduced or even eliminated. OEM hardware could be used with software developed in-house, or from third party vendors, so that cost savings can be realized.

Though network disaggregation has tackled the immediate problem of hefty capital expenditures, it must be recognized that operating expenditures are still high. The number of managed switches basically stays the same. To reduce operating costs, the issue of network complexity has to also be tackled.

Network Disaggregation

Almost every application we use today, whether at home or in the work environment, connects to the cloud in some way. Our email providers, mobile apps, company websites, virtualized desktops and servers, all run on servers in the cloud.

For these cloud service providers, this incredible growth has been both a blessing and a challenge. As demand increases, Moore's law has struggled to keep up. Scaling data centers today involves scaling out - buying more compute and storage capacity, and subsequently investing in the networking to connect it all. The cost and complexity of managing everything can quickly add up.

Until recently, networking hardware and software had often been tied together. Buying a switch, router or firewall from one vendor would require you to run their software on it as well. Larger cloud service providers saw an opportunity. These players often had no shortage of skilled software engineers. At the massive scales they ran at, they found that buying commodity networking hardware and then running their own software on it would save them a great deal in terms of Capex.

This disaggregation of the software from the hardware may have been financially attractive, however it did nothing to address the complexity of the network infrastructure. There was still a great deal of room to optimize further.

802.1BR

Today's cloud data centers rely on a layered architecture, often in a fat-tree or leaf-spine structural arrangement. Rows of racks, each with top-of-rack (ToR) switches, are then connected to upstream switches on the network spine. The ToR switches are, in fact, performing simple aggregation of network traffic. Using relatively complex, energy consuming switches for this task results in a significant capital expense, as well as management costs and no shortage of headaches.

Through the port extension approach, outlined within the IEEE 802.1BR standard, the aim has been to streamline this architecture. By replacing ToR switches with port extenders, port connectivity is extended directly from the rack to the upstream. Management is consolidated onto the smaller number of switches located at the upper layer network spine, eliminating the dozens or possibly hundreds of managed switches at the rack level.
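As a rough, back-of-the-envelope illustration of that consolidation, the sketch below counts how many devices have to be managed individually with and without port extenders. The rack and spine counts are hypothetical, and the model assumes one ToR device per rack and that port extenders are managed entirely through the upstream switches.

```python
def managed_switches(racks, spine_switches, use_port_extenders):
    """Count individually managed switches in a two-tier layout.

    Assumes one top-of-rack device per rack (a simplification). With port
    extenders, the rack-level devices are managed via the upstream switches
    and so do not add to the count.
    """
    if use_port_extenders:
        return spine_switches
    return racks + spine_switches

# Hypothetical data center row: 100 racks served by 4 spine switches.
before = managed_switches(racks=100, spine_switches=4, use_port_extenders=False)
after = managed_switches(racks=100, spine_switches=4, use_port_extenders=True)
print(before, after)  # 104 4
```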

The reduction in switch management complexity of the port extender approach has been widely recognized, and various network switches on the market now comply with the 802.1BR standard. However, not all the benefits of this standard have actually been realized.

The Next Step in Network Disaggregation

Though many of the port extenders on the market today fulfill 802.1BR functionality, they do so using legacy components. Instead of being optimized for 802.1BR itself, they rely on traditional switches. As a consequence, this limits the potential cost and power benefits that the new architecture offers.

Designed from the ground up for 802.1BR, Marvell's Passive Intelligent Port Extender (PIPE) offering is specifically optimized for this architecture. PIPE is interoperable with 802.1BR compliant upstream bridge switches from all the industry’s leading OEMs. It enables fan-less, cost efficient port extenders to be deployed, which thereby provide upfront savings as well as ongoing operational savings for cloud data centers. Power consumption is lowered and switch management complexity is reduced by an order of magnitude.

The first wave in network disaggregation was separating switch software from the hardware that it ran on. 802.1BR's port extender architecture is bringing about the second wave, where ports are decoupled from the switches which manage them. The modular approach to networking discussed here will result in lower costs, reduced energy consumption and greatly simplified network management.

-

June 21, 2017

Making Better Use of Legacy Infrastructure

By Ron Cates

The flexibility offered by wireless networking is revolutionizing the enterprise space. High-speed Wi-Fi®, provided by standards such as IEEE 802.11ac and 802.11ax, makes it possible to deliver next-generation services and applications to users in the office, no matter where they are working.

However, the higher wireless speeds involved are putting pressure on the cabling infrastructure that supports the Wi-Fi access points around an office environment. 1 Gbit/s Ethernet was more than adequate for older wireless standards and applications. Now, with greater reliance on the new generation of Wi-Fi access points and their higher uplink speeds, the older infrastructure is starting to show strain. At the same time, in the server room itself, demand for high-speed storage and faster virtualized servers is placing pressure on the performance levels offered by the core Ethernet cabling that connects these systems together and to the wider enterprise infrastructure.

One option is to upgrade to a 10 Gbit/s Ethernet infrastructure. But this is a migration that can be prohibitively expensive. The Cat 5e cabling that exists in many office and industrial environments is not designed to cope with such elevated speeds. To make use of 10 Gbit/s equipment, that old cabling needs to come out and be replaced by a new copper infrastructure based on Cat 6a standards. Cat 6a cabling can support 10 Gbit/s Ethernet at the full range of 100 meters, whereas you would be lucky to run 10 Gbit/s at half that distance over a Cat 5e cable.

In contrast to data-center environments that are designed to cope easily with both server and networking infrastructure upgrades, enterprise cabling lying in ducts, in ceilings and below floors is hard to reach and swap out. This is especially true if you want to keep the business running while the switchover takes place.

Help is at hand with the emergence of the IEEE 802.3bz™ and NBASE-T® set of standards and the transceiver technology that goes with them. 802.3bz and NBASE-T make it possible to transmit at speeds of 2.5 Gbit/s or 5 Gbit/s across conventional Cat 5e or Cat 6 at distances up to the full 100 meters. The transceiver technology leverages advances in digital signal processing (DSP) to make these higher speeds possible without demanding a change in the cabling infrastructure.

The NBASE-T technology, a companion to the IEEE 802.3bz standard, incorporates novel features such as downshift, which responds dynamically to interference from other sources in the cable bundle. The result is lower speed. But the downshift technology has the advantage that it does not cut off communication unexpectedly, providing time to diagnose the problem interferer in the bundle and perhaps reroute it to sit alongside less sensitive cables that may carry lower-speed signals.

This is where the new generation of high-density transceivers comes in. There are now transceivers coming onto the market that support data rates all the way from legacy 10 Mbit/s Ethernet up to the full 5 Gbit/s of 802.3bz/NBASE-T - and will auto-negotiate the most appropriate data rate with the downstream device. This makes it easy for enterprise users to upgrade the routers and switches that support their core network without demanding upgrades to all the client devices. Further features, such as Virtual Cable Tester® functionality, make it easier to diagnose faults in the cabling infrastructure without resorting to the use of specialized network instrumentation.

Transceivers and PHYs designed for switches can now support eight 802.3bz/NBASE-T ports in one chip, thanks to the integration made possible by leading-edge processes. These transceivers are not only designed to be more cost-effective, but also consume far less power and PCB real estate than PHYs that were designed for 10 Gbit/s networks. This means they present a much more optimized solution with numerous benefits from a financial, thermal and logistical perspective.

The result is a networking standard that meshes well with the needs of modern enterprise networks - and lets that network and the equipment evolve at its own pace.

-

May 31, 2017

Further Empowering the Wireless Office

By Yaron Zimmerman, Senior Staff Product Line Manager, Marvell

In order to benefit from the greater convenience offered for employees and more straightforward implementation, office environments are steadily migrating towards wholesale wireless connectivity. Thanks to this, office staff will no longer be limited by where there are cables/ports available, resulting in a much higher degree of mobility. It will mean that they can remain constantly connected and their work activities won’t be hindered - whether they are at their desk, in a meeting or even in the cafeteria. This will make enterprises much better aligned with our modern working culture - where hot desking and bring your own device (BYOD) are becoming increasingly commonplace.

The main dynamic which is going to be responsible for accelerating this trend will be the emergence of 802.11ac Wave 2 Wi-Fi technology. With the prospect of exploiting Gigabit data rates (thereby enabling the streaming of video content, faster download speeds, higher quality video conferencing, etc.), it is clearly going to have considerable appeal. In addition, this protocol offers extended range and greater bandwidth through multi-user MIMO operation - so that a larger number of users can be supported simultaneously. This will be advantageous to the enterprise, as fewer access points per user will be required.

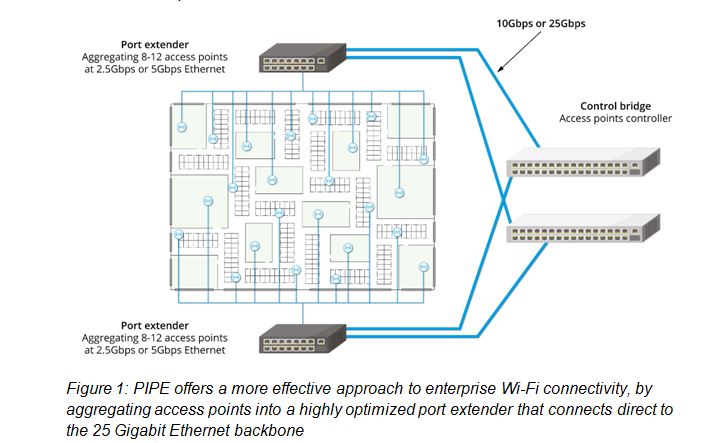

An example of the office floorplan for an enterprise/campus is shown in Figure 1 (with a large number of cubicles and some meeting rooms). Though scenarios vary, generally speaking an enterprise/campus is likely to occupy a total floor space of between 20,000 and 45,000 square feet. With one 802.11ac access point able to cover an area of 3000 to 4000 square feet, a wireless office would need a total of about 8 to 12 access points to be fully effective. This density should be more than acceptable for average voice and data needs. Supporting these access points will be a high capacity wireline backbone.
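The access point estimate is simple area arithmetic, sketched below as a rough sanity check. The helper name `access_points_needed` is hypothetical, and the calculation divides floor area by coverage area only; it ignores walls, coverage overlap and per-user capacity, all of which matter in a real site survey. The "typical" case uses the midpoints of the two ranges quoted above, which is an assumption rather than a figure from the post.

```python
import math

def access_points_needed(floor_area_sqft, coverage_per_ap_sqft):
    """Round up floor area divided by the area one access point covers."""
    return math.ceil(floor_area_sqft / coverage_per_ap_sqft)

# Extremes of the ranges quoted in the text.
fewest = access_points_needed(20_000, 4_000)   # small office, wide coverage
most = access_points_needed(45_000, 3_000)     # large office, narrow coverage

# Midpoints of both ranges (an assumption made for illustration).
typical = access_points_needed(32_500, 3_500)

print(fewest, typical, most)  # 5 10 15
```

The quoted figure of about 8 to 12 access points sits in the middle of the 5 to 15 range that these bounds produce.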

Increasingly, rather than employing traditional 10 Gigabit Ethernet infrastructure, the enterprise/campus backbone is going to be based on 25 Gigabit Ethernet technology. It is expected that this will see widespread uptake in newly constructed office buildings over the next 2-3 years as the related optics continue to become more affordable. Clearly enterprises want to tap into the enhanced performance offered by 802.11ac, but they have to do this while also adhering to stringent budgetary constraints. As the data capacity at the backbone is raised, so is the complexity of the hierarchical structure that needs to be placed underneath it, consisting of extensive intermediary switching technology. Well, that’s what conventional thinking would tell us.

Before embarking on a 25 Gigabit Ethernet/802.11ac implementation, enterprises have to be fully aware of what all this entails. As well as the initial investment associated with the hardware heavy arrangement just outlined, there are also the ongoing operational costs to consider. By aggregating the access points into a port extender that connects directly to the 25 Gigabit Ethernet backbone, and through it to a central control bridge switch, it is possible to significantly simplify the hierarchical structure - effectively eliminating a layer of unneeded complexity from the system.

Through its Passive Intelligent Port Extender (PIPE) technology Marvell is doing just that. This product offering is unique to the market, as other port extenders currently available were not originally designed for that purpose and therefore exhibit compromises in their performance, price and power. PIPE is, in contrast, an optimized solution that is able to fully leverage the IEEE 802.1BR bridge port extension standard - dispensing with the need for expensive intermediary switches between the control bridge and the access point level and reducing the roll-out costs as a result. It delivers markedly higher throughput, as the aggregating of multiple 802.11ac access points to 10 Gigabit Ethernet switches has been avoided. With fewer network elements to manage, there is some reduction in terms of the ongoing running costs too.

PIPE means that enterprises can future proof their office data communication infrastructure - starting with 10 Gigabit Ethernet, then upgrading to 25 Gigabit Ethernet when it is needed. The number of ports that it incorporates is a good match for the number of access points that an enterprise/campus will need to address the wireless connectivity demands of its work force. It enables dual homing functionality, so that elevated service reliability and resiliency are both assured through system redundancy. In addition, support for Power-over-Ethernet (PoE) allows access points to connect to both a power supply and the data network through a single cable - further facilitating the deployment process.

-

April 27, 2017

Challenges in Wi-Fi Deployment with 11ac Wave 2 and 11ax: A Cost-Effective Way to Upgrade to 2.5GBASE-T and 5GBASE-T

By Nick Ilyadis

The Insatiable Need for Bandwidth: Standards Trying to Keep Up

With the push for more and more Wi-Fi bandwidth, the WLAN industry, its standards committees and the Ethernet switch manufacturers are having a hard time keeping up with the need for more speed. As the industry prepares for upgrading to 802.11ac Wave 2 and the promise of 11ax, the ability of Ethernet over existing copper wiring to meet the increased transfer speeds is being challenged. And what really can’t keep up are the budgets that would be needed to physically rewire the millions of miles of cabling in the world today.

The Latest on the Latest Wireless Networking Standards: IEEE 802.11ac Wave 2 and 802.11ax

The latest 802.11ac IEEE standard is now in Wave 2. According to Webopedia’s definition: the 802.11ac -2013 update, or 802.11ac Wave 2, is an addendum to the original 802.11ac wireless specification that utilizes Multi-User, Multiple-Input, Multiple-Output (MU-MIMO) technology and other advancements to help increase theoretical maximum wireless speeds from 3.47 gigabits-per-second (Gbps), in the original spec, to 6.93 Gbps in 802.11ac Wave 2. The original 802.11ac spec itself served as a performance boost over the 802.11n specification that preceded it, increasing wireless speeds by up to 3x. As with the initial specification, 802.11ac Wave 2 also provides backward compatibility with previous 802.11 specs, including 802.11n.

IEEE has also noted that in the past two decades, the IEEE 802.11 wireless local area networks (WLANs) have also experienced tremendous growth with the proliferation of IEEE 802.11 devices, as a major Internet access for mobile computing. Therefore, the IEEE 802.11ax specification is under development as well. Giving equal time to Wikipedia, its definition of 802.11ax is: a type of WLAN designed to improve overall spectral efficiency in dense deployment scenarios, with a predicted top speed of around 10 Gbps. It works in 2.4GHz or 5GHz and in addition to MIMO and MU-MIMO, it introduces Orthogonal Frequency-Division Multiple Access (OFDMA) technique to improve spectral efficiency and also higher order 1024 Quadrature Amplitude Modulation (QAM) modulation support for better throughputs. Though the nominal data rate is just 37 percent higher compared to 802.11ac, the new amendment will allow a 4X increase of user throughput. This new specification is due to be publicly released in 2019.

Faster “Cats” Cat 5, 5e, 6, 6e and on

And yes, even cabling is moving up to keep up. You’ve got Cat 5, 5e, 6, 6e and 7 (search: Differences between CAT5, CAT5e, CAT6 and CAT6e Cables for specifics), but suffice it to say, each iteration is capable of moving more data faster, starting with the ubiquitous Cat 5 at 100Mbps at 100MHz over 100 meters of cabling to Cat 6e reaching 10,000 Mbps at 500MHz over 100 meters. Cat 7 can operate at 600MHz over 100 meters, with more “Cats” on the way. All of this of course, is to keep up with streaming, communications, mega data or anything else being thrown at the network.

How to Keep Up Cost-Effectively with 2.5GBASE-T and 5GBASE-T

What this all boils down to is this: no matter how fast the network standards or cables get, the migration to new technologies will always be balanced with the cost of attaining those speeds and technologies in the physical realm. In other words, it means weighing up the physical labor costs associated with upgrading all those millions of miles of cabling in buildings throughout the world, as well as the switches or other access points. The labor costs alone are a reason why companies often seek to stay in the wiring closet as long as possible, where the physical layer (PHY) devices, such as access points and switches, remain easier and more cost effective to switch out than existing cabling is to replace.

This is where Marvell steps in with a whole solution. Marvell’s products, including the Avastar wireless products, Alaska PHYs and Prestera switches, provide an optimized solution that will help support up to 2.5 and 5.0 Gbps speeds, using existing cabling. For example, the Marvell Avastar 88W8997 wireless processor was the industry's first 28nm, 11ac (wave-2), 2x2 MU-MIMO combo with full support for Bluetooth 4.2, and future BT5.0. To address switching, Marvell created the Marvell® Prestera® DX family of packet processors, which enables secure, high-density and intelligent 10GbE/2.5GbE/1GbE switching solutions at the access/edge and aggregation layers of Campus, Industrial, Small Medium Business (SMB) and Service Provider networks. And finally, the Marvell Alaska family of Ethernet transceivers are PHY devices which feature the industry's lowest power, highest performance and smallest form factor.

These transceivers help optimize form factors and offer multiple port and cable options, along with efficient power consumption and simple plug-and-play functionality. Together they give the broadband market an advanced and complete set of PHY products that support 2.5G and 5G data rates over Cat5e and Cat6 cables.

You mean, I don’t have to leave the wiring closet?

The longer upgrades can be made in the wiring closet, rather than by bringing in the electricians and cabling needed to rewire, the better companies can balance faster throughput against cost. The Marvell Avastar, Prestera and Alaska product families are ways to help address the upgrade to 2.5G- and 5GBASE-T over existing copper wire to keep up with that insatiable demand for throughput, without taking you out of the wiring closet. See you inside!

-

January 13, 2017

Marvell and Mythware Bring the “Classroom Cloud” to Primary and Secondary Schools

By Yong Luo

Recently, Marvell and its China-based customer Nanjing Mythware Information Technology Co., Ltd. (Mythware) cooperated to create a brand new wireless network interactive teaching tool: the Mythware Classroom Cloud. Compact and exquisitely designed, this wireless network teaching solution is the first educational hardware product built on Mythware’s more than 10 years of experience in education informatization and multimedia audio and video technologies. The introduction of the Mythware Classroom Cloud, as well as its supplementary interactive classroom software, effectively solves some common wireless network equipment-related teaching application challenges, such as instability, frequent dropping offline and data transmission errors. Thus, innovative interactive teaching can be successfully carried out wirelessly. This not only enhances the efficiency of teaching, but also brings new vigor and vitality into primary and secondary classrooms.

The Mythware Classroom Cloud incorporates a complete set of Marvell high-performance Wi-Fi enterprise-class wireless solutions, offering 2.4GHz and 5GHz operating frequency bands, and wireless throughput of up to 1900Mbit/s. The solution includes a dual-core 1.6GHz CPU – the ARMADA® 385. It also uses Marvell’s Avastar® 88W8864 – an 802.11ac 4X4 Wi-Fi chip. And, last but not least, the unit boasts a four-port Gigabit Ethernet transceiver, Marvell’s Alaska® 88E1543. Marvell's solutions have been widely used in the Cisco enterprise cloud and Linksys high-end routers.

The Marvell ARMADA 385 CPU chip, with super data processing and computing capability, is built into the Mythware Classroom Cloud. It provides strong protection for sending and receiving large-capacity cloud files in the classroom. The CPU also provides abundant interfaces, so you can connect hard drives directly through the SATA 3.0 interface, which helps the Mythware Classroom Cloud to support up to 8TB of storage.

This is especially important for schools with poor network conditions. Teachers can upload resources such as courseware to the Classroom Cloud’s hard drives before school time, and call on the resources directly in class. That enables students to enjoy multimedia teaching resources immediately and without interruption, effectively solving problems caused by inaccessible networks or limited network bandwidth. The Mythware Classroom Cloud enables full real-time interconnection between teacher's and student's end devices, sending and receiving documents, arranging homework and accessing teaching resources in real time, without needing them to be forwarded by campus servers.

One of the biggest highlights of the Mythware Classroom Cloud is the wireless performance packed into its lightweight body. Marvell’s Avastar 88W8864 802.11ac 4X4 wireless chip significantly improves bandwidth utilization and further increases data transmission capacity and reliability. It also provides trusted network support for transmitting a variety of multimedia files in the wireless teaching environment, ensuring the stability of classroom interactions.

One of its outstanding features is that teachers can use the wireless network in the classroom to send high-definition video (8Mbit/s) to more than 60 mobile terminal devices with different operating systems, completely in sync and without delay. At the same time, teachers no longer have to worry about screen-pausing problems when broadcasting a PPT screen or demonstrating 3D graphic models.
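As a rough sanity check on these figures, the sketch below works out the aggregate load of streaming 8Mbit/s video to 60 devices and compares it with the 1900Mbit/s peak wireless throughput quoted earlier. It assumes each device receives its own unicast copy of the stream (the article does not say whether unicast or multicast is used), and the function names are illustrative. Real-world Wi-Fi throughput is usually well below the peak rate, so treat this as an optimistic illustration only.

```python
# Back-of-the-envelope bandwidth budget using figures quoted in the article.
# Assumption (not from the article): each device gets its own unicast stream.

STREAM_MBIT_S = 8        # HD video bitrate quoted in the article
DEVICES = 60             # number of student devices quoted in the article
PEAK_WIFI_MBIT_S = 1900  # peak wireless throughput quoted in the article


def aggregate_load(stream_mbit_s: float, devices: int) -> float:
    """Total bitrate if every device receives a separate copy of the stream."""
    return stream_mbit_s * devices


def utilization(load_mbit_s: float, capacity_mbit_s: float) -> float:
    """Fraction of the capacity consumed by the load."""
    return load_mbit_s / capacity_mbit_s


load = aggregate_load(STREAM_MBIT_S, DEVICES)
share = utilization(load, PEAK_WIFI_MBIT_S)
print(f"Aggregate load: {load} Mbit/s ({share:.0%} of peak throughput)")
```

Under these assumptions the load comes to 480Mbit/s, roughly a quarter of the quoted peak rate.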

And, when teachers use some interactive features (such as group teaching, sharing the whiteboard, initiating discussion and quick answer, survey and evaluations) during the teaching process, the problems of intermittent playback and the network dropping offline are solved.

In addition, the Alaska 88E1543 Ethernet transceiver chip mounted in the Marvell solution provides stable and reliable Gigabit Ethernet connections, and Marvell’s 88PG877 power management chip provides voltage stability for the Mythware Classroom Cloud. It also supports flexible power supply modes: local AC and 802.3af PoE powering. With its special rotary chuck design, the Mythware Classroom Cloud equipment can be easily installed on classroom walls or ceilings.

Mythware was founded in 2007, and for the past 10 years its main business has been educational software. Its core product – classroom interactive system software – enjoys a market share of up to 95% in China. It also supports up to 24 different languages, and is exported to over 60 countries and regions. Domestic and global users now exceed 31 million. By 2017, Mythware plans to fully transform into an integrated hardware and software supplier focusing on intelligent hardware, big data and cloud platforms, and it will continue to release a large number of new hardware products and solutions. New opportunities for cooperation between Marvell and Mythware will continue to emerge.

-

January 09, 2017

Ethernet Darwinism: The Survival of the Fittest (or the Fastest)

By Michael Zimmerman, Vice President and General Manager, CSIBU, Marvell

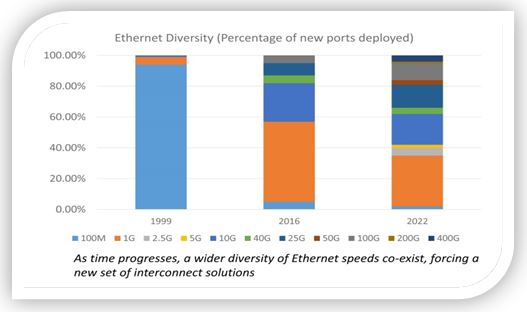

The most notable metric of Ethernet technology is the raw speed of communications. Ethernet took off in a meaningful way with 10BASE-T, which was used ubiquitously across many segments. With the introduction of 100BASE-T, the massive 10BASE-T installed base was replaced, showing a clear Darwinian effect of the fittest (fastest) displacing the older generation. However, when 1000BASE-T (GbE – Gigabit Ethernet) was introduced, contrary to industry experts’ predictions, it did not fully displace 100BASE-T, and the two speeds have co-existed for a long time (more than 10 years). In fact, 100BASE-T is still being deployed in many applications. The introduction, and slow ramp, of 10GBASE-T has not impacted the growth of GbE, and it is only recently that GbE ports began consistently growing year over year. This trend signaled a new evolution paradigm for Ethernet: the new doesn’t replace the old, and the co-existence of multiple variants is the general rule. The introduction of 40GbE and 25GbE added to the wide diversity of Ethernet speeds, and although 25GbE was rumored to displace 40GbE, it is expected that 40GbE ports will still be deployed over the next 10 years [1].

Hence, a new market reality evolved: there is less of a cannibalizing effect (i.e. newer speed cannibalizing the old), and more co-existence of multiple variants. This new diversity will require a set of solutions which allow effective support for multiple speed interconnect. Two critical capabilities will be needed:

Ability to scale down economically to a small number of ports [2]

Support of multiple Ethernet speeds

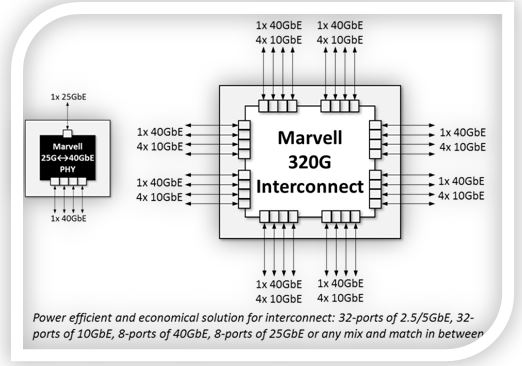

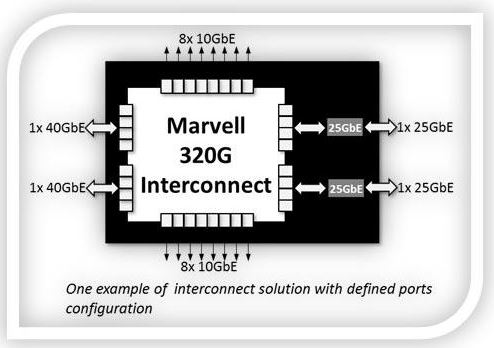

Marvell launched a new set of Ethernet interconnect solutions that meet this evolution pattern. The first products in the family are the Prestera® 98DX83xx 320G interconnect switch, and the Alaska® 88X5113 25G/40G Gearbox PHY. The 98DX83xx switch fans out to up to 32 ports of 10GbE or 8 ports of 40GbE, in an economical 24x20mm package, with power of less than 0.5W per 10G port.
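The port counts and the power figure above can be cross-checked with simple arithmetic. The following is a minimal sketch, not vendor tooling: it assumes that "320G" in the product name means 320Gbit/s of aggregate port bandwidth, and it assumes the per-10G power figure scales linearly with bandwidth. The function names are illustrative.

```python
# Cross-check of the 98DX83xx figures quoted in the article.
# Assumption: "320G" denotes 320 Gbit/s of aggregate port bandwidth.
# Assumption: power scales linearly with bandwidth at the per-10G figure.

SWITCH_CAPACITY_GBIT_S = 320
MAX_WATT_PER_10G_PORT = 0.5  # the article states "less than" this value


def aggregate_gbit_s(ports: int, speed_gbit_s: int) -> int:
    """Total bandwidth of `ports` ports running at `speed_gbit_s` each."""
    return ports * speed_gbit_s


def power_upper_bound_w(total_gbit_s: int) -> float:
    """Upper bound on switch power, scaling the per-10G figure linearly."""
    return (total_gbit_s / 10) * MAX_WATT_PER_10G_PORT


for ports, speed in [(32, 10), (8, 40)]:
    total = aggregate_gbit_s(ports, speed)
    print(f"{ports} x {speed}GbE = {total} Gbit/s, "
          f"fits: {total <= SWITCH_CAPACITY_GBIT_S}, "
          f"power < {power_upper_bound_w(total)} W")
```

Both quoted configurations add up to 320Gbit/s, and under the linear-scaling assumption the switch power stays below 16W at full fan-out.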

The 88X5113 Gearbox converts a single port of 40GbE to 25GbE. The combination of the two devices creates unique connectivity configurations for a myriad of Ethernet speeds and, most importantly, enables scaling down to a few ports. While data center-scale 25GbE switches have been widely available with 64 ports, 128 ports (and beyond), a new underserved market segment has evolved for lower port counts of 25GbE and 40GbE. Marvell has addressed this space with the new interconnect solution, allowing customers to configure any number of ports to different speeds while keeping the power envelope below 20W, at a fraction of the hardware/thermal footprint of comparable data center solutions. Low port count connectivity of 10GbE, 25GbE, and 40GbE is now well addressed by Marvell. Samples and development boards with SDK are ready, with the option of a complete package of application software.

[1] In the mega data center market, there is a cannibalization effect on former Ethernet speeds, and a mass migration to higher speeds. However, in the broader market, which includes private data centers, enterprises and carriers, multiple Ethernet speeds co-exist in many use cases.

[2] Ethernet switches with high port density of 10GbE and 25GbE are generally available. However, these solutions do not scale down well to sub-24 ports, where there is pent-up demand for devices such as those proposed here by Marvell.

-

May 01, 2016

Marvell Supports Enterprise-Grade Network Operating System in Linux Foundation Project

By Yaniv Kopelman, Networking and Connectivity CTO, Marvell

As organizations continue to invest in data centers to host a variety of applications, more demands are placed on the network infrastructure. Deploying white boxes is one approach organizations can take to meet their networking needs. White box switches are “blank” standard hardware that relies on a network operating system, commonly Linux-based. White box switches coupled with open network operating systems enable organizations to customize the networking features that support their objectives and streamline operations to fit their business.

Marvell is committed to powering the key technologies in the data center and the enterprise network, which is why we are proud to be a contributor to the OpenSwitch Project by The Linux Foundation. Built to run on Linux and open hardware, OpenSwitch is a full-featured network operating system (NOS) aimed at enabling the transition to disaggregated networks. OpenSwitch allows for freedom of innovation while maintaining stability and limiting vulnerability, and has a reliable architecture focused on modularity and availability. The open source OpenSwitch NOS allows developers to build networks that prioritize business-critical workloads and functions, and removes the burdens of interoperability issues and complex licensing structures that are inherent in proprietary systems.

As a provider of switches and PHYs for data center and campus networking markets, Marvell believes that the open source OpenSwitch NOS will help the deployment of white boxes in the data center and campus networks. Developers will be able to build networks that prioritize business-critical workloads and functions, removing the burdens of interoperability issues and complex licensing structures that are inherent in proprietary systems.